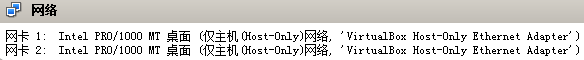

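This document is an upgrade test that builds on "Installing 11.1.0.6 RAC on OEL 5.10 and Upgrading to 11.1.0.7" (http://www.lynnlee.cn/?p=1000). It records every step of the upgrade: switching cluster management from the 11gR1 Clusterware model to Grid Infrastructure, migrating the existing ASM disk groups under grid, installing the 11.2.0.4 database software, upgrading the database, and so on. RAC changed substantially between 11gR1 and 11gR2, so this document installs, configures and upgrades following the management approach Oracle officially recommends for 11gR2.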

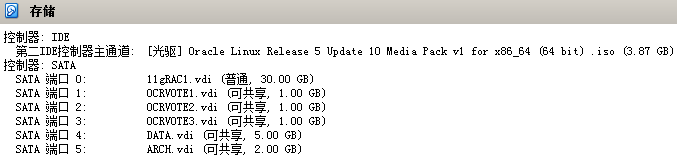

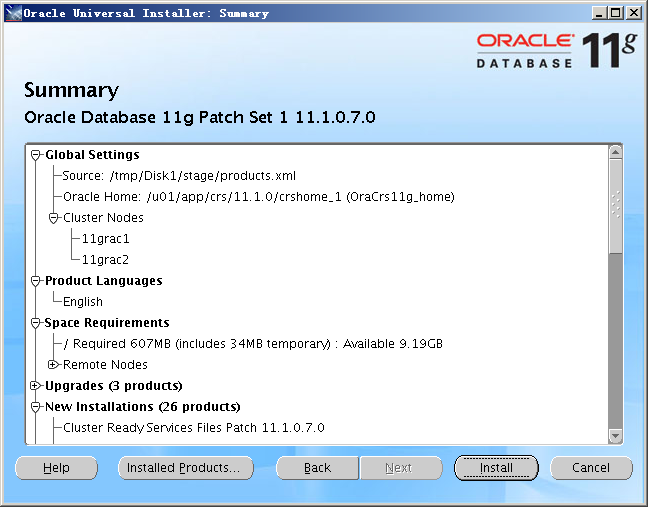

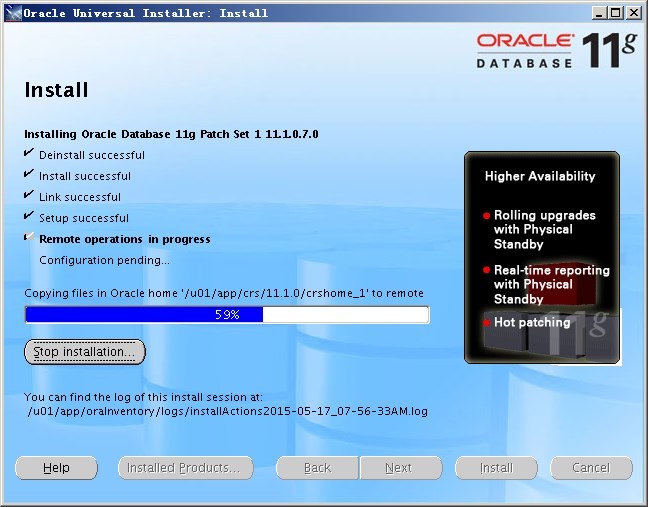

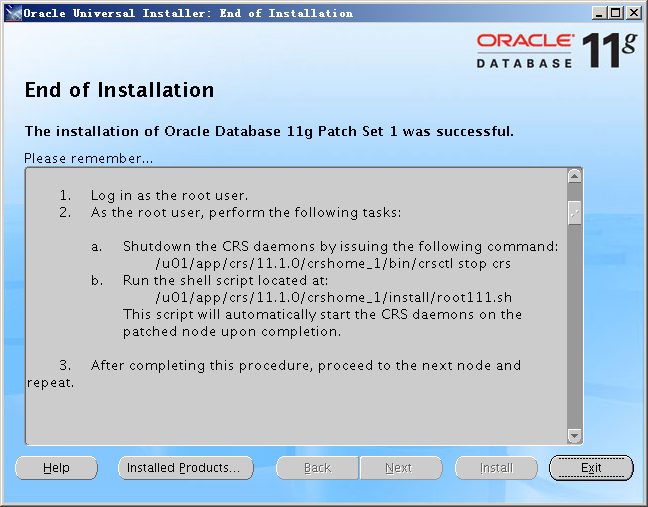

0. Environment

OEL 5.10

Database 11.1.0.7

Cluster 11.1.0.7

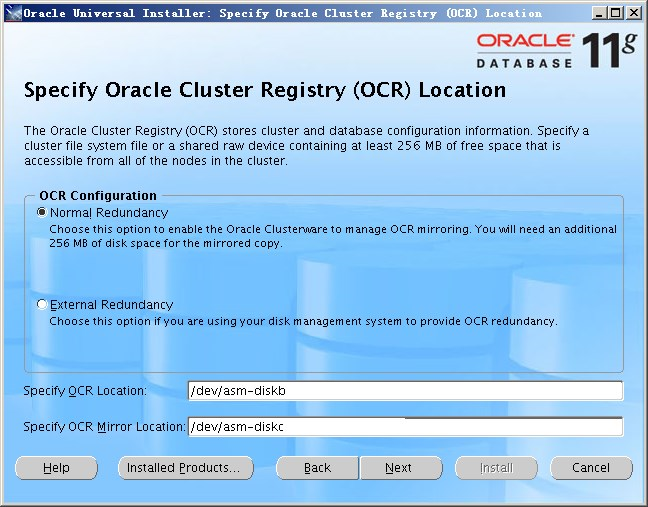

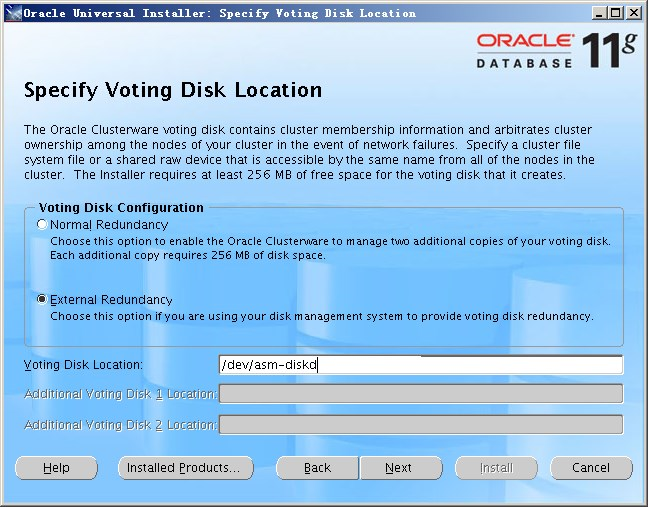

/dev/asm-diskb and /dev/asm-diskc: OCR

/dev/asm-diskd: voting disk

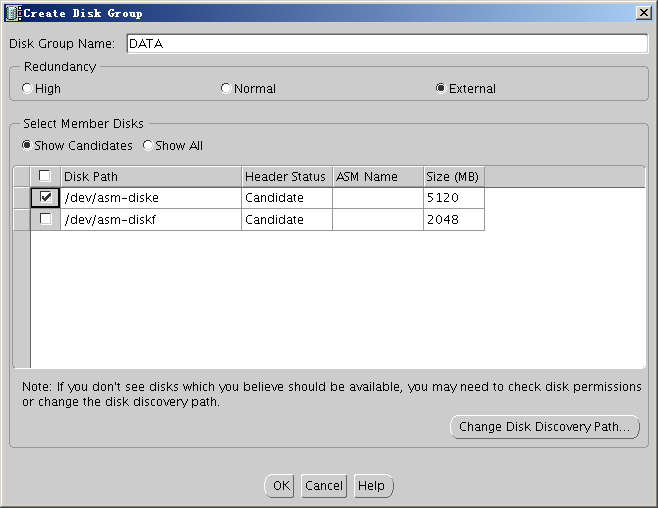

/dev/asm-diske: DATA ASM disk group (data files)

/dev/asm-diskf: FRA ASM disk group (flash recovery area)

1. Configure SCAN IP and DNS

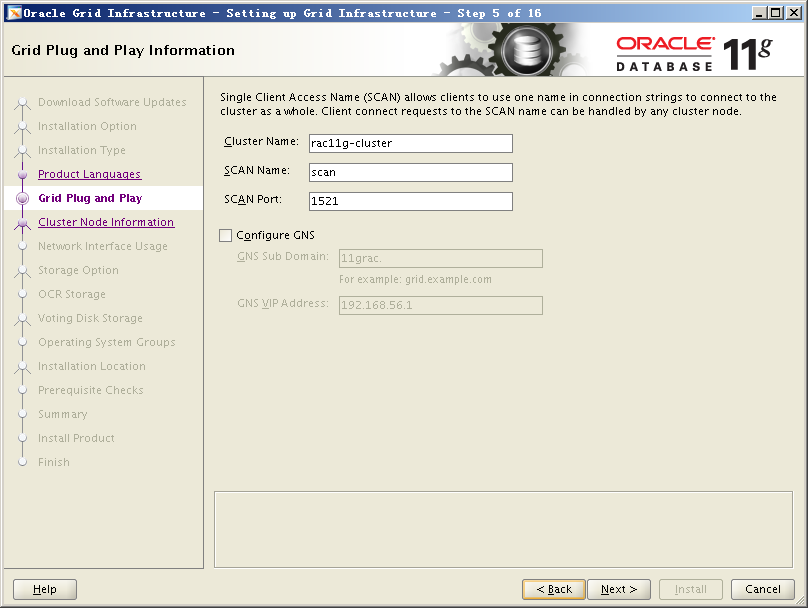

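RAC releases up to and including 11gR1 have no concept of a SCAN IP; from 11gR2 onward the SCAN is always used. Like a VIP, the SCAN name can be resolved through the hosts file, but Oracle's preferred recommendation is DNS resolution, and the SCAN name can resolve to at most three IP addresses. Here we use node 1 as the DNS server. For the steps to configure DNS resolution of the SCAN IPs, see "Configuring DNS Resolution of SCAN IP for Oracle 11g R2 RAC" (http://www.lynnlee.cn/?p=960). Our SCAN IPs are set as follows:

# SCAN IPs (resolved via DNS)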

192.168.56.101 scan scan.oracle.com

192.168.56.102 scan scan.oracle.com

192.168.56.103 scan scan.oracle.com
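Since the SCAN here is meant to come from DNS, it can be worth confirming on each node that the name is not also defined in /etc/hosts, where (depending on the /etc/nsswitch.conf order) a hosts entry may shadow the DNS answer. The following is a minimal sketch; `scan_in_hosts` is a hypothetical helper, not an Oracle tool, and it assumes a standard hosts-file layout (address followed by host names).

```shell
# Hypothetical helper: succeeds (returns 0) if NAME appears as a host name
# in a hosts-format file (default /etc/hosts). Comment text is ignored.
scan_in_hosts() {
    name=$1
    file=${2:-/etc/hosts}
    # Drop everything after '#', then compare columns 2..NF with NAME.
    sed 's/#.*//' "$file" | awk -v n="$name" '
        { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }'
}

# Usage on each node:
# if scan_in_hosts scan.oracle.com; then echo "WARNING: scan.oracle.com is also in /etc/hosts"; fi
```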

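2. Add the grid user and update the oracle user's groups

In 11gR2, RAC uses the grid user to install and manage the cluster, so we add a grid user here and also adjust the oracle user's group membership: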

[root@11grac1.localdomain:/root]$ id oracle

uid=54321(oracle) gid=54321(oinstall) groups=54321(oinstall),54322(dba)

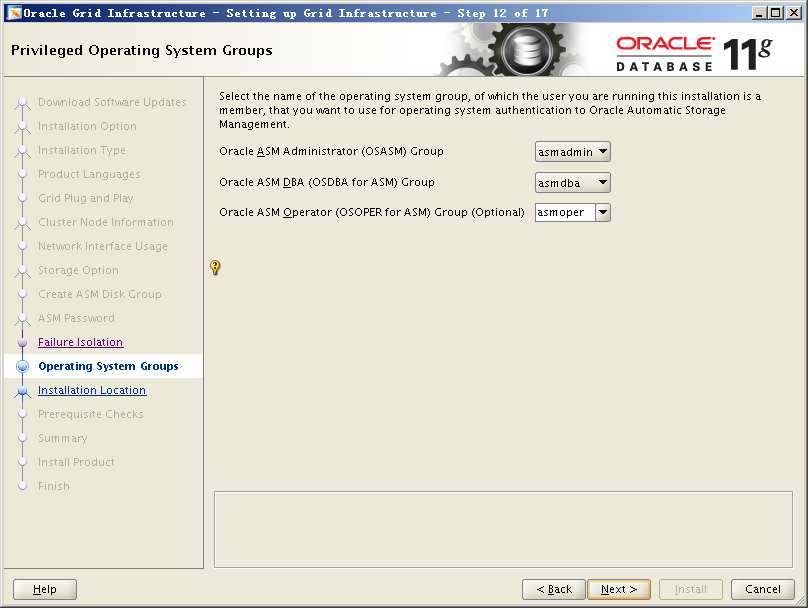

[root@11grac1.localdomain:/root]$ groupadd -g 5000 asmadmin

[root@11grac1.localdomain:/root]$ groupadd -g 5001 asmdba

[root@11grac1.localdomain:/root]$ groupadd -g 5002 asmoper

[root@11grac1.localdomain:/root]$ groupadd -g 6002 oper

[root@11grac2.localdomain:/root]$ id oracle

uid=54321(oracle) gid=54321(oinstall) groups=54321(oinstall),54322(dba)

[root@11grac2.localdomain:/root]$ groupadd -g 5000 asmadmin

[root@11grac2.localdomain:/root]$ groupadd -g 5001 asmdba

[root@11grac2.localdomain:/root]$ groupadd -g 5002 asmoper

[root@11grac2.localdomain:/root]$ groupadd -g 6002 oper

[root@11grac1.localdomain:/root]$ usermod -a -G asmadmin,asmdba,asmoper,oper oracle

[root@11grac1.localdomain:/root]$ id oracle

uid=54321(oracle) gid=54321(oinstall) groups=54321(oinstall),54322(dba),5000(asmadmin),5001(asmdba),5002(asmoper),6002(oper)

[root@11grac2.localdomain:/root]$ usermod -a -G asmadmin,asmdba,asmoper,oper oracle

[root@11grac2.localdomain:/root]$ id oracle

uid=54321(oracle) gid=54321(oinstall) groups=54321(oinstall),54322(dba),5000(asmadmin),5001(asmdba),5002(asmoper),6002(oper)

[root@11grac1.localdomain:/root]$ useradd -g oinstall -G dba,asmadmin,asmdba,asmoper grid

[root@11grac1.localdomain:/root]$ id grid

uid=54322(grid) gid=54321(oinstall) groups=54321(oinstall),54322(dba),5000(asmadmin),5001(asmdba),5002(asmoper)

[root@11grac1.localdomain:/root]$ passwd grid

Changing password for user grid.

New UNIX password:

BAD PASSWORD: it is based on a dictionary word

Retype new UNIX password:

passwd: all authentication tokens updated successfully.

[root@11grac2.localdomain:/root]$ useradd -g oinstall -G dba,asmadmin,asmdba,asmoper grid

[root@11grac2.localdomain:/root]$ id grid

uid=54322(grid) gid=54321(oinstall) groups=54321(oinstall),54322(dba),5000(asmadmin),5001(asmdba),5002(asmoper)

[root@11grac2.localdomain:/root]$ passwd grid

Changing password for user grid.

New UNIX password:

BAD PASSWORD: it is based on a dictionary word

Retype new UNIX password:

passwd: all authentication tokens updated successfully.
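Because both nodes must end up with the same users and groups, a quick membership check after the commands above can catch a missed `usermod` or `useradd` on one node. This is a minimal sketch; `has_groups` is a hypothetical helper built only on `id -nG`, and the group lists in the usage lines are simply the ones used in this step. Comparing the full `id grid` / `id oracle` output between the two nodes is a complementary check for matching numeric IDs.

```shell
# Hypothetical helper: succeeds only if USER belongs to every listed group.
# `id -nG USER` prints the user's group names separated by spaces.
has_groups() {
    user=$1; shift
    list=$(id -nG "$user") || return 1
    list=" $list "
    for g in "$@"; do
        case $list in
            *" $g "*) ;;                                   # group present
            *) echo "$user is not in group $g" >&2; return 1 ;;
        esac
    done
}

# Usage on both nodes:
# has_groups grid oinstall dba asmadmin asmdba asmoper
# has_groups oracle oinstall dba asmadmin asmdba asmoper oper
```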

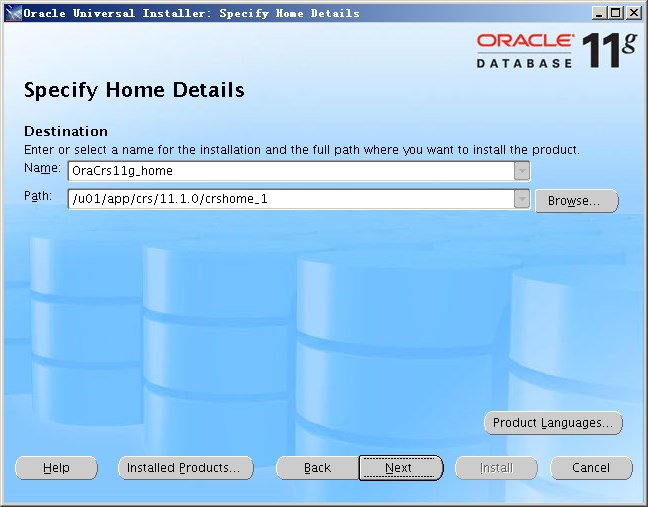

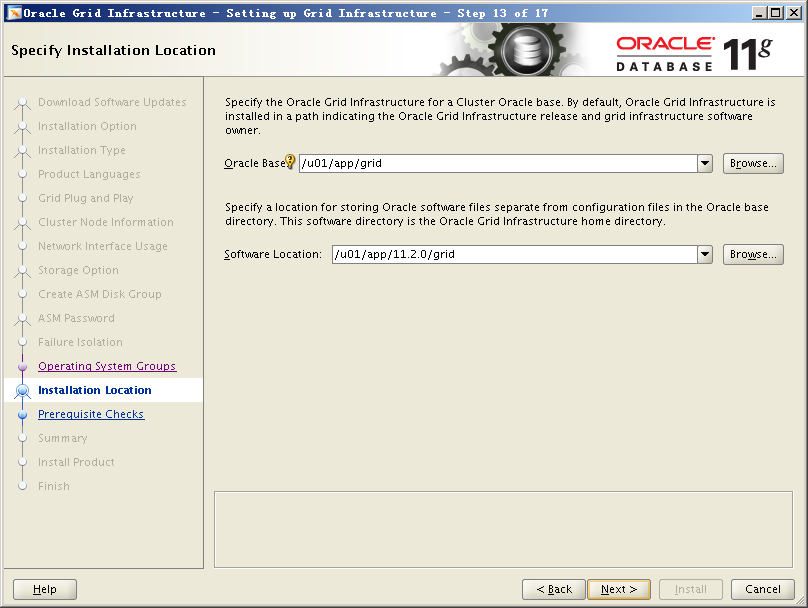

3. Create the GI_BASE/GI_HOME directories for the grid software and set the environment variables

[root@11grac1.localdomain:/root]$ mkdir -p /u01/app/grid

[root@11grac1.localdomain:/root]$ mkdir -p /u01/app/11.2.0/grid

[root@11grac2.localdomain:/root]$ mkdir -p /u01/app/grid

[root@11grac2.localdomain:/root]$ mkdir -p /u01/app/11.2.0/grid

[root@11grac1.localdomain:/root]$ cd /u01/app/

[root@11grac1.localdomain:/u01/app]$ chown -R grid:oinstall 11.2.0

[root@11grac1.localdomain:/u01/app]$ chown grid:oinstall grid

[root@11grac2.localdomain:/root]$ cd /u01/app/

[root@11grac2.localdomain:/u01/app]$ chown -R grid:oinstall 11.2.0

[root@11grac2.localdomain:/u01/app]$ chown grid:oinstall grid

[grid@11grac1 ~]$ cat .bash_profile

# .bash_profile

# Get the aliases and functions

if [ -f ~/.bashrc ]; then

. ~/.bashrc

fi

# User specific environment and startup programs

PATH=$PATH:$HOME/bin

export PATH

export ORACLE_SID=+ASM1

export ORACLE_BASE=/u01/app/grid

export GRID_BASE=$ORACLE_BASE

export GI_BASE=$GRID_BASE

export ORACLE_HOME=/u01/app/11.2.0/grid

export GRID_HOME=$ORACLE_HOME

export GI_HOME=$GRID_HOME

export PATH=$ORACLE_HOME/bin:$PATH

[grid@11grac2 ~]$ cat .bash_profile

# .bash_profile

# Get the aliases and functions

if [ -f ~/.bashrc ]; then

. ~/.bashrc

fi

# User specific environment and startup programs

PATH=$PATH:$HOME/bin

export PATH

export ORACLE_SID=+ASM2

export ORACLE_BASE=/u01/app/grid

export GRID_BASE=$ORACLE_BASE

export GI_BASE=$GRID_BASE

export ORACLE_HOME=/u01/app/11.2.0/grid

export GRID_HOME=$ORACLE_HOME

export GI_HOME=$GRID_HOME

export PATH=$ORACLE_HOME/bin:$PATH
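The two profiles above differ only in ORACLE_SID (+ASM1 versus +ASM2). As a sketch, the Oracle-specific lines can be generated from the node number so the nodes cannot drift apart; `grid_profile` is a hypothetical function, and the paths are the ones used in this document.

```shell
# Hypothetical generator: prints the grid user's Oracle environment lines
# for node number N. Only ORACLE_SID depends on the node.
grid_profile() {
    n=$1
    cat <<EOF
export ORACLE_SID=+ASM$n
export ORACLE_BASE=/u01/app/grid
export GRID_BASE=\$ORACLE_BASE
export GI_BASE=\$GRID_BASE
export ORACLE_HOME=/u01/app/11.2.0/grid
export GRID_HOME=\$ORACLE_HOME
export GI_HOME=\$GRID_HOME
export PATH=\$ORACLE_HOME/bin:\$PATH
EOF
}

# Usage (as grid on node 2): grid_profile 2 >> ~/.bash_profile
```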

4. Create the HOME directory for the 11gR2 Oracle database software (ORACLE_BASE reuses the existing base directory)

[oracle@11grac1.localdomain:/home/oracle]$ cd /u01/app/oracle/product/

[oracle@11grac1.localdomain:/u01/app/oracle/product]$ ls -l

total 4

drwxr-xr-x 3 oracle oinstall 4096 May 14 22:54 11.1.0

[oracle@11grac1.localdomain:/u01/app/oracle/product]$ mkdir -p 11.2.0/dbhome_1

[oracle@11grac1.localdomain:/u01/app/oracle/product]$ ls -l

total 8

drwxr-xr-x 3 oracle oinstall 4096 May 14 22:54 11.1.0

drwxr-xr-x 3 oracle oinstall 4096 May 18 07:28 11.2.0

[oracle@11grac2.localdomain:/home/oracle]$ cd /u01/app/oracle/product/

[oracle@11grac2.localdomain:/u01/app/oracle/product]$ ls -l

total 4

drwxr-xr-x 3 oracle oinstall 4096 May 14 22:54 11.1.0

[oracle@11grac2.localdomain:/u01/app/oracle/product]$ mkdir -p 11.2.0/dbhome_1

[oracle@11grac2.localdomain:/u01/app/oracle/product]$ ls -l

total 8

drwxr-xr-x 3 oracle oinstall 4096 May 14 23:06 11.1.0

drwxr-xr-x 3 oracle oinstall 4096 May 18 07:28 11.2.0

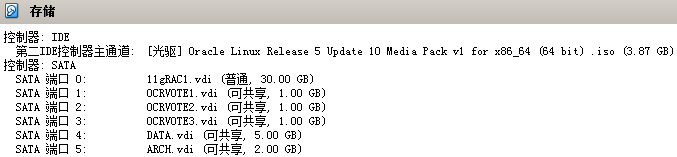

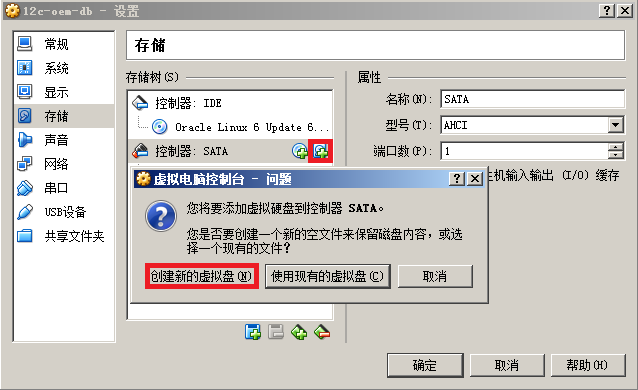

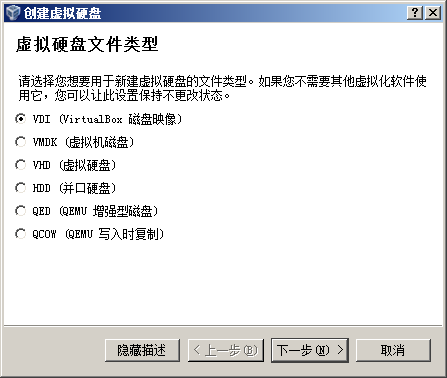

5. Create the shared ASM disk for 11gR2 Grid Infrastructure (using udev, not ASMLib)

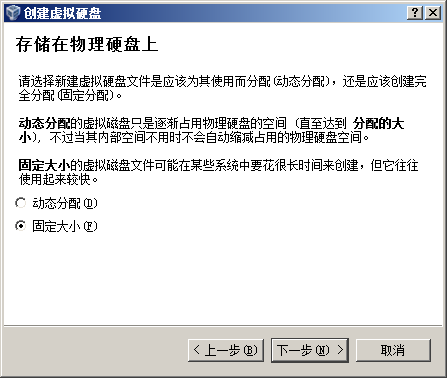

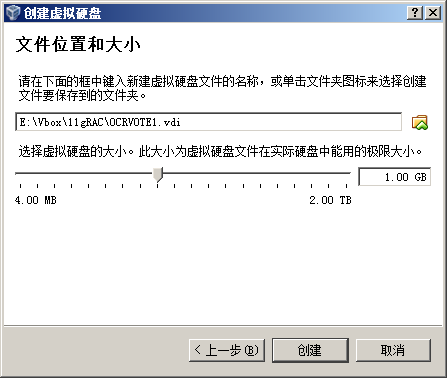

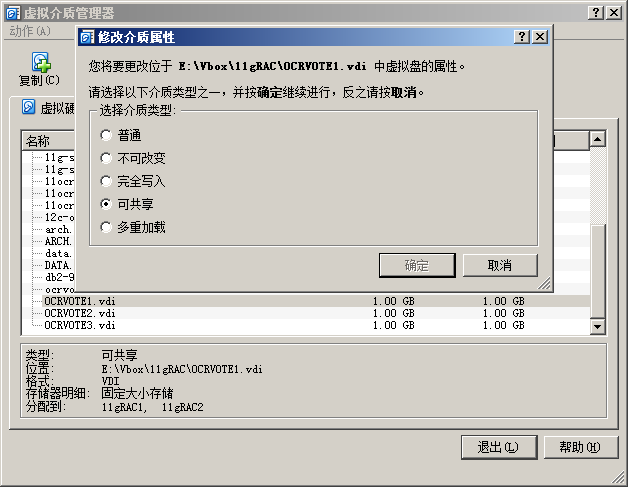

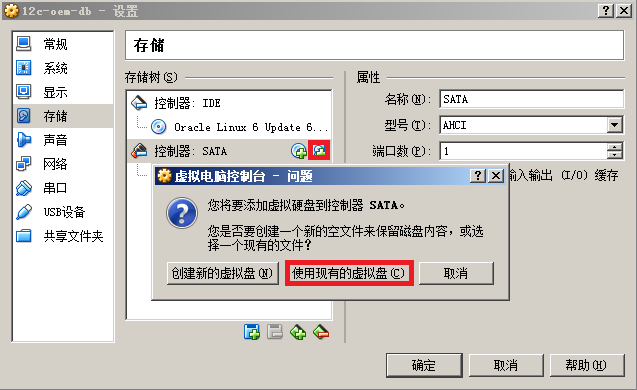

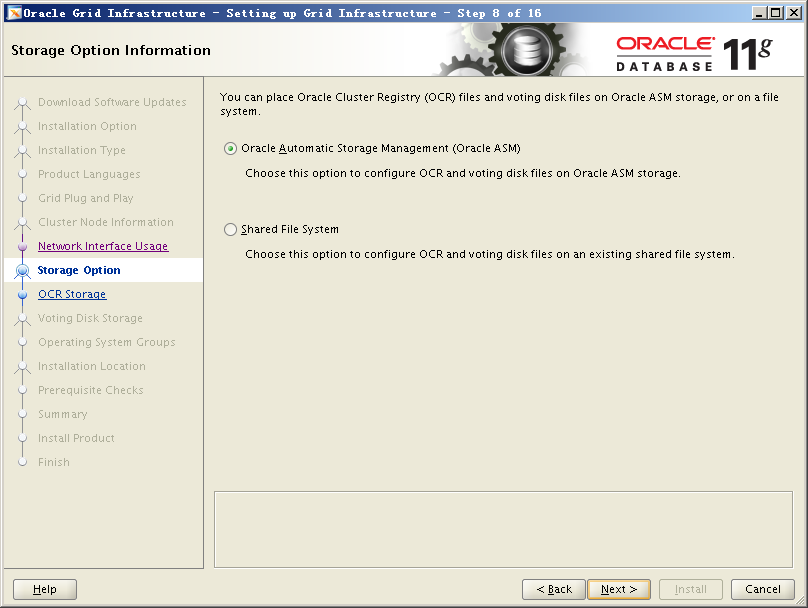

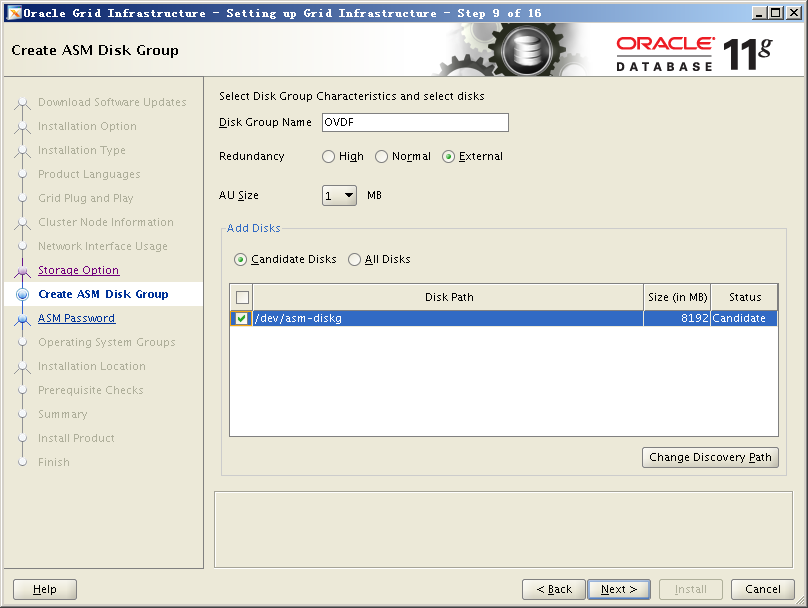

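For this test we add only one disk, /dev/sdg, and use it to create an ASM disk group that holds the OCR and voting disk of the 11gR2 grid cluster. (Adding a shared disk to both nodes in VirtualBox is not covered here.)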

[root@11grac1.localdomain:/root]$ fdisk -l

Disk /dev/sdg: 8589 MB, 8589934592 bytes

255 heads, 63 sectors/track, 1044 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Disk /dev/sdg doesn't contain a valid partition table

[root@11grac2.localdomain:/root]$ fdisk -l

Disk /dev/sdg: 8589 MB, 8589934592 bytes

255 heads, 63 sectors/track, 1044 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Disk /dev/sdg doesn't contain a valid partition table

Use the following command to generate the udev rule for the newly added disk /dev/sdg:

for i in g;

do

echo "KERNEL==\"sd*\", BUS==\"scsi\", PROGRAM==\"/sbin/scsi_id -g -u -s %p\", RESULT==\"`scsi_id -g -u -s /block/sd$i`\", NAME=\"asm-disk$i\", OWNER=\"grid\", GROUP=\"asmadmin\", MODE=\"0660\""

done
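The loop above calls `scsi_id` and formats the rule in one step. As a sketch, the formatting can be split into a small function that takes the SCSI ID as an argument, which makes the generated rule easy to inspect before appending it to the rules file. `udev_asm_rule` is a hypothetical name; the rule format is copied from the output shown in this step, and the `/sbin/scsi_id -g -u -s /block/sdX` call in the usage line is the OEL 5 syntax used above.

```shell
# Hypothetical helper: prints one udev rule for disk letter L with SCSI id ID.
# OWNER and GROUP default to grid:asmadmin; "%%p" in printf emits a literal "%p".
udev_asm_rule() {
    letter=$1 sid=$2 owner=${3:-grid} group=${4:-asmadmin}
    printf 'KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id -g -u -s %%p", RESULT=="%s", NAME="asm-disk%s", OWNER="%s", GROUP="%s", MODE="0660"\n' \
        "$sid" "$letter" "$owner" "$group"
}

# Usage on each node (as root):
# udev_asm_rule g "$(/sbin/scsi_id -g -u -s /block/sdg)" >> /etc/udev/rules.d/99-oracle-asmdevices.rules
```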

[root@11grac1.localdomain:/root]$ for i in g;

> do

> echo "KERNEL==\"sd*\", BUS==\"scsi\", PROGRAM==\"/sbin/scsi_id -g -u -s %p\", RESULT==\"`scsi_id -g -u -s /block/sd$i`\", NAME=\"asm-disk$i\", OWNER=\"grid\", GROUP=\"asmadmin\", MODE=\"0660\""

> done

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id -g -u -s %p", RESULT=="SATA_VBOX_HARDDISK_VBa7feea0a-164d1478_", NAME="asm-diskg", OWNER="grid", GROUP="asmadmin", MODE="0660"

[root@11grac1.localdomain:/root]$

[root@11grac1.localdomain:/root]$ vi /etc/udev/rules.d/99-oracle-asmdevices.rules

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id -g -u -s %p", RESULT=="SATA_VBOX_HARDDISK_VB424a5eb7-c9274de0_", NAME="asm-diskb", OWNER="oracle", GROUP="oinstall", MODE="0660"

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id -g -u -s %p", RESULT=="SATA_VBOX_HARDDISK_VB95c63929-9336a092_", NAME="asm-diskc", OWNER="oracle", GROUP="oinstall", MODE="0660"

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id -g -u -s %p", RESULT=="SATA_VBOX_HARDDISK_VBa044f79d-51b67554_", NAME="asm-diskd", OWNER="oracle", GROUP="oinstall", MODE="0660"

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id -g -u -s %p", RESULT=="SATA_VBOX_HARDDISK_VB86ee407e-415b5b32_", NAME="asm-diske", OWNER="oracle", GROUP="oinstall", MODE="0660"

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id -g -u -s %p", RESULT=="SATA_VBOX_HARDDISK_VBe59f5561-e0df75b7_", NAME="asm-diskf", OWNER="oracle", GROUP="oinstall", MODE="0660"

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id -g -u -s %p", RESULT=="SATA_VBOX_HARDDISK_VBa7feea0a-164d1478_", NAME="asm-diskg", OWNER="grid", GROUP="asmadmin", MODE="0660"

[root@11grac2.localdomain:/root]$ for i in g;

> do

> echo "KERNEL==\"sd*\", BUS==\"scsi\", PROGRAM==\"/sbin/scsi_id -g -u -s %p\", RESULT==\"`scsi_id -g -u -s /block/sd$i`\", NAME=\"asm-disk$i\", OWNER=\"grid\", GROUP=\"asmadmin\", MODE=\"0660\""

> done

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id -g -u -s %p", RESULT=="SATA_VBOX_HARDDISK_VBa7feea0a-164d1478_", NAME="asm-diskg", OWNER="grid", GROUP="asmadmin", MODE="0660"

[root@11grac2.localdomain:/root]$ vi /etc/udev/rules.d/99-oracle-asmdevices.rules

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id -g -u -s %p", RESULT=="SATA_VBOX_HARDDISK_VB424a5eb7-c9274de0_", NAME="asm-diskb", OWNER="oracle", GROUP="oinstall", MODE="0660"

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id -g -u -s %p", RESULT=="SATA_VBOX_HARDDISK_VB95c63929-9336a092_", NAME="asm-diskc", OWNER="oracle", GROUP="oinstall", MODE="0660"

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id -g -u -s %p", RESULT=="SATA_VBOX_HARDDISK_VBa044f79d-51b67554_", NAME="asm-diskd", OWNER="oracle", GROUP="oinstall", MODE="0660"

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id -g -u -s %p", RESULT=="SATA_VBOX_HARDDISK_VB86ee407e-415b5b32_", NAME="asm-diske", OWNER="oracle", GROUP="oinstall", MODE="0660"

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id -g -u -s %p", RESULT=="SATA_VBOX_HARDDISK_VBe59f5561-e0df75b7_", NAME="asm-diskf", OWNER="oracle", GROUP="oinstall", MODE="0660"

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id -g -u -s %p", RESULT=="SATA_VBOX_HARDDISK_VBa7feea0a-164d1478_", NAME="asm-diskg", OWNER="grid", GROUP="asmadmin", MODE="0660"

[root@11grac1.localdomain:/root]$ start_udev

Starting udev: [ OK ]

[root@11grac2.localdomain:/root]$ start_udev

Starting udev: [ OK ]

[root@11grac1.localdomain:/root]$ ls -l /dev/asm-disk*

brw-rw---- 1 oracle oinstall 8, 16 May 18 2015 /dev/asm-diskb

brw-rw---- 1 oracle oinstall 8, 32 May 18 2015 /dev/asm-diskc

brw-rw---- 1 oracle oinstall 8, 48 May 18 08:16 /dev/asm-diskd

brw-rw---- 1 oracle oinstall 8, 64 May 18 08:15 /dev/asm-diske

brw-rw---- 1 oracle oinstall 8, 80 May 18 08:15 /dev/asm-diskf

brw-rw---- 1 grid asmadmin 8, 96 May 18 08:17 /dev/asm-diskg

[root@11grac2.localdomain:/root]$ ls -l /dev/asm-disk*

brw-rw---- 1 oracle oinstall 8, 16 May 18 08:16 /dev/asm-diskb

brw-rw---- 1 oracle oinstall 8, 32 May 18 08:16 /dev/asm-diskc

brw-rw---- 1 oracle oinstall 8, 48 May 18 08:17 /dev/asm-diskd

brw-rw---- 1 oracle oinstall 8, 64 May 18 08:15 /dev/asm-diske

brw-rw---- 1 oracle oinstall 8, 80 May 18 08:15 /dev/asm-diskf

brw-rw---- 1 grid asmadmin 8, 96 May 18 08:18 /dev/asm-diskg

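In 11gR1 RAC all ASM disks are managed by the oracle user, which is why the udev rules file shows the existing asm-diskb through asm-diskf owned by oracle:oinstall. The newly added disk, however, will hold the OCR and voting disk of the 11gR2 grid cluster, so its owner and group are grid:asmadmin.

6. Stop the 11gR1 RAC database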
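Rather than reading the `ls -l` listing by eye on each node, the expected owner, group and mode can be checked directly. This is a minimal sketch assuming GNU coreutils `stat` (its `-c` format with `%U`, `%G` and `%a`); `check_dev_perm` is a hypothetical helper.

```shell
# Hypothetical check: succeeds if DEV (symlinks followed) is owned by
# OWNER:GROUP and has octal permissions MODE, e.g. grid:asmadmin 660.
check_dev_perm() {
    dev=$1 want_owner=$2 want_mode=$3
    actual=$(stat -L -c '%U:%G %a' "$dev") || return 1
    if [ "$actual" != "$want_owner $want_mode" ]; then
        echo "$dev: expected $want_owner $want_mode, got $actual" >&2
        return 1
    fi
}

# Usage on both nodes:
# check_dev_perm /dev/asm-diskg grid:asmadmin 660
```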

[root@11grac1.localdomain:/root]$ crs_stat -t

Name Type Target State Host

------------------------------------------------------------

ora....SM1.asm application ONLINE ONLINE 11grac1

ora....C1.lsnr application ONLINE ONLINE 11grac1

ora....ac1.gsd application ONLINE ONLINE 11grac1

ora....ac1.ons application ONLINE ONLINE 11grac1

ora....ac1.vip application ONLINE ONLINE 11grac1

ora....SM2.asm application ONLINE ONLINE 11grac2

ora....C2.lsnr application ONLINE ONLINE 11grac2

ora....ac2.gsd application ONLINE ONLINE 11grac2

ora....ac2.ons application ONLINE ONLINE 11grac2

ora....ac2.vip application ONLINE ONLINE 11grac2

ora.rac11g.db application ONLINE ONLINE 11grac2

ora....g1.inst application ONLINE ONLINE 11grac1

ora....g2.inst application ONLINE ONLINE 11grac2

[oracle@11grac1.localdomain:/home/oracle]$ srvctl stop database -d rac11g

[oracle@11grac1.localdomain:/home/oracle]$ srvctl stop asm -n 11grac1

[oracle@11grac1.localdomain:/home/oracle]$ srvctl stop asm -n 11grac2

[oracle@11grac1.localdomain:/home/oracle]$ srvctl stop nodeapps -n 11grac1

[oracle@11grac1.localdomain:/home/oracle]$ srvctl stop nodeapps -n 11grac2

[oracle@11grac1.localdomain:/home/oracle]$ crs_stat -t

Name Type Target State Host

------------------------------------------------------------

ora....SM1.asm application OFFLINE OFFLINE

ora....C1.lsnr application OFFLINE OFFLINE

ora....ac1.gsd application OFFLINE OFFLINE

ora....ac1.ons application OFFLINE OFFLINE

ora....ac1.vip application OFFLINE OFFLINE

ora....SM2.asm application OFFLINE OFFLINE

ora....C2.lsnr application OFFLINE OFFLINE

ora....ac2.gsd application OFFLINE OFFLINE

ora....ac2.ons application OFFLINE OFFLINE

ora....ac2.vip application OFFLINE OFFLINE

ora.rac11g.db application OFFLINE OFFLINE

ora....g1.inst application OFFLINE OFFLINE

ora....g2.inst application OFFLINE OFFLINE
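Before running `crsctl stop crs`, the `crs_stat -t` listing can be checked mechanically so that no resource is still up. As a sketch, the parser below assumes the five-column `Name Type Target State Host` layout shown above and matches resource rows by their `ora.` prefix; `all_offline` is a hypothetical name.

```shell
# Hypothetical parser: reads `crs_stat -t` output on stdin and succeeds only
# if every resource row ("ora." prefix) has State (column 4) OFFLINE.
all_offline() {
    awk '$1 ~ /^ora\./ {
             if ($4 != "OFFLINE") { print "still up: " $1 " (" $4 ")"; bad = 1 }
         }
         END { exit bad }'
}

# Usage: crs_stat -t | all_offline && echo "all resources are OFFLINE"
```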

[root@11grac1.localdomain:/root]$ crsctl stop crs

Stopping resources.

This could take several minutes.

Successfully stopped Oracle Clusterware resources

Stopping Cluster Synchronization Services.

Shutting down the Cluster Synchronization Services daemon.

Shutdown request successfully issued.

[root@11grac2.localdomain:/root]$ crsctl stop crs

Stopping resources.

This could take several minutes.

Successfully stopped Oracle Clusterware resources

Stopping Cluster Synchronization Services.

Shutting down the Cluster Synchronization Services daemon.

Shutdown request successfully issued.

7. Back up the 11gR1 RAC software

[root@11grac1.localdomain:/root]$ mkdir -p /tmp/bk

[root@11grac1.localdomain:/root]$ cd /tmp/bk

1) Back up the OCR and voting disk

[root@11grac1.localdomain:/tmp/bk]$ ocrconfig -export ocrexp.bak

[root@11grac1.localdomain:/tmp/bk]$ ll

total 92

-rw-r--r-- 1 root root 89399 May 18 08:42 ocrexp.bak

[root@11grac1.localdomain:/tmp/bk]$ crsctl query css votedisk

0. 0 /dev/asm-diskd

Located 1 voting disk(s).

[root@11grac1.localdomain:/tmp/bk]$ dd if=/dev/asm-diskd of=/tmp/bk/votedisk.bak

2097152+0 records in

2097152+0 records out

1073741824 bytes (1.1 GB) copied, 55.8614 seconds, 19.2 MB/s
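The `dd` above uses the default 512-byte block size. A larger block size is usually faster, and comparing the copy with the source confirms the backup is usable. This is a sketch; `backup_and_verify` is a hypothetical helper, and the 1 MiB block size is an assumption rather than a tuned value.

```shell
# Hypothetical helper: copy SRC to DST with 1 MiB blocks, then confirm the
# copy is byte-identical. Returns non-zero if either step fails.
backup_and_verify() {
    src=$1 dst=$2
    dd if="$src" of="$dst" bs=1048576 2>/dev/null || return 1
    cmp -s "$src" "$dst"
}

# Usage: backup_and_verify /dev/asm-diskd /tmp/bk/votedisk.bak && echo "voting disk backup OK"
```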

2) Back up the RAC init scripts

-- On both nodes, back up the /etc/inittab configuration file and the following init scripts:

/etc/init.d/init.crs

/etc/init.d/init.crsd

/etc/init.d/init.cssd

/etc/init.d/init.evmd

[root@11grac1.localdomain:/tmp/bk]$ cp /etc/inittab inittab.bak

[root@11grac1.localdomain:/tmp/bk]$ cp /etc/init.d/init.crs init.crs.bak

[root@11grac1.localdomain:/tmp/bk]$ cp /etc/init.d/init.crsd init.crsd.bak

[root@11grac1.localdomain:/tmp/bk]$ cp /etc/init.d/init.cssd init.cssd.bak

[root@11grac1.localdomain:/tmp/bk]$ cp /etc/init.d/init.evmd init.evmd.bak

[root@11grac1.localdomain:/tmp/bk]$ ls -l

total 1049776

-rwxr-xr-x 1 root root 2236 May 18 08:50 init.crs.bak

-rwxr-xr-x 1 root root 5579 May 18 08:50 init.crsd.bak

-rwxr-xr-x 1 root root 56322 May 18 08:50 init.cssd.bak

-rwxr-xr-x 1 root root 3854 May 18 08:50 init.evmd.bak

-rw-r--r-- 1 root root 1870 May 18 08:49 inittab.bak

-rw-r--r-- 1 root root 89399 May 18 08:42 ocrexp.bak

-rw-r--r-- 1 root root 1073741824 May 18 08:47 votedisk.bak

[root@11grac2.localdomain:/root]$ mkdir -p /tmp/bk

[root@11grac2.localdomain:/root]$ cd /tmp/bk

[root@11grac2.localdomain:/tmp/bk]$ cp /etc/inittab inittab.bak

[root@11grac2.localdomain:/tmp/bk]$ cp /etc/init.d/init.crs init.crs.bak

[root@11grac2.localdomain:/tmp/bk]$ cp /etc/init.d/init.crsd init.crsd.bak

[root@11grac2.localdomain:/tmp/bk]$ cp /etc/init.d/init.cssd init.cssd.bak

[root@11grac2.localdomain:/tmp/bk]$ cp /etc/init.d/init.evmd init.evmd.bak

[root@11grac2.localdomain:/tmp/bk]$ ls -l

total 80

-rwxr-xr-x 1 root root 2236 May 18 08:52 init.crs.bak

-rwxr-xr-x 1 root root 5579 May 18 08:52 init.crsd.bak

-rwxr-xr-x 1 root root 56322 May 18 08:52 init.cssd.bak

-rwxr-xr-x 1 root root 3854 May 18 08:52 init.evmd.bak

-rw-r--r-- 1 root root 1870 May 18 08:52 inittab.bak

3) Back up the cluster and database software
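The five `cp` commands per node can be expressed as one loop that writes each file into the backup directory as `NAME.bak`. As a sketch, `backup_files` is a hypothetical helper; it uses `cp -p`, which (unlike the plain `cp` above) also preserves mode and timestamps.

```shell
# Hypothetical helper: copy each FILE to DEST/basename(FILE).bak.
backup_files() {
    dest=$1; shift
    mkdir -p "$dest" || return 1
    for f in "$@"; do
        cp -p "$f" "$dest/$(basename "$f").bak" || return 1
    done
}

# Usage on each node (as root):
# backup_files /tmp/bk /etc/inittab /etc/init.d/init.crs /etc/init.d/init.crsd /etc/init.d/init.cssd /etc/init.d/init.evmd
```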

tar -czf /tmp/bk/dbs.tar.gz /u01/app/oracle/*

tar -czf /tmp/bk/crs.tar.gz /u01/app/crs/*
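A tar backup is only useful if it can be read back, so listing the archive right after creating it is a cheap integrity check. This sketch uses a hypothetical `archive_and_check` helper; passing the directory itself (instead of `dir/*`) also picks up dot-files that the shell glob would skip.

```shell
# Hypothetical helper: create a gzip-compressed tar of DIR, then list it back
# with `tar -tzf` to confirm the archive is readable.
archive_and_check() {
    archive=$1 dir=$2
    tar -czf "$archive" "$dir" || return 1
    tar -tzf "$archive" >/dev/null
}

# Usage:
# archive_and_check /tmp/bk/dbs.tar.gz /u01/app/oracle
# archive_and_check /tmp/bk/crs.tar.gz /u01/app/crs
```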

4) Back up the database

Backing up the database with RMAN is not covered here.

5) Move /etc/oracle aside

[root@11grac1.localdomain:/root]$ ls -l /etc/oracle

total 12

-rw-r--r-- 1 root oinstall 81 May 14 22:29 ocr.loc

drwxrwxr-x 5 root root 4096 May 18 07:06 oprocd

drwxr-xr-x 3 root root 4096 May 14 22:29 scls_scr

[root@11grac1.localdomain:/root]$ mv /etc/oracle/ /tmp/bk/etc_oracle

[root@11grac2.localdomain:/root]$ ls -l /etc/oracle

total 12

-rw-r--r-- 1 root oinstall 81 May 14 22:35 ocr.loc

drwxrwxr-x 5 root root 4096 May 18 07:06 oprocd

drwxr-xr-x 3 root root 4096 May 14 22:35 scls_scr

[root@11grac2.localdomain:/root]$ mv /etc/oracle /tmp/bk/etc_oracle

6) Move /etc/init.d/init* aside

[root@11grac1.localdomain:/root]$ cd /tmp/bk

[root@11grac1.localdomain:/tmp/bk]$ ls -l

total 1049780

drwxr-xr-x 4 root oinstall 4096 May 14 22:29 etc_oracle

-rwxr-xr-x 1 root root 2236 May 18 08:50 init.crs.bak

-rwxr-xr-x 1 root root 5579 May 18 08:50 init.crsd.bak

-rwxr-xr-x 1 root root 56322 May 18 08:50 init.cssd.bak

-rwxr-xr-x 1 root root 3854 May 18 08:50 init.evmd.bak

-rw-r--r-- 1 root root 1870 May 18 08:49 inittab.bak

-rw-r--r-- 1 root root 89399 May 18 08:42 ocrexp.bak

-rw-r--r-- 1 root root 1073741824 May 18 08:47 votedisk.bak

[root@11grac1.localdomain:/tmp/bk]$ mkdir init_mv

[root@11grac1.localdomain:/tmp/bk]$ cd init_mv

[root@11grac1.localdomain:/tmp/bk/init_mv]$ mv /etc/init.d/init.* /tmp/bk/init_mv/

[root@11grac1.localdomain:/tmp/bk/init_mv]$ ls -l

total 76

-rwxr-xr-x 1 root root 2236 May 17 08:20 init.crs

-rwxr-xr-x 1 root root 5579 May 17 08:20 init.crsd

-rwxr-xr-x 1 root root 56322 May 17 08:20 init.cssd

-rwxr-xr-x 1 root root 3854 May 17 08:20 init.evmd

[root@11grac2.localdomain:/root]$ cd /tmp/bk/

[root@11grac2.localdomain:/tmp/bk]$ ls -l

total 84

drwxr-xr-x 4 root oinstall 4096 May 14 22:35 etc_oracle

-rwxr-xr-x 1 root root 2236 May 18 08:52 init.crs.bak

-rwxr-xr-x 1 root root 5579 May 18 08:52 init.crsd.bak

-rwxr-xr-x 1 root root 56322 May 18 08:52 init.cssd.bak

-rwxr-xr-x 1 root root 3854 May 18 08:52 init.evmd.bak

-rw-r--r-- 1 root root 1870 May 18 08:52 inittab.bak

[root@11grac2.localdomain:/tmp/bk]$ mkdir init_mv

[root@11grac2.localdomain:/tmp/bk]$ cd init_mv

[root@11grac2.localdomain:/tmp/bk/init_mv]$ mv /etc/init.d/init.* /tmp/bk/init_mv/

[root@11grac2.localdomain:/tmp/bk/init_mv]$ ls -l

total 76

-rwxr-xr-x 1 root root 2236 May 17 08:31 init.crs

-rwxr-xr-x 1 root root 5579 May 17 08:31 init.crsd

-rwxr-xr-x 1 root root 56322 May 17 08:31 init.cssd

-rwxr-xr-x 1 root root 3854 May 17 08:31 init.evmd

7) Edit /etc/inittab

-- Comment out the last three lines of the file

[root@11grac1.localdomain:/root]$ tail /etc/inittab

4:2345:respawn:/sbin/mingetty tty4

5:2345:respawn:/sbin/mingetty tty5

6:2345:respawn:/sbin/mingetty tty6

# Run xdm in runlevel 5

x:5:respawn:/etc/X11/prefdm -nodaemon

# h1:35:respawn:/etc/init.d/init.evmd run >/dev/null 2>&1 </dev/null

# h2:35:respawn:/etc/init.d/init.cssd fatal >/dev/null 2>&1 </dev/null

# h3:35:respawn:/etc/init.d/init.crsd run >/dev/null 2>&1 </dev/null

[root@11grac2.localdomain:/root]$ tail /etc/inittab

4:2345:respawn:/sbin/mingetty tty4

5:2345:respawn:/sbin/mingetty tty5

6:2345:respawn:/sbin/mingetty tty6

# Run xdm in runlevel 5

x:5:respawn:/etc/X11/prefdm -nodaemon

# h1:35:respawn:/etc/init.d/init.evmd run >/dev/null 2>&1 </dev/null

# h2:35:respawn:/etc/init.d/init.cssd fatal >/dev/null 2>&1 </dev/null

# h3:35:respawn:/etc/init.d/init.crsd run >/dev/null 2>&1 </dev/null
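The listing shows the result but not how the three Clusterware lines were commented out. As a sketch, a `sed` expression anchored on the `h1`/`h2`/`h3` respawn entries does it without touching the other lines; `comment_crs_inittab` is a hypothetical helper, and it assumes the entries look exactly like the ones above. Lines that are already commented no longer match, so running it twice is harmless. On a SysV-init system such as OEL 5, `init q` then makes init re-read the file.

```shell
# Hypothetical helper: prefix "# " to the 11gR1 Clusterware respawn lines
# (h1/h2/h3 -> /etc/init.d/init.*) in an inittab-format file.
# Writing back with cat keeps the original file's permissions and inode.
comment_crs_inittab() {
    file=${1:-/etc/inittab}
    sed -e '/^h[123]:35:respawn:\/etc\/init\.d\/init\./s/^/# /' "$file" > "$file.new" &&
        cat "$file.new" > "$file" &&
        rm -f "$file.new"
}

# Usage (as root on each node, after backing up /etc/inittab): comment_crs_inittab
```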

8) Remove /tmp/.oracle and /var/tmp/.oracle

[root@11grac1.localdomain:/root]$ rm -rf /tmp/.oracle

[root@11grac1.localdomain:/root]$ rm -rf /var/tmp/.oracle

[root@11grac2.localdomain:/root]$ rm -rf /tmp/.oracle

[root@11grac2.localdomain:/root]$ rm -rf /var/tmp/.oracle

8. Install the grid software

1) Before installing grid, configure SSH user equivalence for the grid user

[grid@11grac1 ~]$ ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/home/grid/.ssh/id_rsa):

Created directory '/home/grid/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /home/grid/.ssh/id_rsa.

Your public key has been saved in /home/grid/.ssh/id_rsa.pub.

The key fingerprint is:

10:75:22:03:21:35:c7:5b:ef:c6:3a:d9:5a:dd:6b:7b grid@11grac1.localdomain

[grid@11grac1 ~]$ ssh-keygen -t dsa

Generating public/private dsa key pair.

Enter file in which to save the key (/home/grid/.ssh/id_dsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /home/grid/.ssh/id_dsa.

Your public key has been saved in /home/grid/.ssh/id_dsa.pub.

The key fingerprint is:

ca:19:c2:55:b7:c9:2e:30:55:96:8c:be:da:35:06:a9 grid@11grac1.localdomain

[grid@11grac2 ~]$ ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/home/grid/.ssh/id_rsa):

Created directory '/home/grid/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /home/grid/.ssh/id_rsa.

Your public key has been saved in /home/grid/.ssh/id_rsa.pub.

The key fingerprint is:

8e:4b:06:9e:7f:ce:8a:6b:d0:b8:9f:15:23:63:3b:33 grid@11grac2.localdomain

[grid@11grac2 ~]$ ssh-keygen -t dsa

Generating public/private dsa key pair.

Enter file in which to save the key (/home/grid/.ssh/id_dsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /home/grid/.ssh/id_dsa.

Your public key has been saved in /home/grid/.ssh/id_dsa.pub.

The key fingerprint is:

a9:d2:dd:d5:91:37:30:23:81:fc:fa:c9:5f:0e:5a:96 grid@11grac2.localdomain

[grid@11grac1 ~]$ cat ~/.ssh/id_rsa.pub > ~/.ssh/authorized_keys

[grid@11grac1 ~]$ cat ~/.ssh/id_dsa.pub >> ~/.ssh/authorized_keys

[grid@11grac1 ~]$ ssh 11grac2 cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

The authenticity of host '11grac2 (192.168.56.222)' can't be established.

RSA key fingerprint is 25:8c:5f:0f:cd:8a:4b:35:84:75:c8:cd:58:75:35:6b.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '11grac2,192.168.56.222' (RSA) to the list of known hosts.

grid@11grac2's password:

[grid@11grac1 ~]$ ssh 11grac2 cat ~/.ssh/id_dsa.pub >> ~/.ssh/authorized_keys

grid@11grac2's password:

[grid@11grac1 ~]$ scp ~/.ssh/authorized_keys 11grac2:~/.ssh/authorized_keys

grid@11grac2's password:

authorized_keys 100% 2040 2.0KB/s 00:00

2) Test SSH user equivalence for the grid user

$ cat ssh.sh

ssh 11grac1 date

ssh 11grac2 date

ssh 11grac1-priv date

ssh 11grac2-priv date

[grid@11grac1 ~]$ sh ssh.sh

Mon May 18 08:33:34 CST 2015

Mon May 18 08:33:35 CST 2015

Mon May 18 08:33:34 CST 2015

Mon May 18 08:33:35 CST 2015

[grid@11grac2 ~]$ sh ssh.sh

Mon May 18 08:33:44 CST 2015

Mon May 18 08:33:45 CST 2015

Mon May 18 08:33:45 CST 2015

Mon May 18 08:33:46 CST 2015
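If equivalence is broken, `ssh.sh` stops at a password prompt instead of failing. A variant using OpenSSH's `BatchMode=yes` option makes a missing key show up as an error. This is a sketch; `check_ssh_equiv` is a hypothetical helper, and the `SSH` variable is an assumption added only so the command can be swapped out (for example when testing).

```shell
# Hypothetical check: run `date` on every host without allowing password
# prompts; report each host that fails and return non-zero if any did.
check_ssh_equiv() {
    rc=0
    for h in "$@"; do
        if ! ${SSH:-ssh} -o BatchMode=yes "$h" date >/dev/null; then
            echo "no passwordless ssh to $h" >&2
            rc=1
        fi
    done
    return $rc
}

# Usage (as grid on each node):
# check_ssh_equiv 11grac1 11grac2 11grac1-priv 11grac2-priv
```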

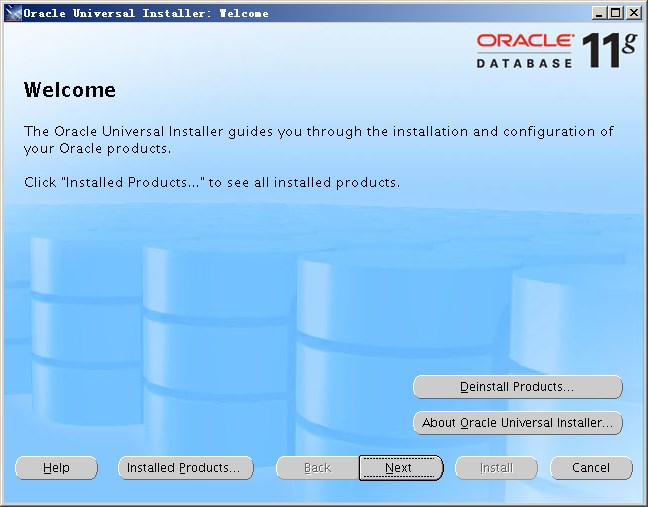

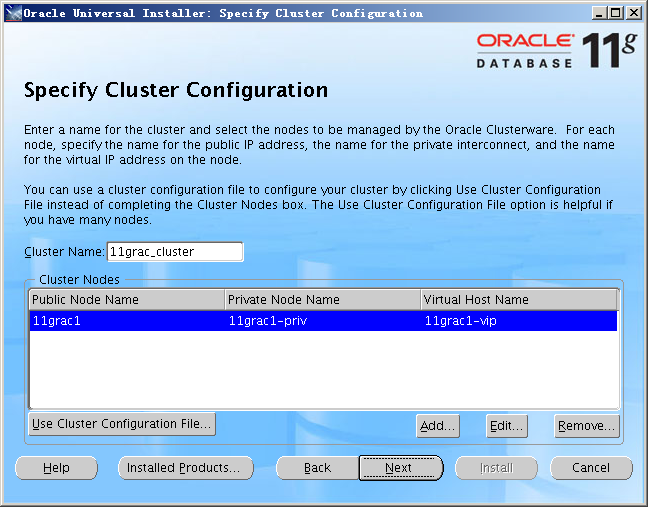

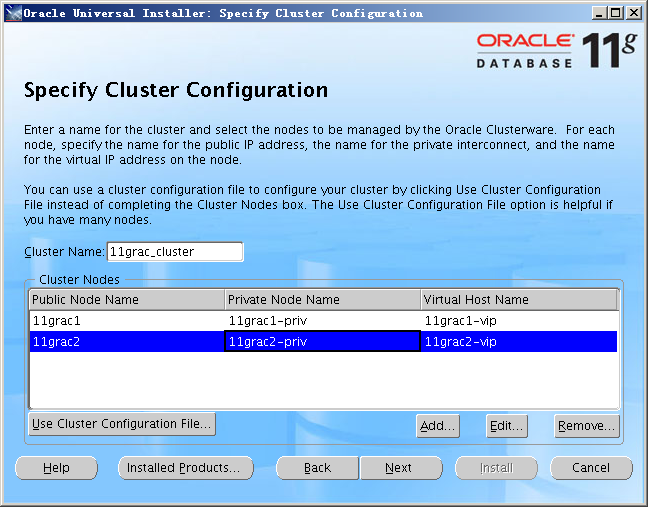

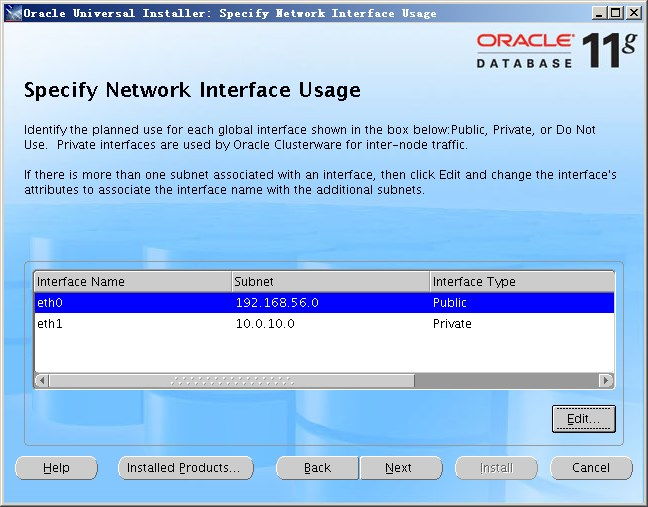

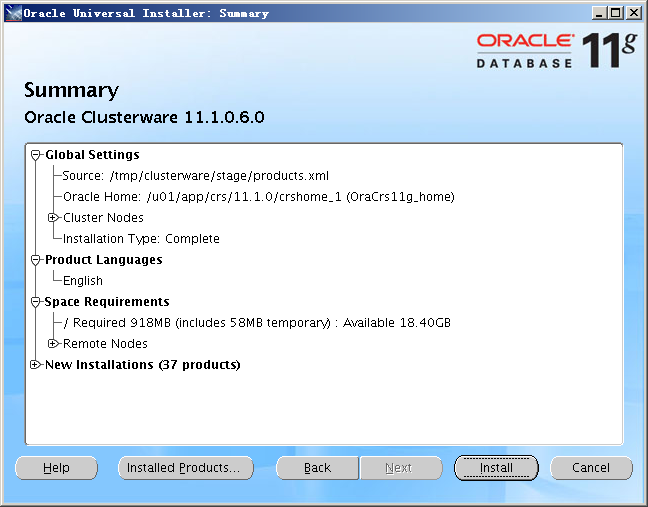

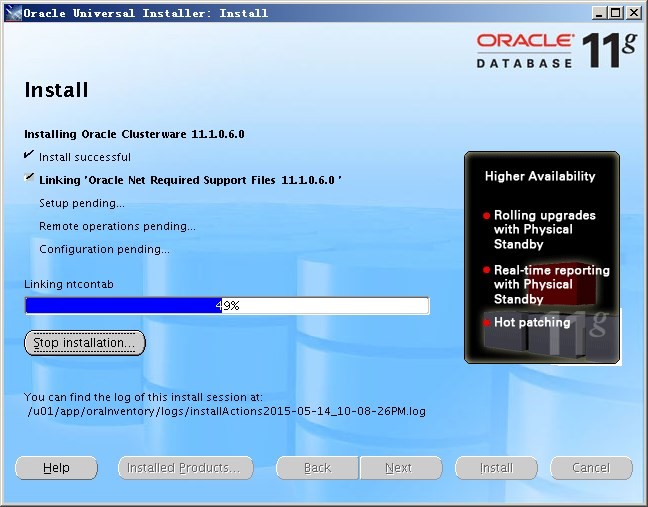

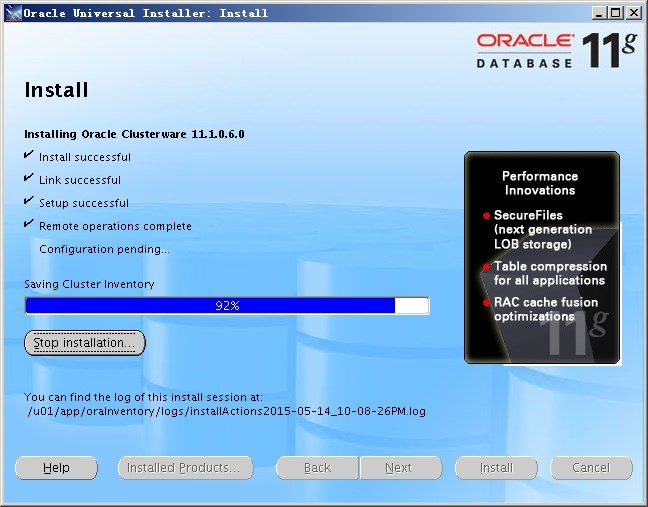

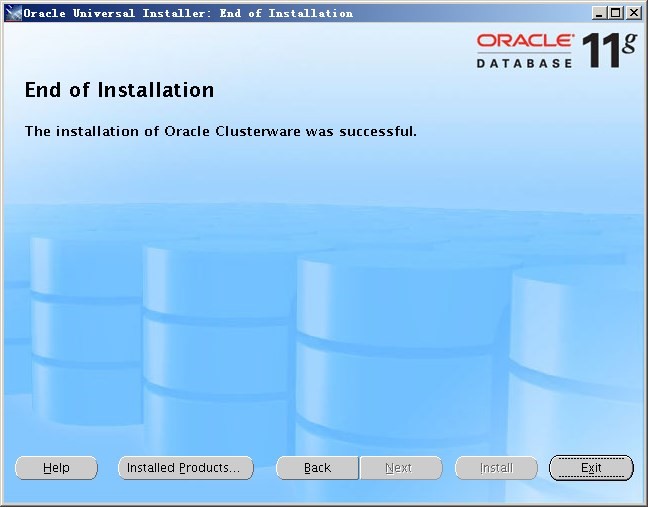

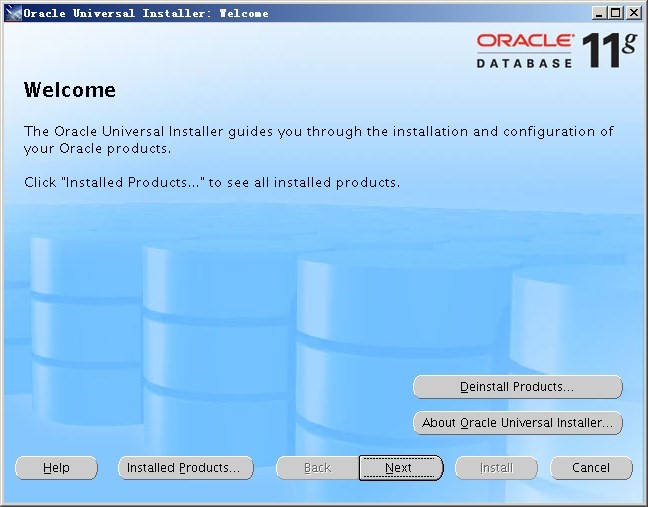

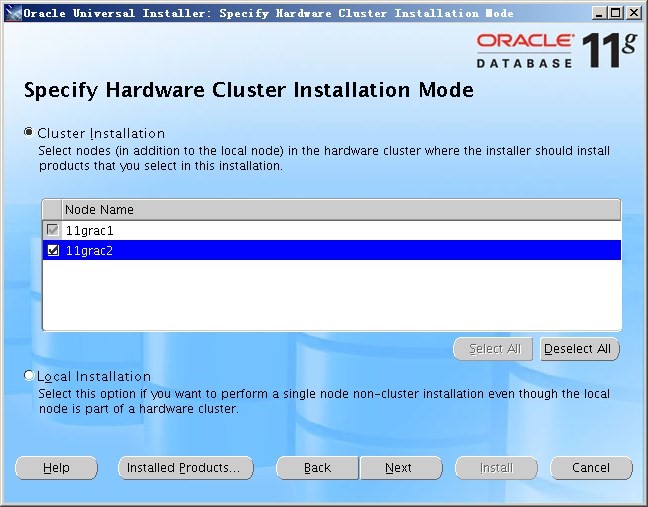

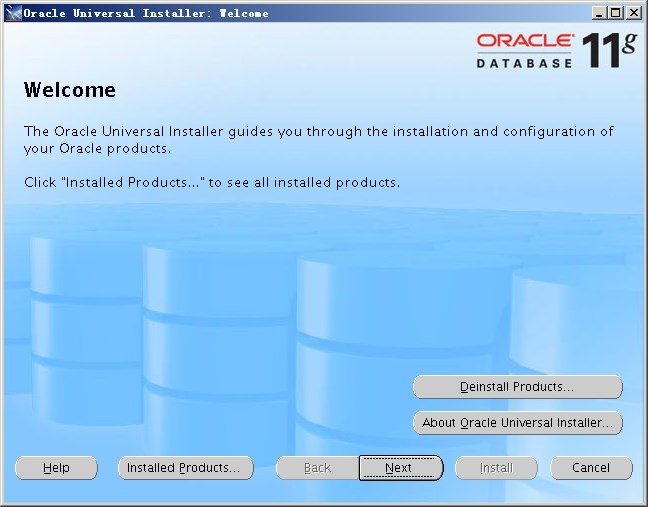

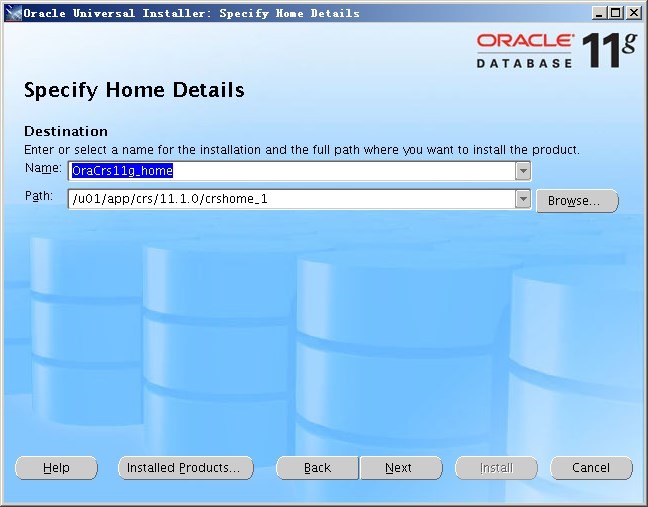

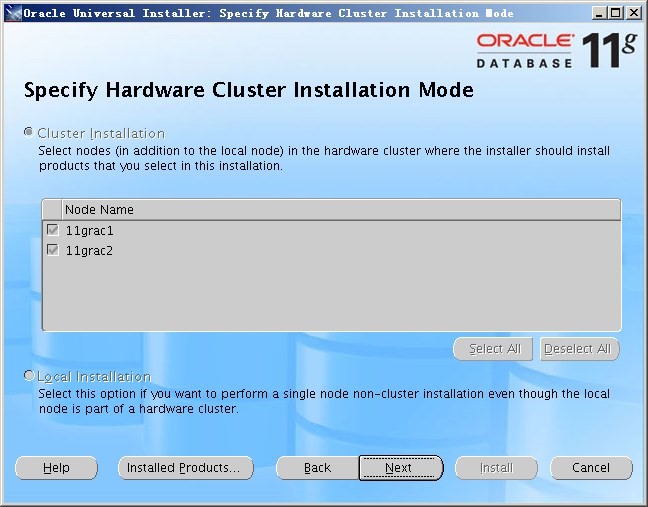

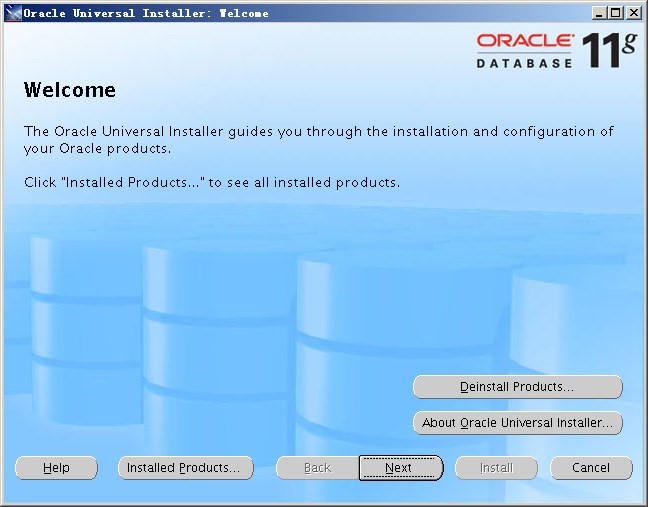

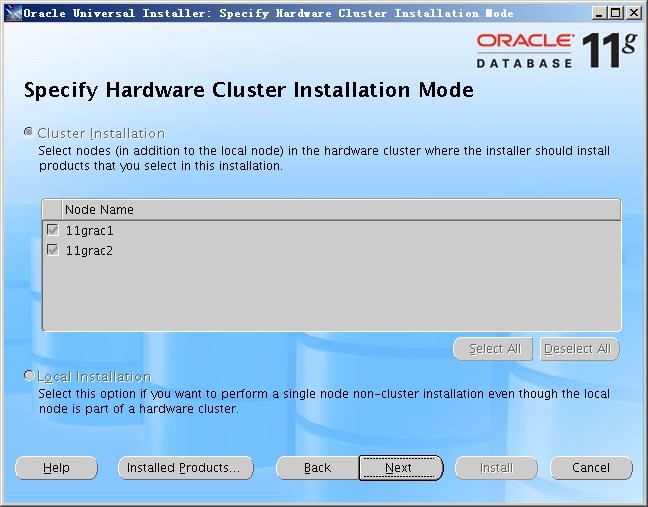

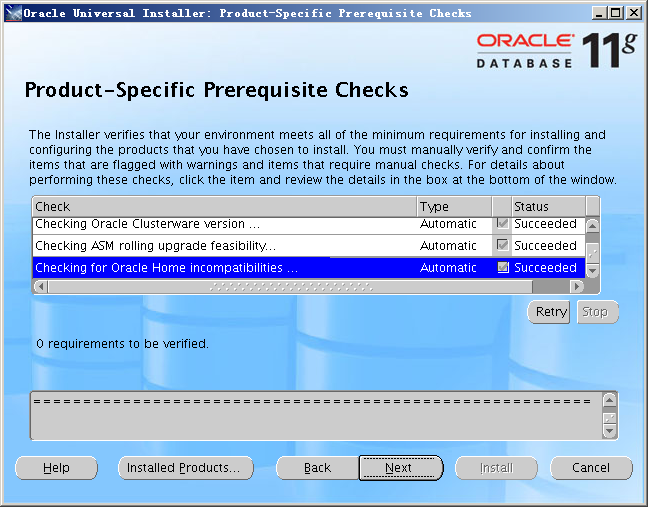

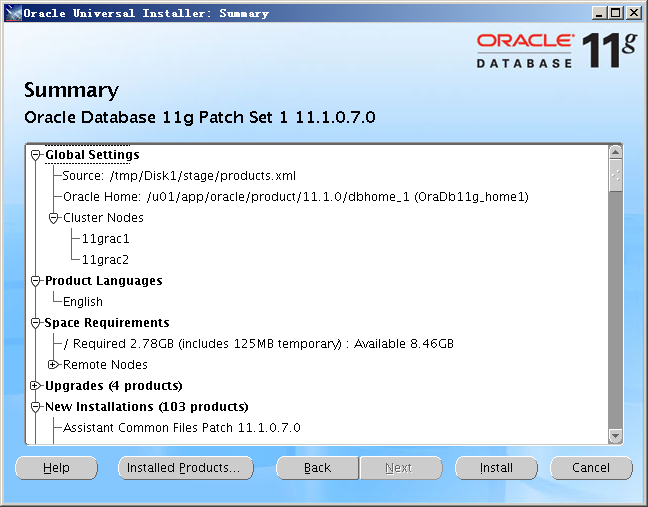

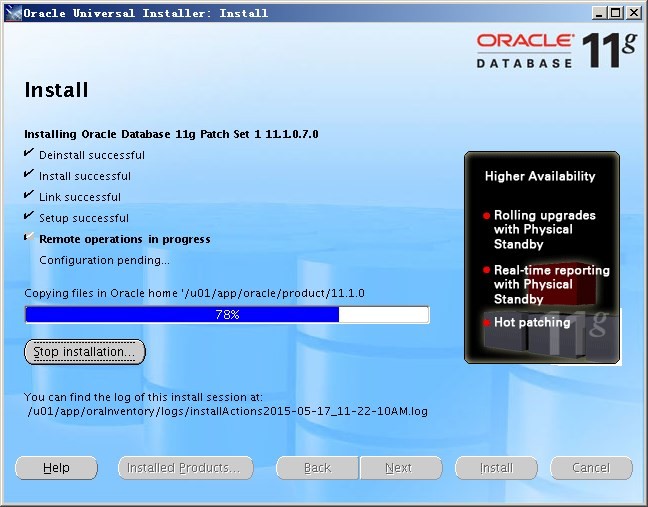

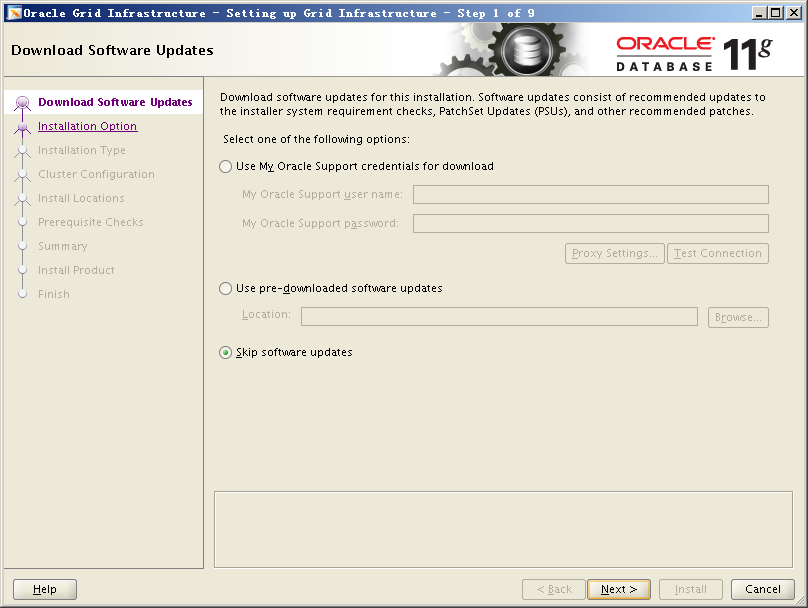

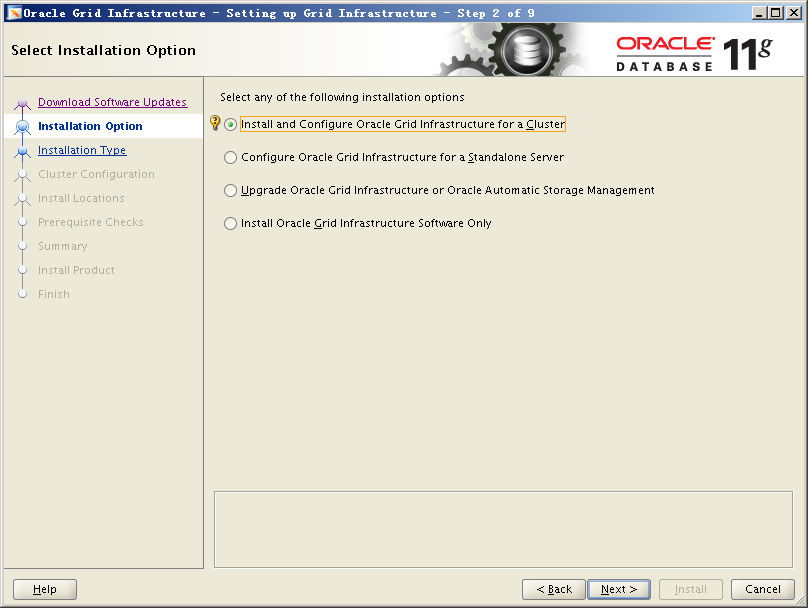

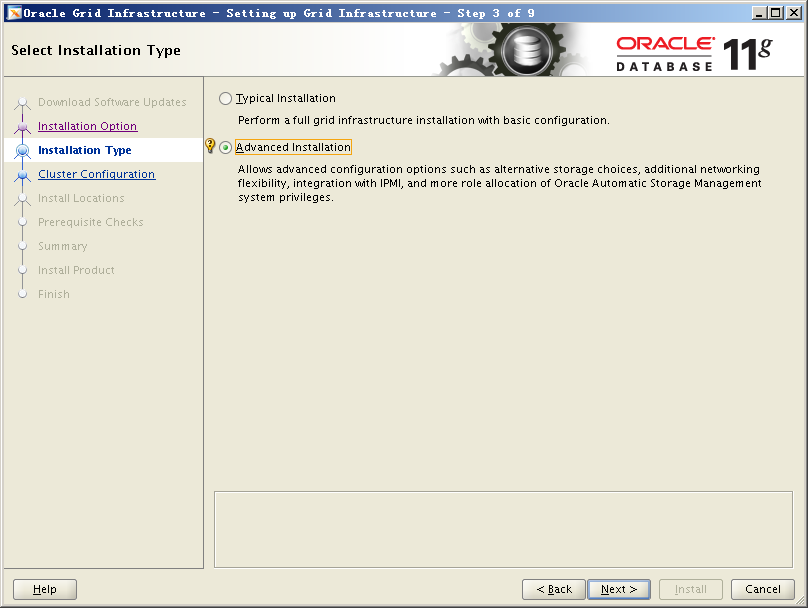

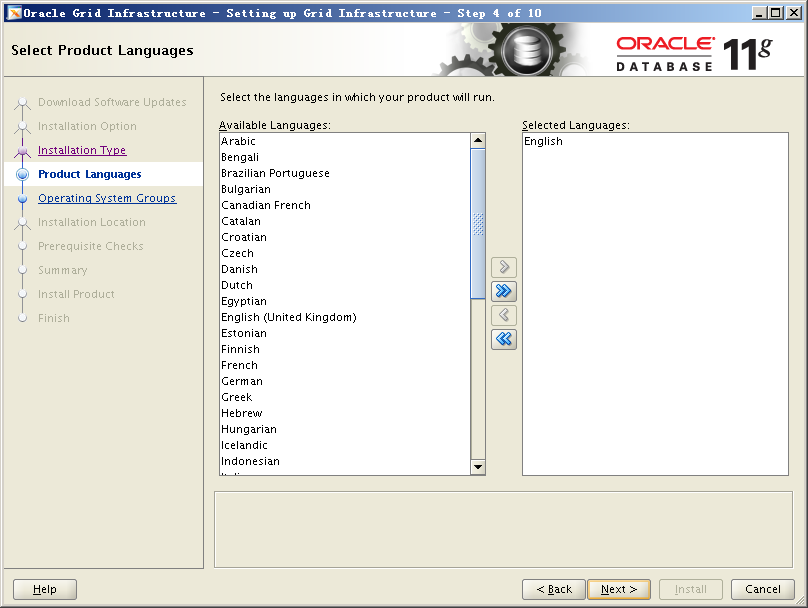

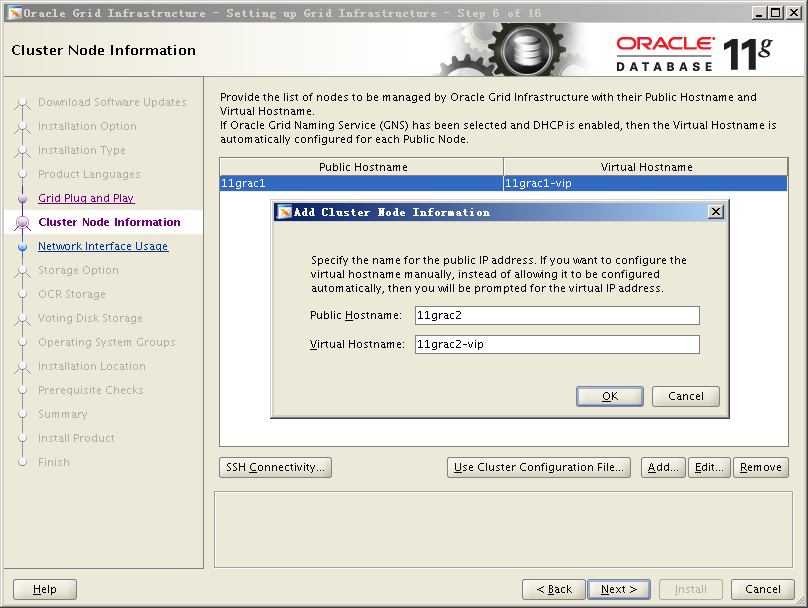

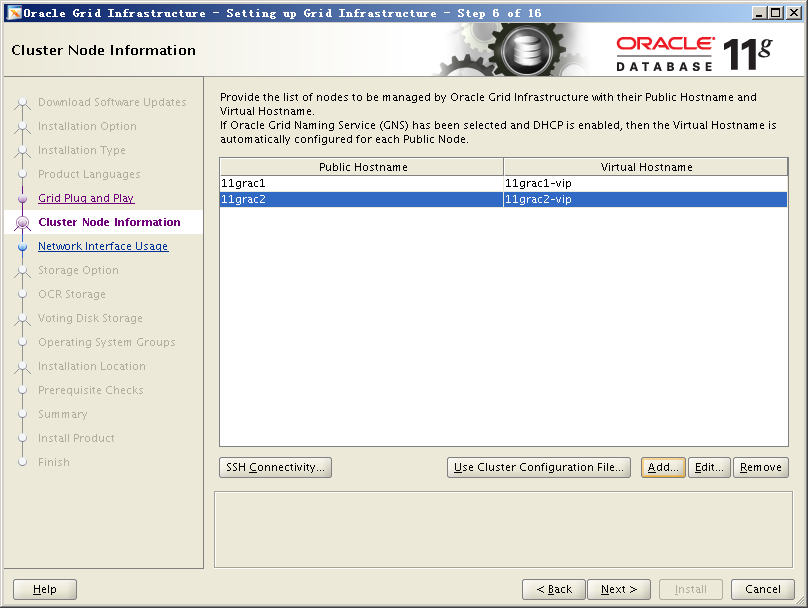

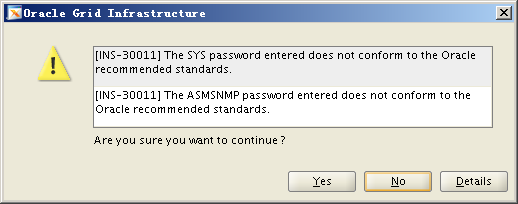

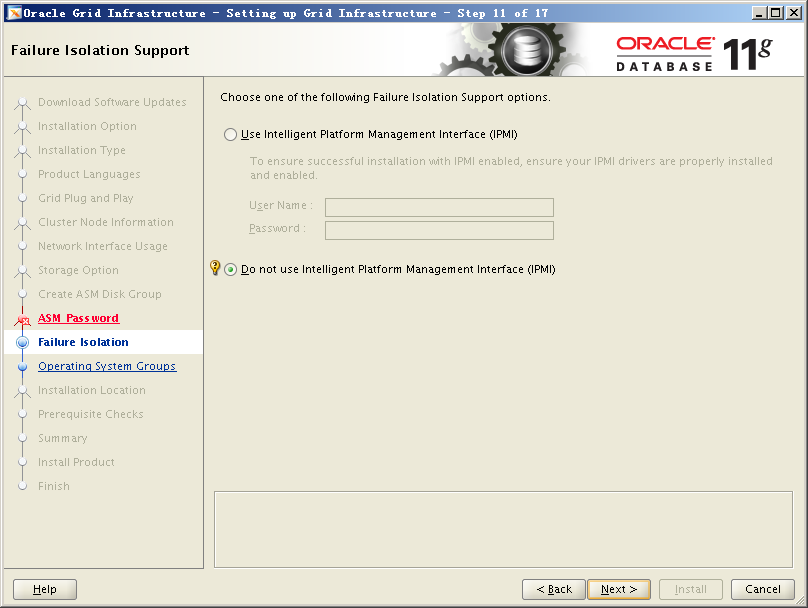

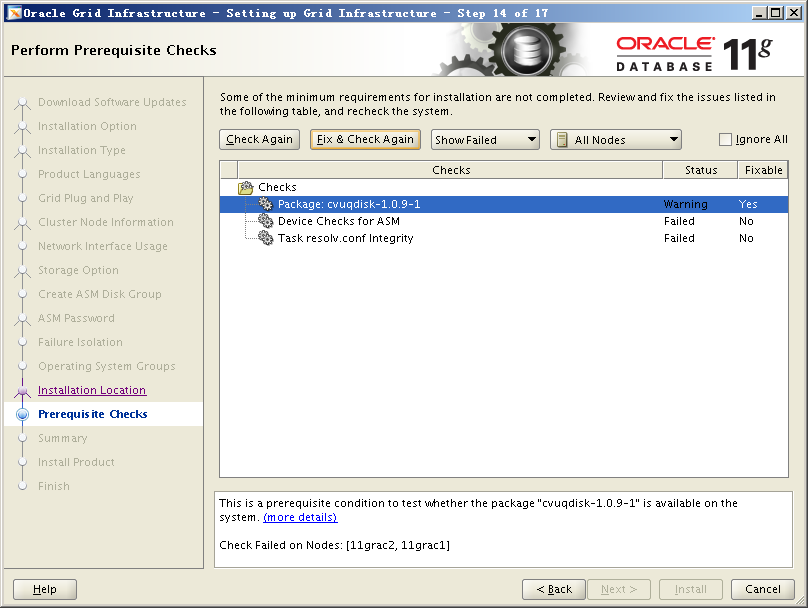

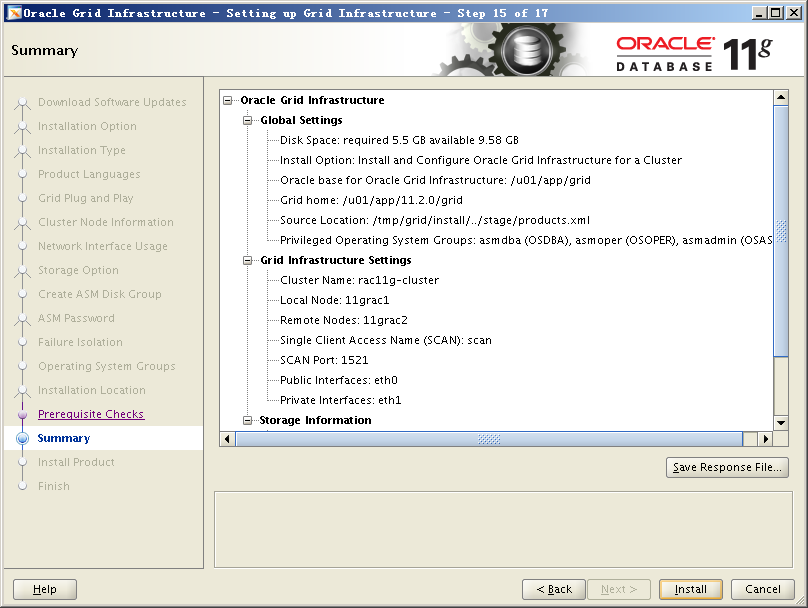

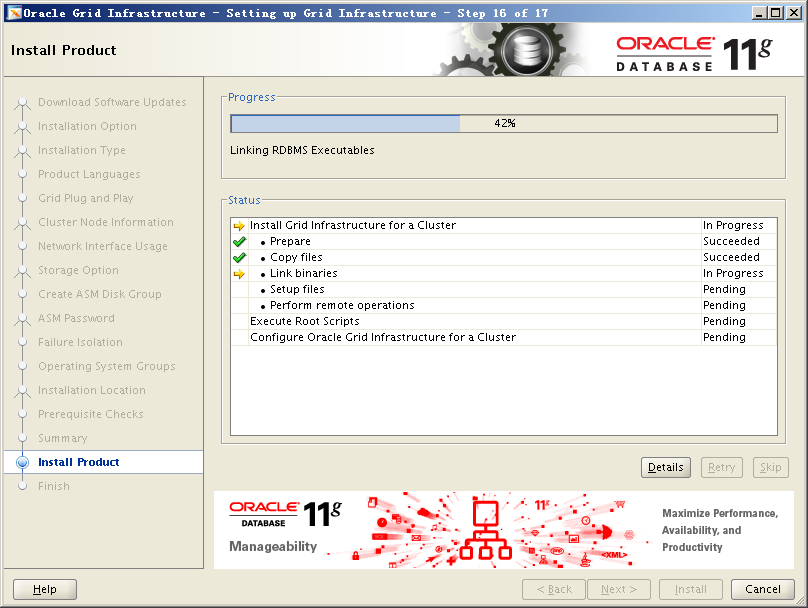

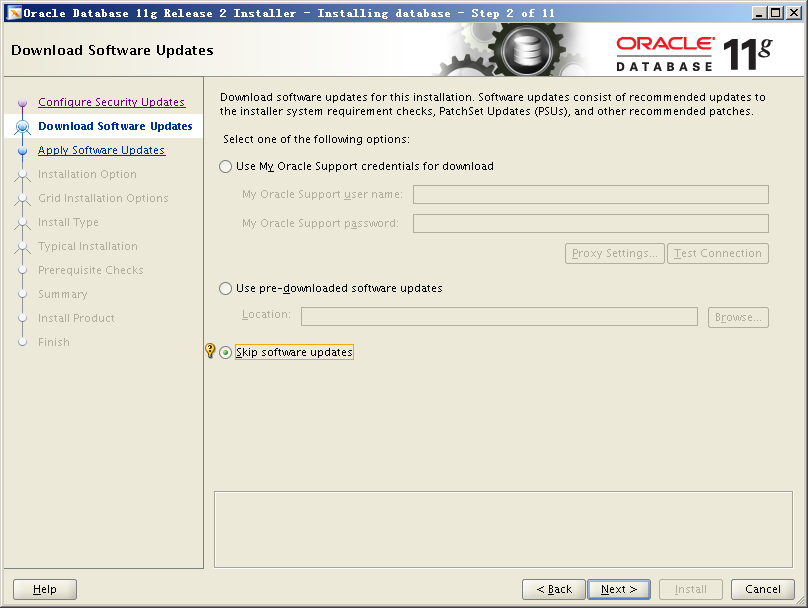

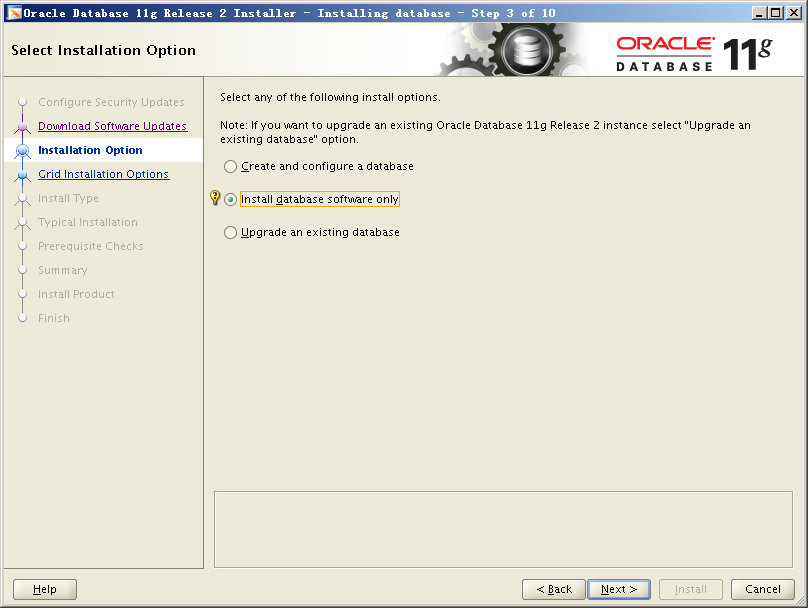

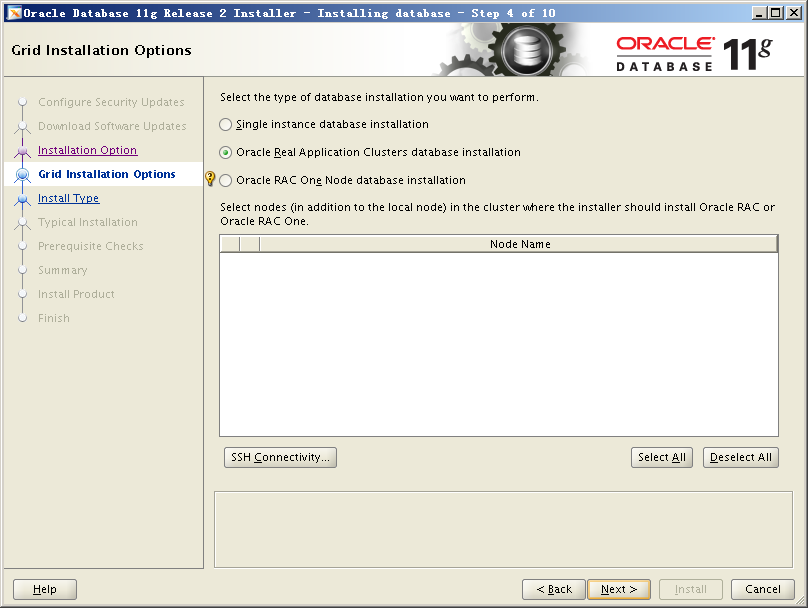

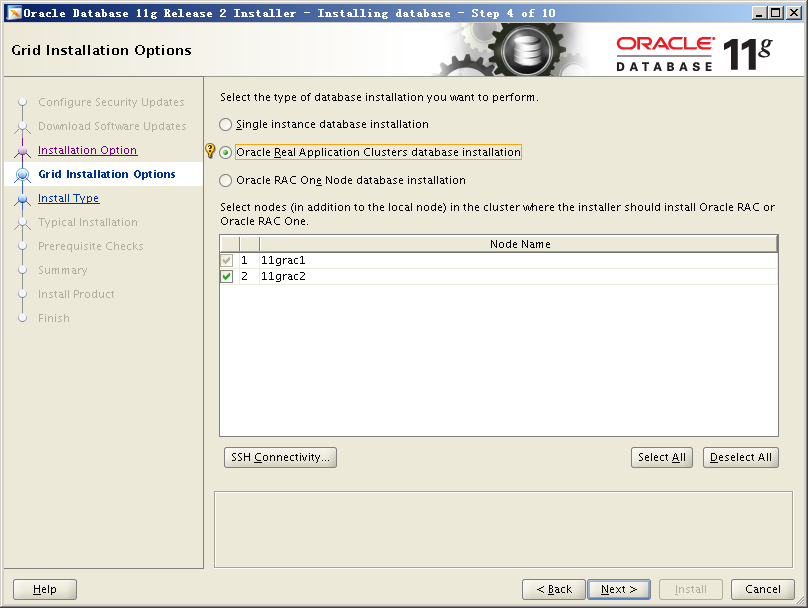

3) Start the grid software installation

[root@11grac1.localdomain:/tmp]$ unzip p13390677_112040_Linux-x86-64_3of7.zip

[root@11grac1.localdomain:/root]$ su - grid

[grid@11grac1 ~]$ cd /tmp/grid

[grid@11grac1 grid]$ export DISPLAY=192.168.56.1:0.0

[grid@11grac1 grid]$ ./runInstaller

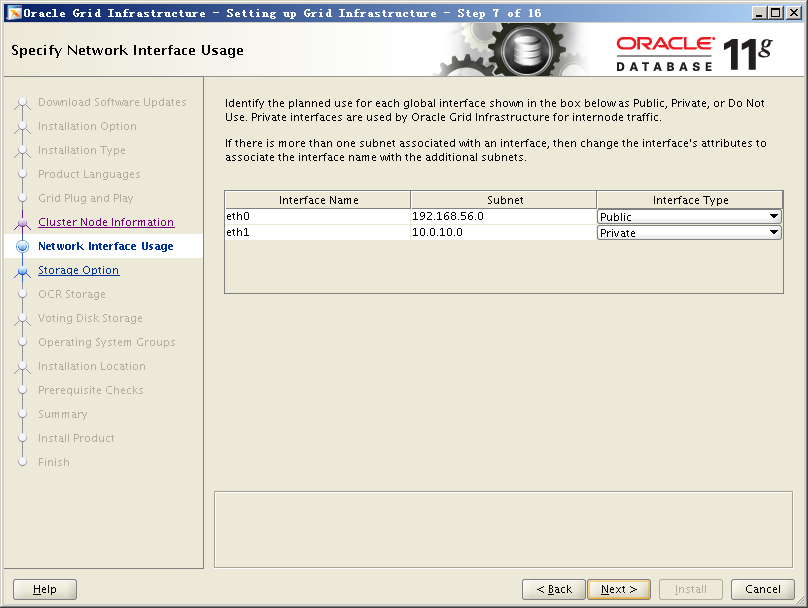

-- The SCAN Name entered here must be the name that DNS resolves

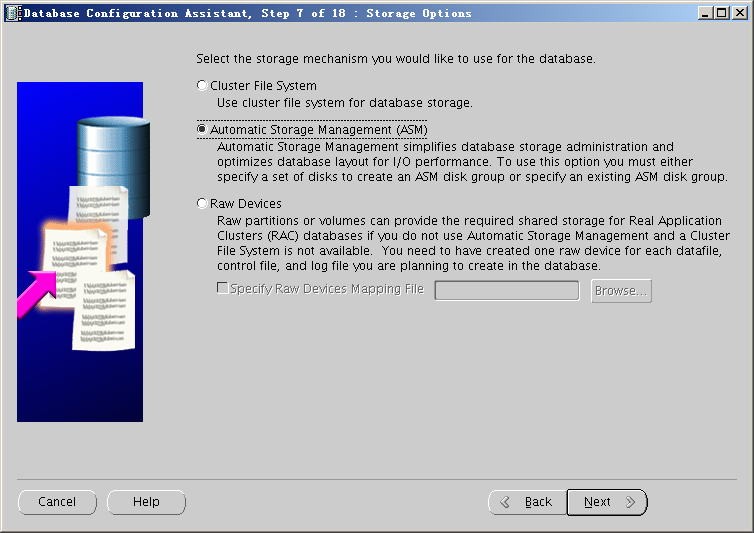

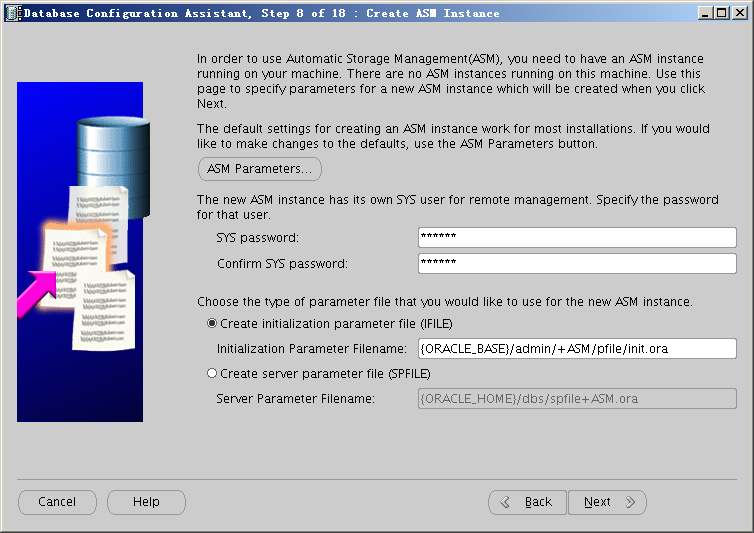

-- Select the newly added and configured ASM disk to create the ASM disk group

-- Run the fixup script as prompted

[root@11grac1.localdomain:/tmp]$ cd CVU_11.2.0.4.0_grid

[root@11grac1.localdomain:/tmp/CVU_11.2.0.4.0_grid]$ ./runfixup.sh

Response file being used is :./fixup.response

Enable file being used is :./fixup.enable

Log file location: ./orarun.log

Installing Package /tmp/CVU_11.2.0.4.0_grid//cvuqdisk-1.0.9-1.rpm

Preparing... ########################################### [100%]

1:cvuqdisk ########################################### [100%]

[root@11grac2.localdomain:/tmp]$ cd CVU_11.2.0.4.0_grid/

[root@11grac2.localdomain:/tmp/CVU_11.2.0.4.0_grid]$ ./runfixup.sh

Response file being used is :./fixup.response

Enable file being used is :./fixup.enable

Log file location: ./orarun.log

Installing Package /tmp/CVU_11.2.0.4.0_grid//cvuqdisk-1.0.9-1.rpm

Preparing... ########################################### [100%]

1:cvuqdisk ########################################### [100%]

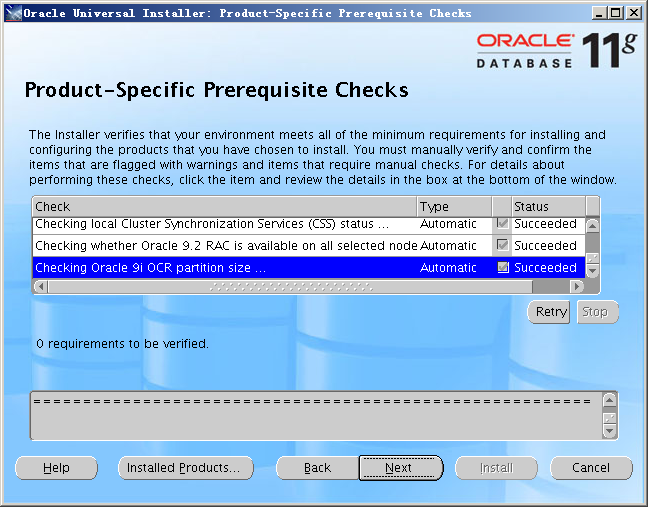

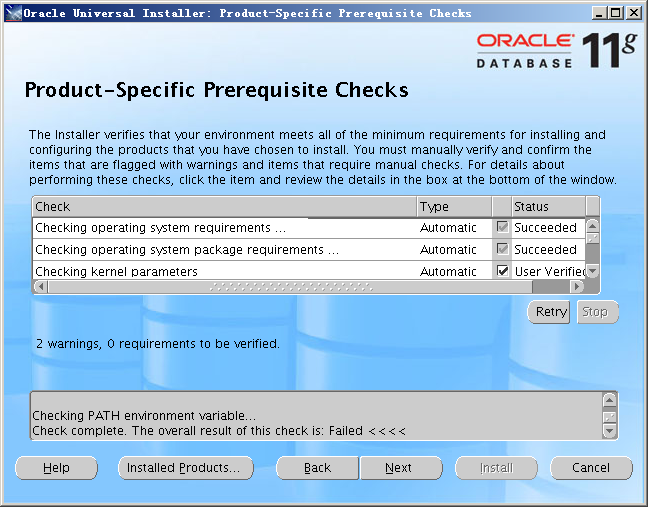

-- After running the fixup script, run the check again

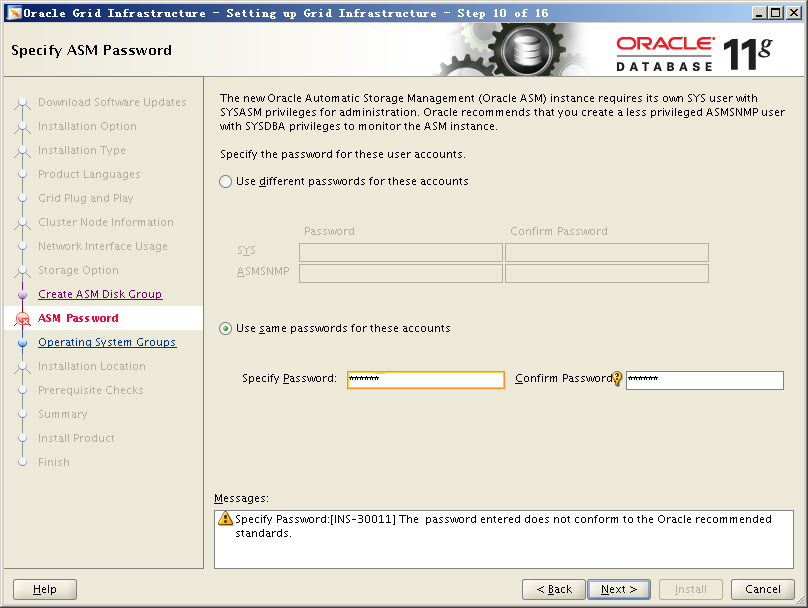

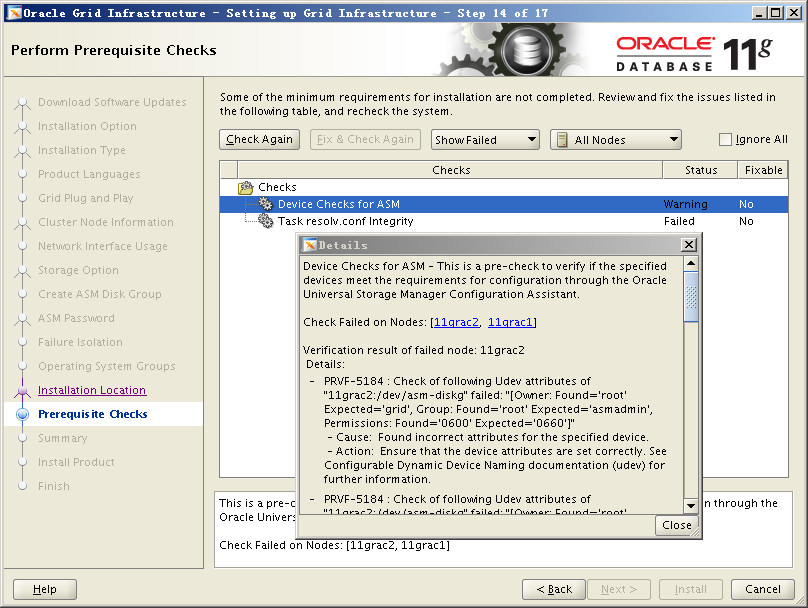

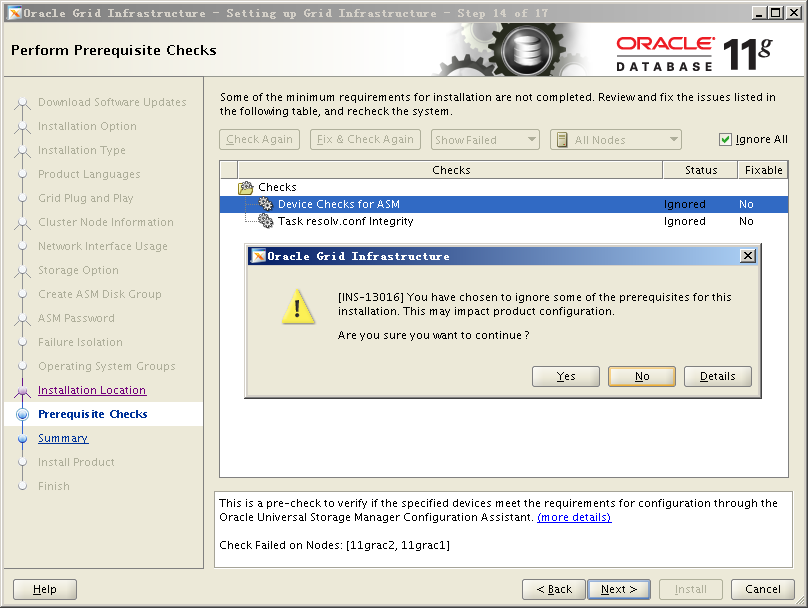

-- Two errors are still reported. We checked the disk permissions and DNS resolution and both are correct, so these errors can be ignored.

[root@11grac1.localdomain:/root]$ ls -l /dev/asm-diskg

brw-rw---- 1 grid asmadmin 8, 96 May 18 08:17 /dev/asm-diskg

[root@11grac2.localdomain:/root]$ ls -l /dev/asm-diskg

brw-rw---- 1 grid asmadmin 8, 96 May 18 08:18 /dev/asm-diskg

[root@11grac1.localdomain:/root]$ nslookup scan

Server: 192.168.56.111

Address: 192.168.56.111#53

Name: scan.oracle.com

Address: 192.168.56.102

Name: scan.oracle.com

Address: 192.168.56.103

Name: scan.oracle.com

Address: 192.168.56.101

[root@11grac1.localdomain:/root]$ nslookup 192.168.56.101

Server: 192.168.56.111

Address: 192.168.56.111#53

101.56.168.192.in-addr.arpa name = scan.oracle.com.

[root@11grac1.localdomain:/root]$ nslookup 192.168.56.102

Server: 192.168.56.111

Address: 192.168.56.111#53

102.56.168.192.in-addr.arpa name = scan.oracle.com.

[root@11grac1.localdomain:/root]$ nslookup 192.168.56.103

Server: 192.168.56.111

Address: 192.168.56.111#53

103.56.168.192.in-addr.arpa name = scan.oracle.com.

[root@11grac2.localdomain:/root]$ nslookup scan

Server: 192.168.56.111

Address: 192.168.56.111#53

Name: scan.oracle.com

Address: 192.168.56.103

Name: scan.oracle.com

Address: 192.168.56.101

Name: scan.oracle.com

Address: 192.168.56.102

[root@11grac2.localdomain:/root]$ nslookup 192.168.56.101

Server: 192.168.56.111

Address: 192.168.56.111#53

101.56.168.192.in-addr.arpa name = scan.oracle.com.

[root@11grac2.localdomain:/root]$ nslookup 192.168.56.102

Server: 192.168.56.111

Address: 192.168.56.111#53

102.56.168.192.in-addr.arpa name = scan.oracle.com.

[root@11grac2.localdomain:/root]$ nslookup 192.168.56.103

Server: 192.168.56.111

Address: 192.168.56.111#53

103.56.168.192.in-addr.arpa name = scan.oracle.com.
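The forward lookups above should always return the same three addresses, in rotating order. As a sketch, counting the distinct answer addresses turns that into a yes/no check. `scan_addr_count` is a hypothetical parser that assumes the `nslookup` output format shown here, where the resolver's own line carries a `#53` port suffix and is skipped.

```shell
# Hypothetical parser: read nslookup output on stdin and print the number of
# distinct answer addresses (the "Address: x.x.x.x#53" server line is skipped).
scan_addr_count() {
    awk '/^Address:/ && $2 !~ /#/ { print $2 }' | sort -u | wc -l | tr -d ' '
}

# Usage on each node:
# [ "$(nslookup scan | scan_addr_count)" -eq 3 ] || echo "SCAN does not resolve to 3 addresses"
```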

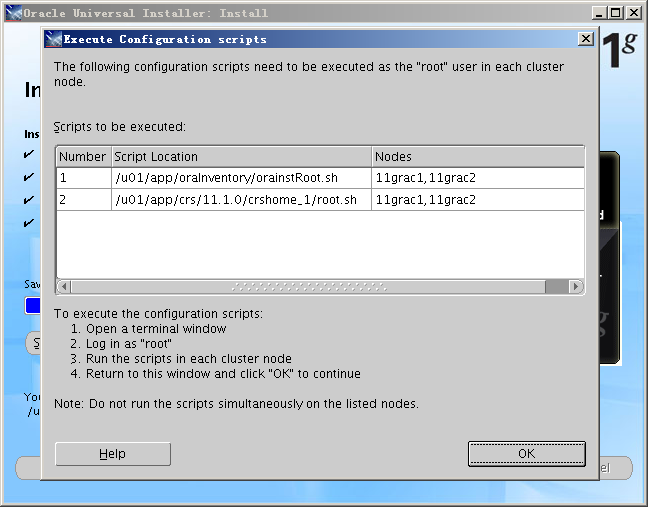

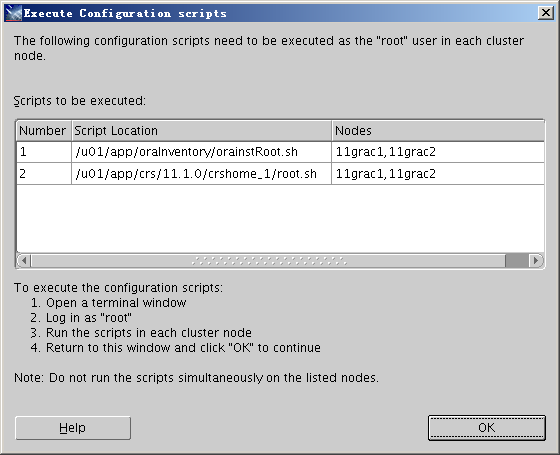

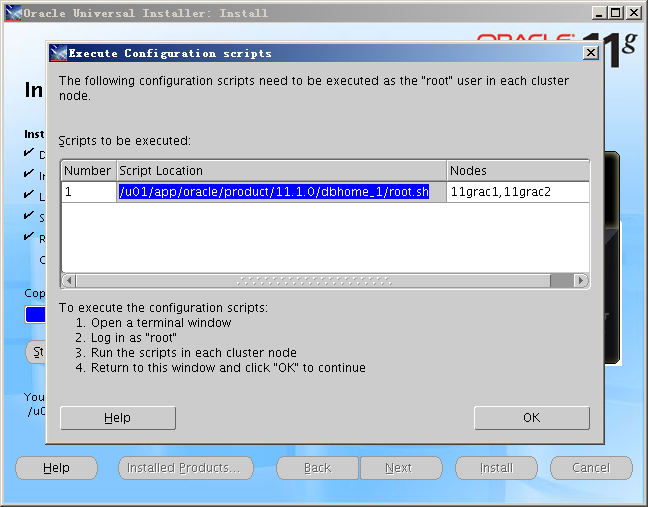

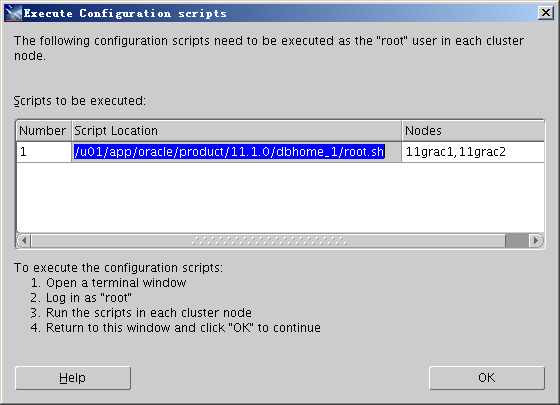

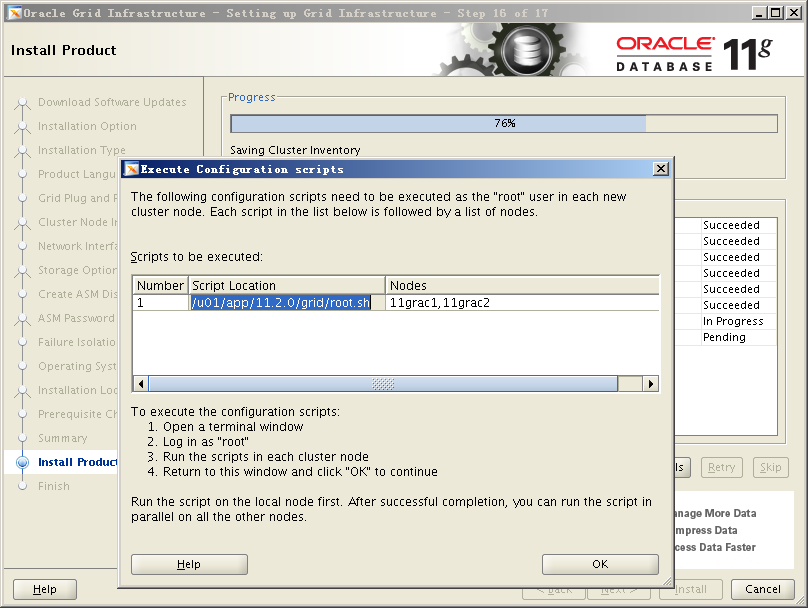

-- Run the scripts as prompted

[root@11grac1.localdomain:/root]$ /u01/app/11.2.0/grid/root.sh

Performing root user operation for Oracle 11g

The following environment variables are set as:

ORACLE_OWNER= grid

ORACLE_HOME= /u01/app/11.2.0/grid

Enter the full pathname of the local bin directory: [/usr/local/bin]:

The contents of "dbhome" have not changed. No need to overwrite.

The file "oraenv" already exists in /usr/local/bin. Overwrite it? (y/n)

[n]: y

Copying oraenv to /usr/local/bin ...

The file "coraenv" already exists in /usr/local/bin. Overwrite it? (y/n)

[n]: y

Copying coraenv to /usr/local/bin ...

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Using configuration parameter file: /u01/app/11.2.0/grid/crs/install/crsconfig_params

Creating trace directory

User ignored Prerequisites during installation

Installing Trace File Analyzer

OLR initialization - successful

root wallet

root wallet cert

root cert export

peer wallet

profile reader wallet

pa wallet

peer wallet keys

pa wallet keys

peer cert request

pa cert request

peer cert

pa cert

peer root cert TP

profile reader root cert TP

pa root cert TP

peer pa cert TP

pa peer cert TP

profile reader pa cert TP

profile reader peer cert TP

peer user cert

pa user cert

Adding Clusterware entries to inittab

CRS-2672: Attempting to start 'ora.mdnsd' on '11grac1'

CRS-2676: Start of 'ora.mdnsd' on '11grac1' succeeded

CRS-2672: Attempting to start 'ora.gpnpd' on '11grac1'

CRS-2676: Start of 'ora.gpnpd' on '11grac1' succeeded

CRS-2672: Attempting to start 'ora.cssdmonitor' on '11grac1'

CRS-2672: Attempting to start 'ora.gipcd' on '11grac1'

CRS-2676: Start of 'ora.gipcd' on '11grac1' succeeded

CRS-2676: Start of 'ora.cssdmonitor' on '11grac1' succeeded

CRS-2672: Attempting to start 'ora.cssd' on '11grac1'

CRS-2672: Attempting to start 'ora.diskmon' on '11grac1'

CRS-2676: Start of 'ora.diskmon' on '11grac1' succeeded

CRS-2676: Start of 'ora.cssd' on '11grac1' succeeded

ASM created and started successfully.

Disk Group OVDF created successfully.

clscfg: -install mode specified

Successfully accumulated necessary OCR keys.

Creating OCR keys for user 'root', privgrp 'root'..

Operation successful.

CRS-4256: Updating the profile

Successful addition of voting disk 1affe9a19f2a4f34bfdd54c179f54ae3.

Successfully replaced voting disk group with +OVDF.

CRS-4256: Updating the profile

CRS-4266: Voting file(s) successfully replaced

## STATE File Universal Id File Name Disk group

-- ----- ----------------- --------- ---------

1. ONLINE 1affe9a19f2a4f34bfdd54c179f54ae3 (/dev/asm-diskg) [OVDF]

Located 1 voting disk(s).

CRS-2672: Attempting to start 'ora.OVDF.dg' on '11grac1'

CRS-2676: Start of 'ora.OVDF.dg' on '11grac1' succeeded

Configure Oracle Grid Infrastructure for a Cluster ... succeeded

[root@11grac2.localdomain:/root]$ /u01/app/11.2.0/grid/root.sh

Performing root user operation for Oracle 11g

The following environment variables are set as:

ORACLE_OWNER= grid

ORACLE_HOME= /u01/app/11.2.0/grid

Enter the full pathname of the local bin directory: [/usr/local/bin]:

The contents of "dbhome" have not changed. No need to overwrite.

The file "oraenv" already exists in /usr/local/bin. Overwrite it? (y/n)

[n]: y

Copying oraenv to /usr/local/bin ...

The file "coraenv" already exists in /usr/local/bin. Overwrite it? (y/n)

[n]: y

Copying coraenv to /usr/local/bin ...

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Using configuration parameter file: /u01/app/11.2.0/grid/crs/install/crsconfig_params

Creating trace directory

User ignored Prerequisites during installation

Installing Trace File Analyzer

OLR initialization - successful

Adding Clusterware entries to inittab

CRS-4402: The CSS daemon was started in exclusive mode but found an active CSS daemon on node 11grac1, number 1, and is terminating

An active cluster was found during exclusive startup, restarting to join the cluster

Configure Oracle Grid Infrastructure for a Cluster ... succeeded

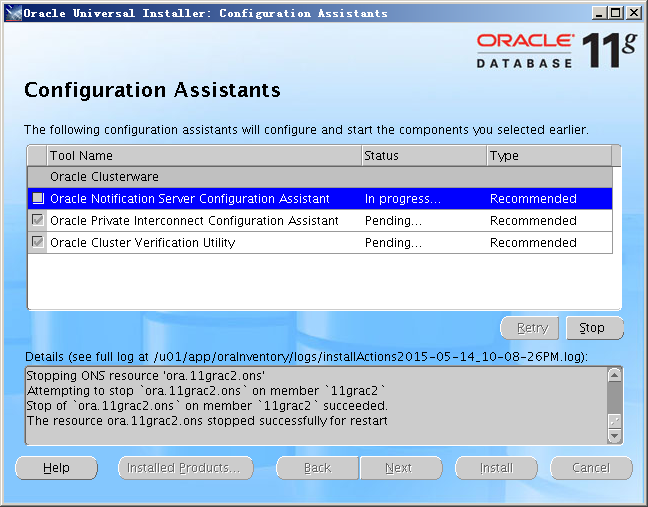

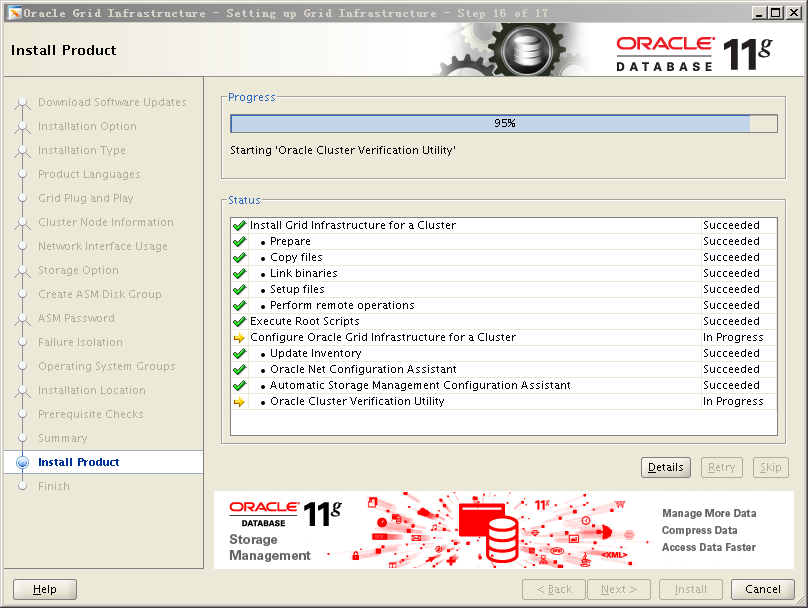

-- When the scripts have finished, click "OK" to complete the final grid configuration

-- Check the cluster status

[grid@11grac1 ~]$ crs_stat -t

Name Type Target State Host

------------------------------------------------------------

ora....SM1.asm application ONLINE ONLINE 11grac1

ora....C1.lsnr application ONLINE ONLINE 11grac1

ora....ac1.gsd application OFFLINE OFFLINE

ora....ac1.ons application ONLINE ONLINE 11grac1

ora....ac1.vip ora....t1.type ONLINE ONLINE 11grac1

ora....SM2.asm application ONLINE ONLINE 11grac2

ora....C2.lsnr application ONLINE ONLINE 11grac2

ora....ac2.gsd application OFFLINE OFFLINE

ora....ac2.ons application ONLINE ONLINE 11grac2

ora....ac2.vip ora....t1.type ONLINE ONLINE 11grac2

ora....ER.lsnr ora....er.type ONLINE ONLINE 11grac1

ora....N1.lsnr ora....er.type ONLINE ONLINE 11grac2

ora....N2.lsnr ora....er.type ONLINE ONLINE 11grac1

ora....N3.lsnr ora....er.type ONLINE ONLINE 11grac1

ora.OVDF.dg ora....up.type ONLINE ONLINE 11grac1

ora.asm ora.asm.type ONLINE ONLINE 11grac1

ora.cvu ora.cvu.type ONLINE ONLINE 11grac1

ora.gsd ora.gsd.type OFFLINE OFFLINE

ora....network ora....rk.type ONLINE ONLINE 11grac1

ora.oc4j ora.oc4j.type ONLINE ONLINE 11grac1

ora.ons ora.ons.type ONLINE ONLINE 11grac1

ora....ry.acfs ora....fs.type ONLINE ONLINE 11grac1

ora.scan1.vip ora....ip.type ONLINE ONLINE 11grac2

ora.scan2.vip ora....ip.type ONLINE ONLINE 11grac1

ora.scan3.vip ora....ip.type ONLINE ONLINE 11grac1

[grid@11grac1 ~]$ crsctl status res -t

--------------------------------------------------------------------------------

NAME TARGET STATE SERVER STATE_DETAILS

--------------------------------------------------------------------------------

Local Resources

--------------------------------------------------------------------------------

ora.LISTENER.lsnr

ONLINE ONLINE 11grac1

ONLINE ONLINE 11grac2

ora.OVDF.dg

ONLINE ONLINE 11grac1

ONLINE ONLINE 11grac2

ora.asm

ONLINE ONLINE 11grac1 Started

ONLINE ONLINE 11grac2 Started

ora.gsd

OFFLINE OFFLINE 11grac1

OFFLINE OFFLINE 11grac2

ora.net1.network

ONLINE ONLINE 11grac1

ONLINE ONLINE 11grac2

ora.ons

ONLINE ONLINE 11grac1

ONLINE ONLINE 11grac2

ora.registry.acfs

ONLINE ONLINE 11grac1

ONLINE ONLINE 11grac2

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.11grac1.vip

1 ONLINE ONLINE 11grac1

ora.11grac2.vip

1 ONLINE ONLINE 11grac2

ora.LISTENER_SCAN1.lsnr

1 ONLINE ONLINE 11grac2

ora.LISTENER_SCAN2.lsnr

1 ONLINE ONLINE 11grac1

ora.LISTENER_SCAN3.lsnr

1 ONLINE ONLINE 11grac1

ora.cvu

1 ONLINE ONLINE 11grac1

ora.oc4j

1 ONLINE ONLINE 11grac1

ora.scan1.vip

1 ONLINE ONLINE 11grac2

ora.scan2.vip

1 ONLINE ONLINE 11grac1

ora.scan3.vip

1 ONLINE ONLINE 11grac1
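Reading the `crsctl status res -t` listing by eye is fine for two nodes, but the same check can be scripted. The sketch below is a hypothetical helper (not an Oracle tool). It assumes the column layout shown above: each resource name on its own line, followed by TARGET/STATE/SERVER lines, with cluster resources prefixed by an instance number. It prints every resource whose TARGET is ONLINE but whose STATE is not.

```shell
#!/usr/bin/env bash
# find_unhealthy: hypothetical helper, not an Oracle-supplied tool.
# Reads `crsctl status res -t` output on stdin and prints
# "<resource> <server> <state>" for each entry whose TARGET is ONLINE
# but whose STATE is not. OFFLINE/OFFLINE entries such as ora.gsd are skipped.
find_unhealthy() {
  awk '
    /^ora\./ { res = $1; next }        # resource name line starts a new block
    res != "" && NF >= 2 {
      # cluster resources prefix the status line with an instance number
      i = ($1 ~ /^[0-9]+$/) ? 2 : 1
      target = $i; state = $(i + 1); server = $(i + 2)
      if (target == "ONLINE" && state != "ONLINE")
        print res, server, state
    }
  '
}
```

Run it as the grid user, e.g. `crsctl status res -t | find_unhealthy`; no output means every resource that should be online is online.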

9. Migrate the 11gR1 RAC disk groups to 11gR2 Grid management

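Before doing this, the disks backing the DATA and FRA disk groups from 11gR1 ASM (DATA on /dev/asm-diske; FRA, named RFA in this database, on /dev/asm-diskf) need a new owner and permissions, because 11gR1 had no grid user. This is done by editing the udev rules file.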
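The udev edit can be made with sed instead of by hand. A minimal sketch, assuming the one-rule-per-line format of 99-oracle-asmdevices.rules shown below; the function name `set_udev_owner` is hypothetical. It changes only OWNER and GROUP on the rule whose NAME matches and leaves MODE alone.

```shell
#!/usr/bin/env bash
# set_udev_owner: hypothetical helper for the ownership change described above.
# Usage: set_udev_owner RULES_FILE DEVICE_NAME OWNER GROUP
# Rewrites OWNER= and GROUP= only on the rule line whose NAME= equals
# DEVICE_NAME, after saving a .bak copy of the rules file.
set_udev_owner() {
  local rules="$1" name="$2" owner="$3" group="$4"
  [ -f "$rules" ] || { echo "rules file not found: $rules" >&2; return 1; }
  cp -p "$rules" "$rules.bak"
  sed -i \
    -e "/NAME=\"${name}\"/ s/OWNER=\"[^\"]*\"/OWNER=\"${owner}\"/" \
    -e "/NAME=\"${name}\"/ s/GROUP=\"[^\"]*\"/GROUP=\"${group}\"/" \
    "$rules"
}

# Example for this environment (as root on both nodes, then run start_udev):
# set_udev_owner /etc/udev/rules.d/99-oracle-asmdevices.rules asm-diske grid asmadmin
# set_udev_owner /etc/udev/rules.d/99-oracle-asmdevices.rules asm-diskf grid asmadmin
```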

[root@11grac1.localdomain:/root]$ ls -l /dev/asm-disk*

brw-rw---- 1 oracle oinstall 8, 16 May 18 2015 /dev/asm-diskb

brw-rw---- 1 oracle oinstall 8, 32 May 18 2015 /dev/asm-diskc

brw-rw---- 1 oracle oinstall 8, 48 May 18 08:16 /dev/asm-diskd

brw-rw---- 1 oracle oinstall 8, 64 May 18 08:15 /dev/asm-diske

brw-rw---- 1 oracle oinstall 8, 80 May 18 08:15 /dev/asm-diskf

brw-rw---- 1 grid asmadmin 8, 96 May 18 11:12 /dev/asm-diskg

[root@11grac2.localdomain:/root]$ ls -l /dev/asm-disk*

brw-rw---- 1 oracle oinstall 8, 16 May 18 08:16 /dev/asm-diskb

brw-rw---- 1 oracle oinstall 8, 32 May 18 08:16 /dev/asm-diskc

brw-rw---- 1 oracle oinstall 8, 48 May 18 08:17 /dev/asm-diskd

brw-rw---- 1 oracle oinstall 8, 64 May 18 08:15 /dev/asm-diske

brw-rw---- 1 oracle oinstall 8, 80 May 18 08:15 /dev/asm-diskf

brw-rw---- 1 grid asmadmin 8, 96 May 18 11:13 /dev/asm-diskg

[root@11grac1.localdomain:/root]$ cat /etc/udev/rules.d/99-oracle-asmdevices.rules

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id -g -u -s %p", RESULT=="SATA_VBOX_HARDDISK_VB424a5eb7-c9274de0_", NAME="asm-diskb", OWNER="oracle", GROUP="oinstall", MODE="0660"

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id -g -u -s %p", RESULT=="SATA_VBOX_HARDDISK_VB95c63929-9336a092_", NAME="asm-diskc", OWNER="oracle", GROUP="oinstall", MODE="0660"

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id -g -u -s %p", RESULT=="SATA_VBOX_HARDDISK_VBa044f79d-51b67554_", NAME="asm-diskd", OWNER="oracle", GROUP="oinstall", MODE="00660"

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id -g -u -s %p", RESULT=="SATA_VBOX_HARDDISK_VB86ee407e-415b5b32_", NAME="asm-diske", OWNER="grid", GROUP="asmadmin", MODE="0660"

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id -g -u -s %p", RESULT=="SATA_VBOX_HARDDISK_VBe59f5561-e0df75b7_", NAME="asm-diskf", OWNER="grid", GROUP="asmadmin", MODE="0660"

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id -g -u -s %p", RESULT=="SATA_VBOX_HARDDISK_VBa7feea0a-164d1478_", NAME="asm-diskg", OWNER="grid", GROUP="asmadmin", MODE="0660"

[root@11grac2.localdomain:/root]$ cat /etc/udev/rules.d/99-oracle-asmdevices.rules

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id -g -u -s %p", RESULT=="SATA_VBOX_HARDDISK_VB424a5eb7-c9274de0_", NAME="asm-diskb", OWNER="oracle", GROUP="oinstall", MODE="0660"

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id -g -u -s %p", RESULT=="SATA_VBOX_HARDDISK_VB95c63929-9336a092_", NAME="asm-diskc", OWNER="oracle", GROUP="oinstall", MODE="0660"

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id -g -u -s %p", RESULT=="SATA_VBOX_HARDDISK_VBa044f79d-51b67554_", NAME="asm-diskd", OWNER="oracle", GROUP="oinstall", MODE="0660"

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id -g -u -s %p", RESULT=="SATA_VBOX_HARDDISK_VB86ee407e-415b5b32_", NAME="asm-diske", OWNER="grid", GROUP="asmadmin", MODE="0660"

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id -g -u -s %p", RESULT=="SATA_VBOX_HARDDISK_VBe59f5561-e0df75b7_", NAME="asm-diskf", OWNER="grid", GROUP="asmadmin", MODE="0660"

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id -g -u -s %p", RESULT=="SATA_VBOX_HARDDISK_VBa7feea0a-164d1478_", NAME="asm-diskg", OWNER="grid", GROUP="asmadmin", MODE="0660"

[root@11grac1.localdomain:/root]$ start_udev

Starting udev: [ OK ]

[root@11grac1.localdomain:/root]$ ls -l /dev/asm-disk*

brw-rw---- 1 oracle oinstall 8, 16 May 18 2015 /dev/asm-diskb

brw-rw---- 1 oracle oinstall 8, 32 May 18 2015 /dev/asm-diskc

brw-rw---- 1 oracle oinstall 8, 48 May 18 08:16 /dev/asm-diskd

brw-rw---- 1 grid asmadmin 8, 64 May 18 08:15 /dev/asm-diske

brw-rw---- 1 grid asmadmin 8, 80 May 18 08:15 /dev/asm-diskf

brw-rw---- 1 grid asmadmin 8, 96 May 18 11:13 /dev/asm-diskg

[root@11grac2.localdomain:/root]$ start_udev

Starting udev: [ OK ]

[root@11grac2.localdomain:/root]$ ls -l /dev/asm-disk*

brw-rw---- 1 oracle oinstall 8, 16 May 18 08:16 /dev/asm-diskb

brw-rw---- 1 oracle oinstall 8, 32 May 18 08:16 /dev/asm-diskc

brw-rw---- 1 oracle oinstall 8, 48 May 18 08:17 /dev/asm-diskd

brw-rw---- 1 grid asmadmin 8, 64 May 18 08:15 /dev/asm-diske

brw-rw---- 1 grid asmadmin 8, 80 May 18 08:15 /dev/asm-diskf

brw-rw---- 1 grid asmadmin 8, 96 May 18 11:13 /dev/asm-diskg
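After start_udev, asm-diske, asm-diskf and asm-diskg should all show grid:asmadmin on both nodes. A sketch that checks this with GNU stat instead of reading `ls -l` by eye; `check_owner` is a hypothetical helper and assumes GNU coreutils `stat -c`.

```shell
#!/usr/bin/env bash
# check_owner: hypothetical verification helper.
# Usage: check_owner OWNER GROUP DEVICE...
# Prints each device that is missing or whose owner:group differs from
# OWNER:GROUP, and returns non-zero if any device failed the check.
check_owner() {
  local want="$1:$2" rc=0 dev got
  shift 2
  for dev in "$@"; do
    if [ ! -e "$dev" ]; then
      echo "$dev: missing"; rc=1; continue
    fi
    got=$(stat -c '%U:%G' "$dev")     # GNU stat: owner name and group name
    if [ "$got" != "$want" ]; then
      echo "$dev: $got (expected $want)"; rc=1
    fi
  done
  return "$rc"
}

# Example, on each node after start_udev:
# check_owner grid asmadmin /dev/asm-diske /dev/asm-diskf /dev/asm-diskg
```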

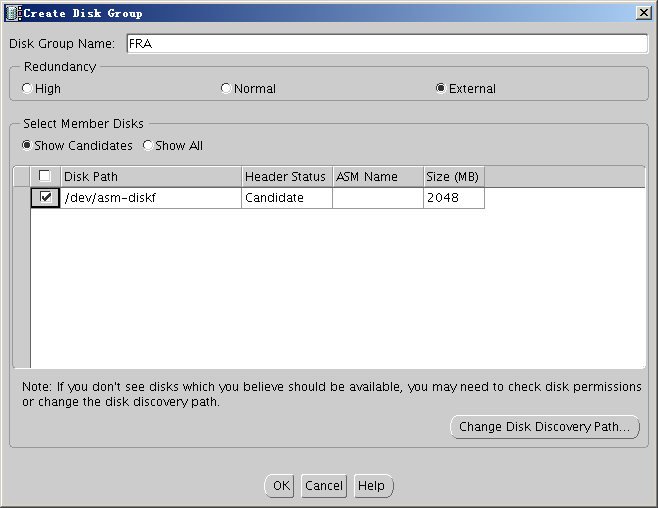

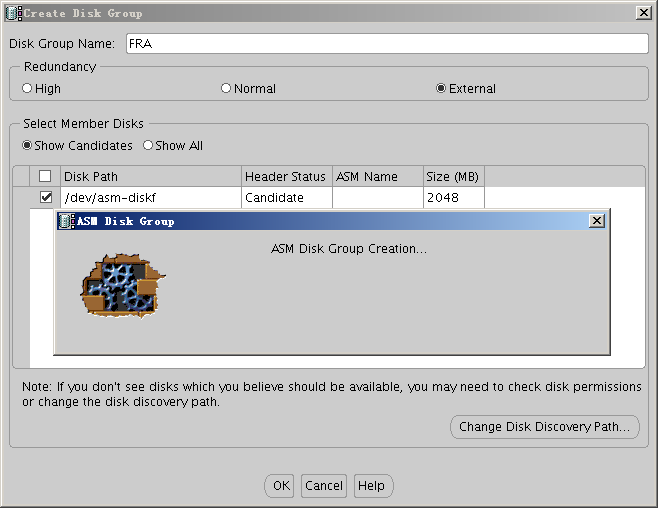

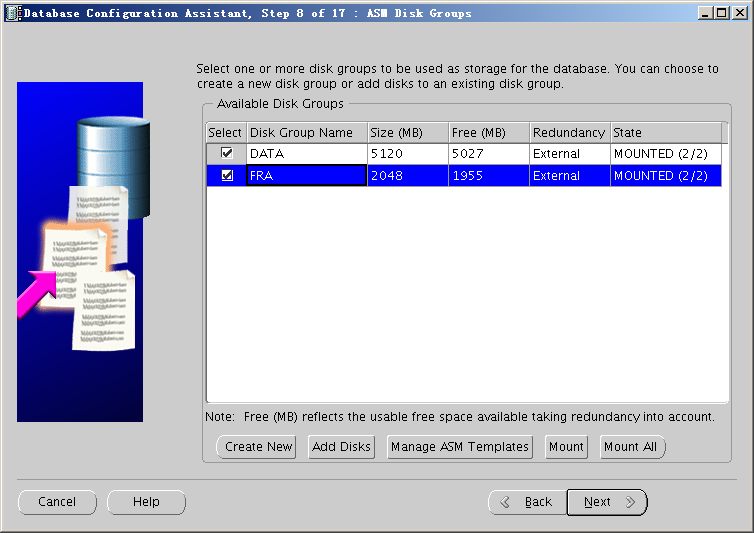

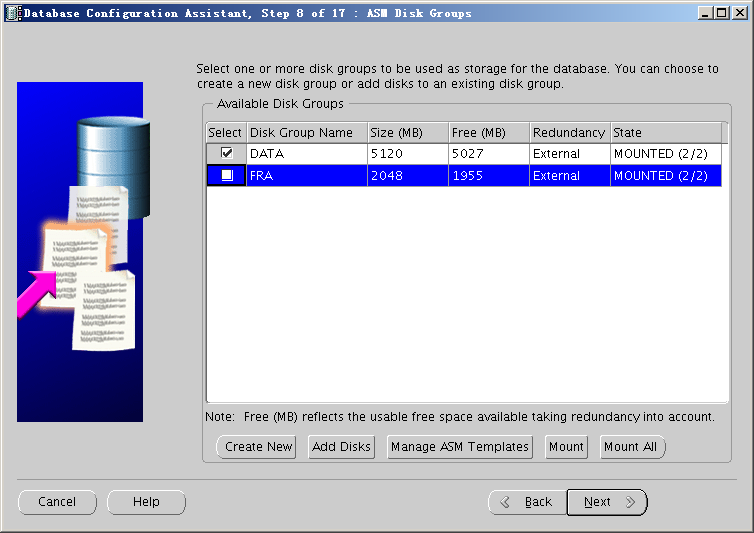

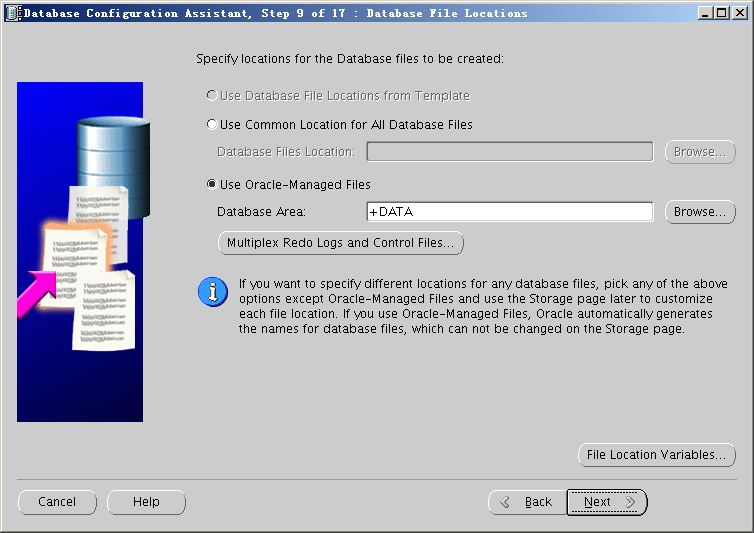

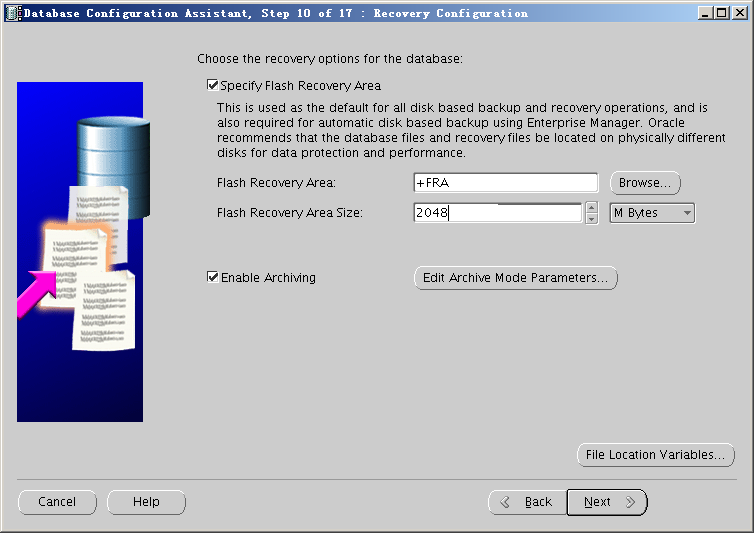

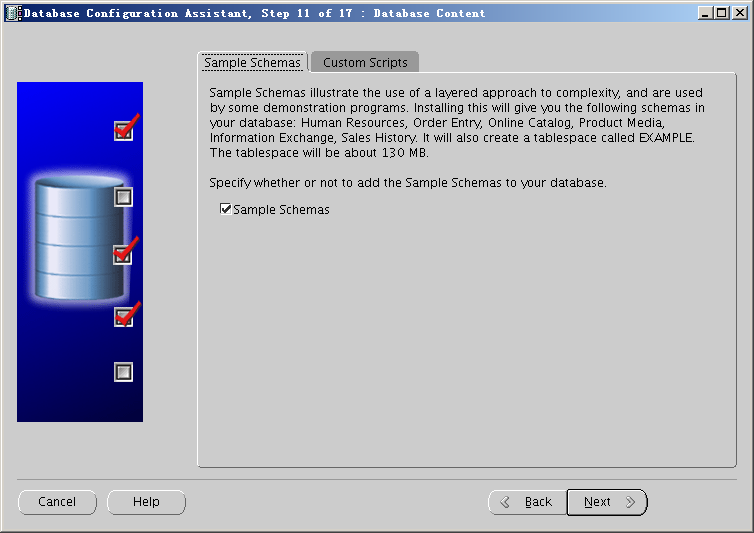

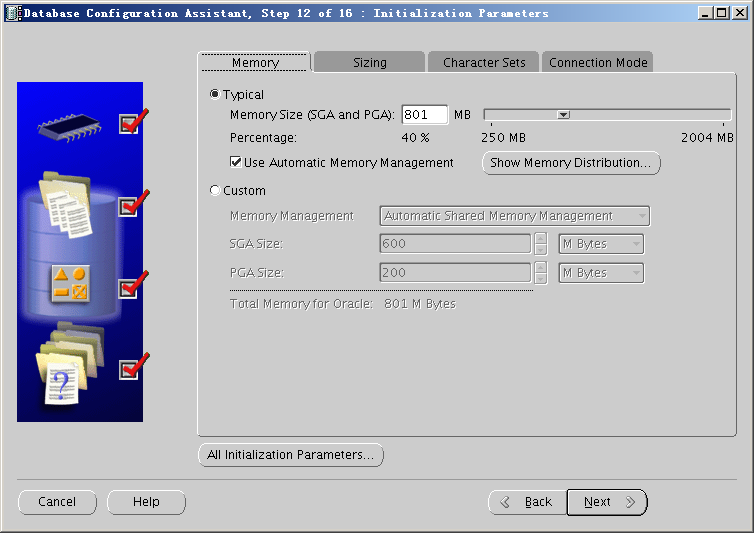

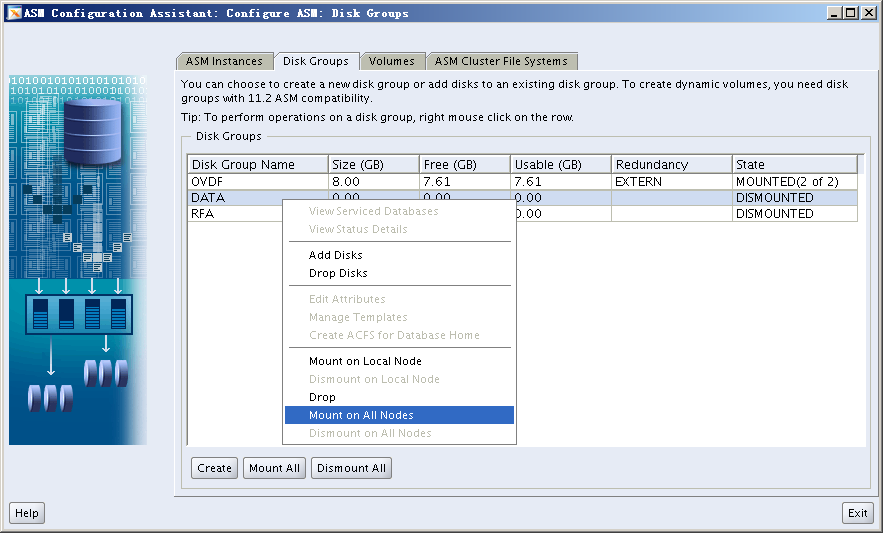

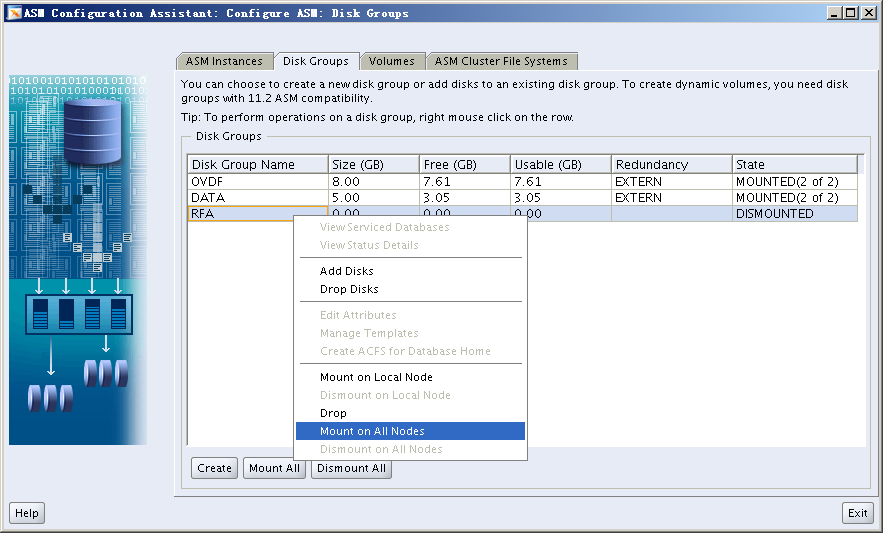

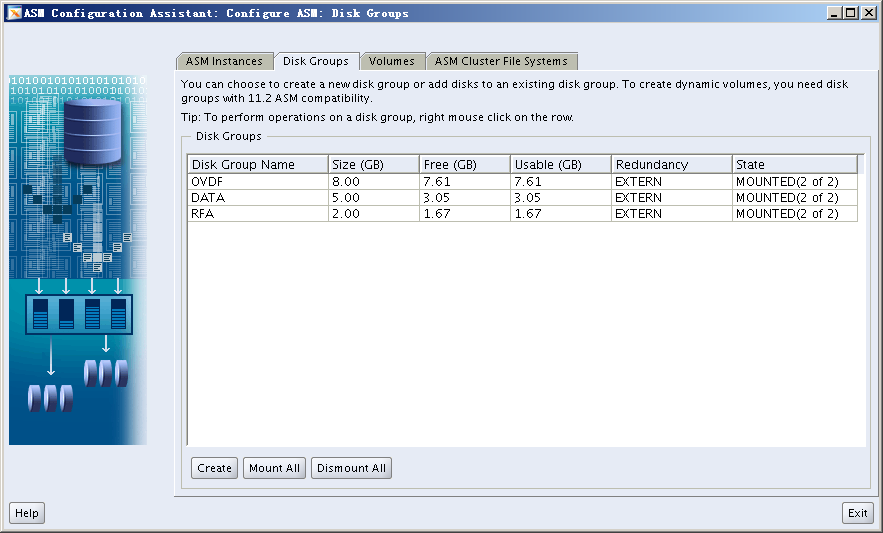

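As the grid user, run /u01/app/11.2.0/grid/bin/asmca to open the ASMCA GUI and mount the two ASM disk groups from the 11gR1 RAC in the ASM instances of the 11gR2 Grid software, so that they are managed there.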
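This test used the ASMCA GUI. As a command-line alternative, assuming the 11.2 `asmcmd mount` command and a grid user environment pointing at the local ASM instance, the two disk groups could be mounted on each node with a small loop (the SQL equivalent is `ALTER DISKGROUP DATA MOUNT;` connected as SYSASM). The names DATA and RFA are the disk group names this database reports in V$ASM_DISKGROUP; `mount_diskgroups` and its `-n` dry-run flag are hypothetical.

```shell
#!/usr/bin/env bash
# mount_diskgroups: hypothetical wrapper, an alternative to the ASMCA mount step.
# Run as the grid user on each node with the local ASM environment set.
# Usage: mount_diskgroups [-n] DISKGROUP...
#   -n  dry run: print the asmcmd commands instead of running them
mount_diskgroups() {
  local dry=0 dg rc=0
  if [ "${1:-}" = "-n" ]; then dry=1; shift; fi
  for dg in "$@"; do
    if [ "$dry" -eq 1 ]; then
      echo "asmcmd mount $dg"
    else
      asmcmd mount "$dg" || { echo "failed to mount $dg" >&2; rc=1; }
    fi
  done
  return "$rc"
}

# Example: mount_diskgroups -n DATA RFA   (drop -n to actually mount)
```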

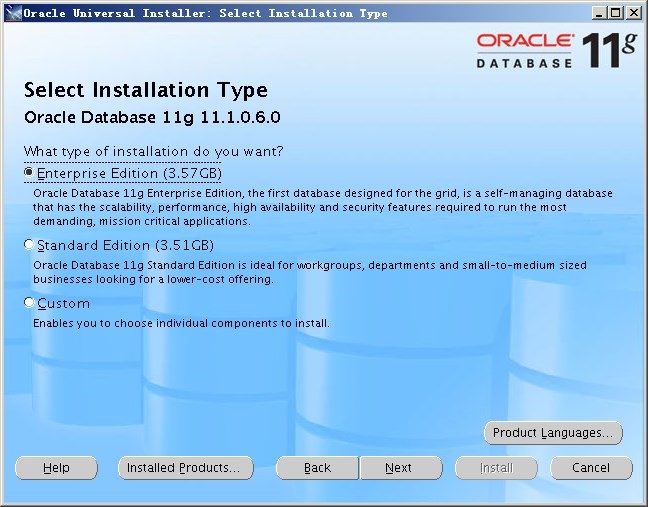

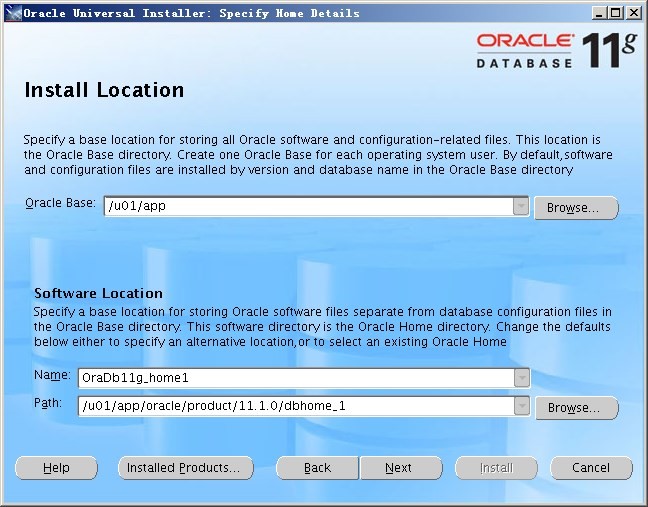

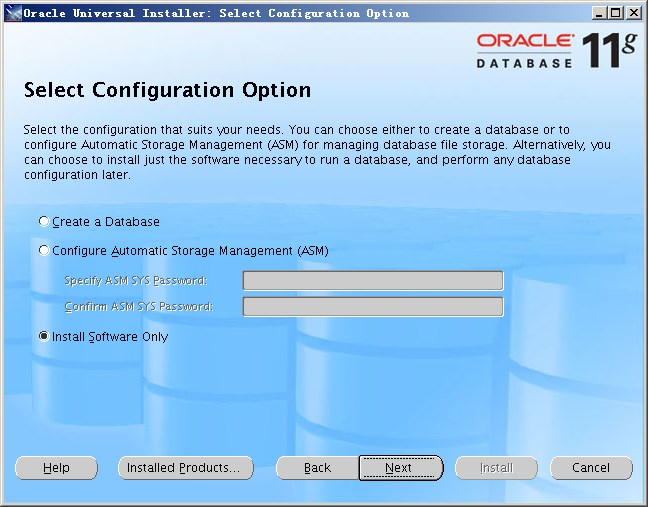

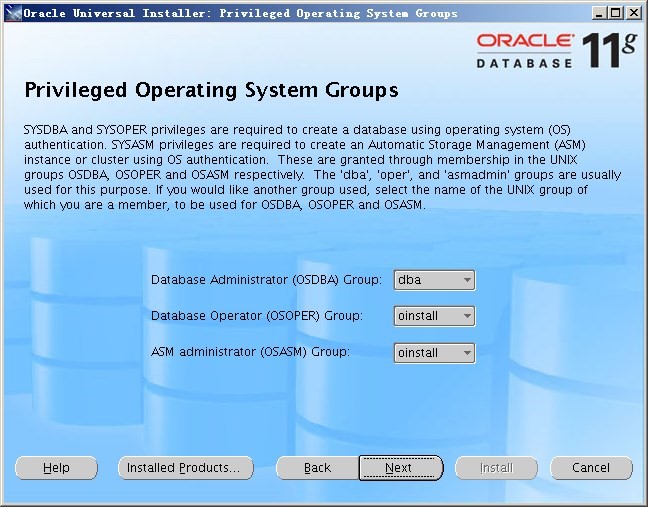

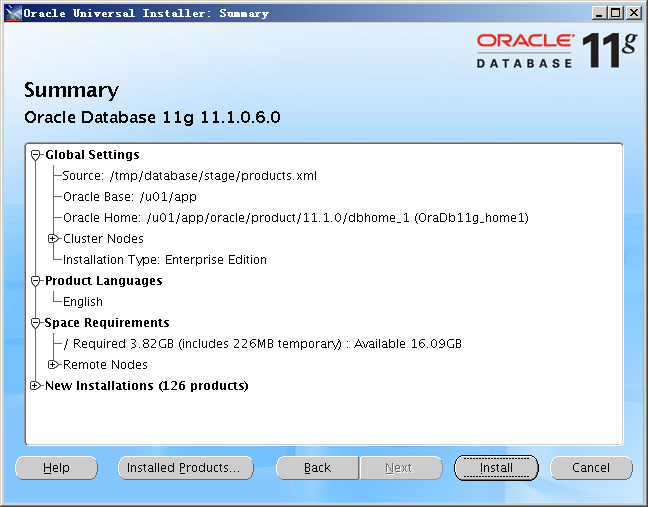

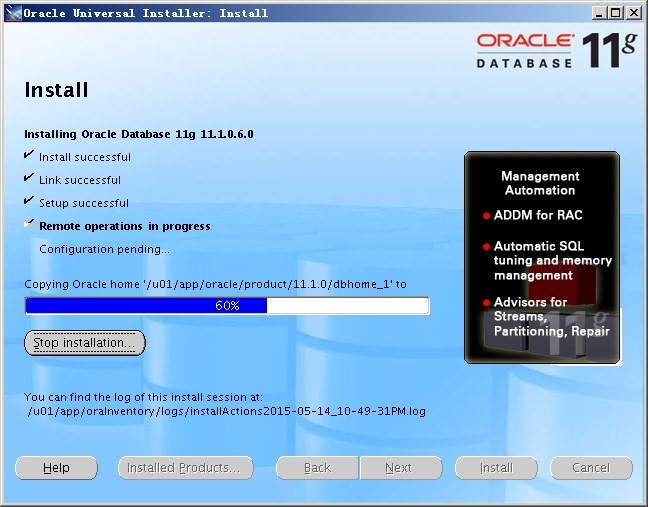

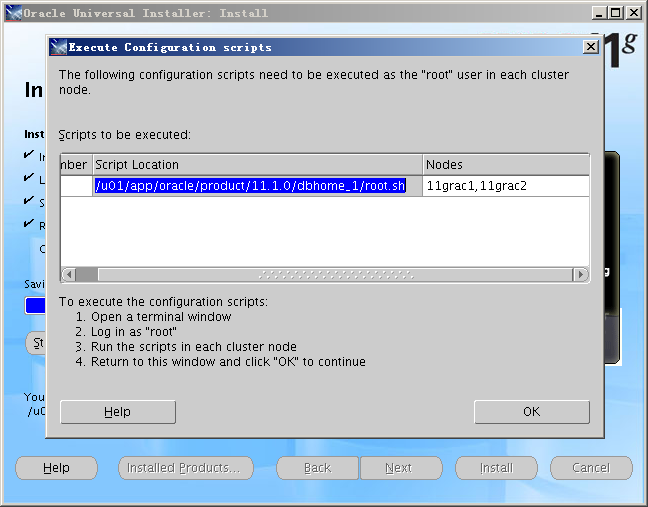

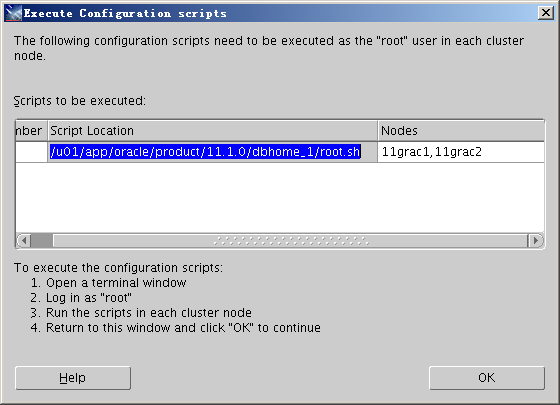

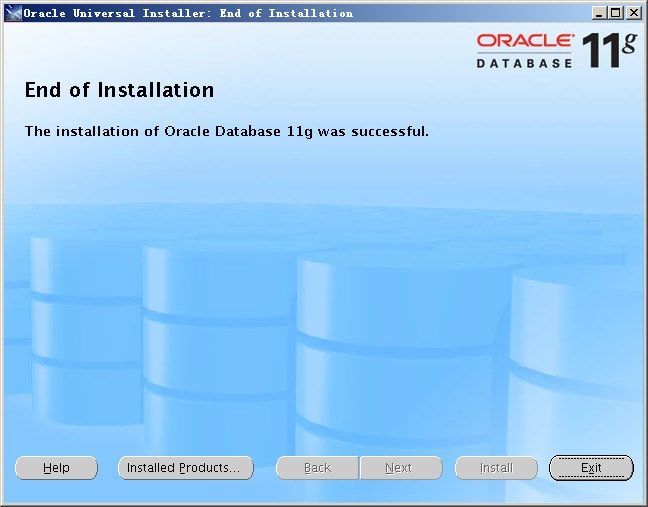

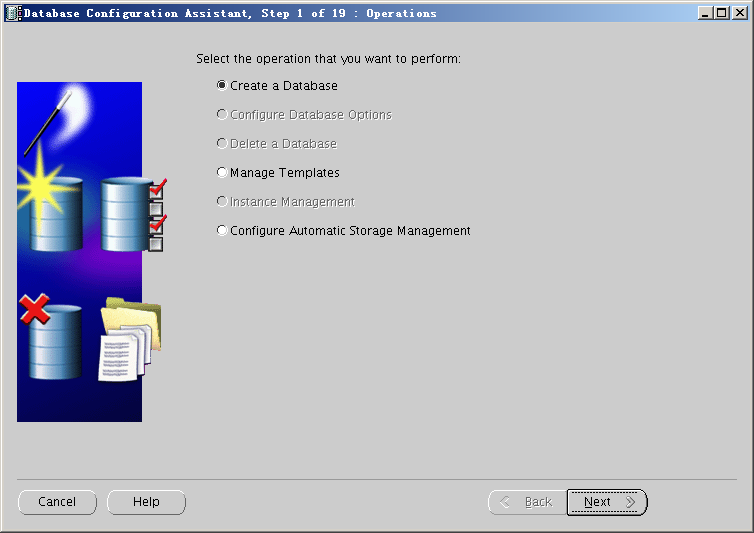

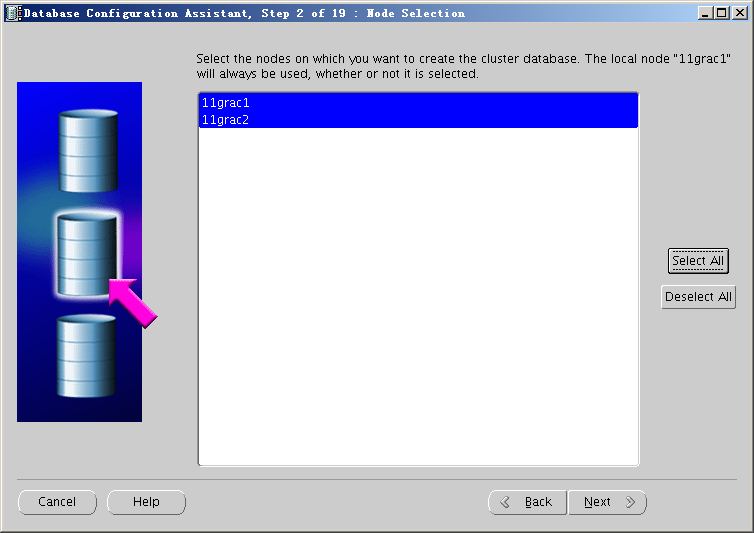

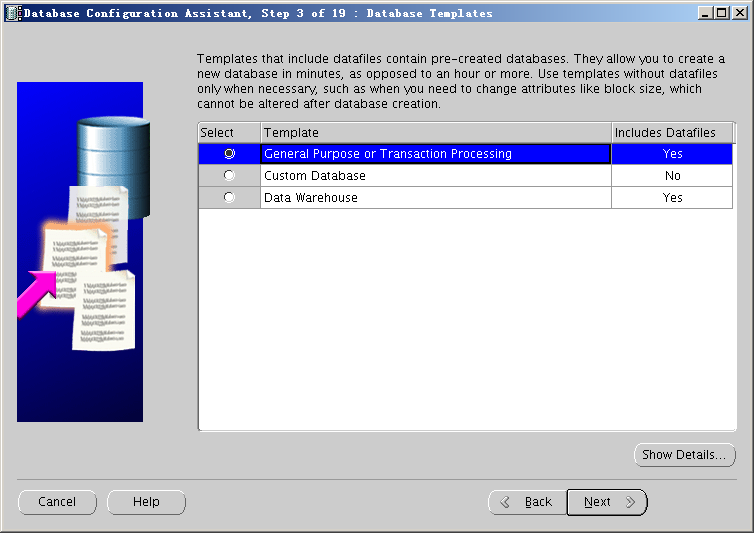

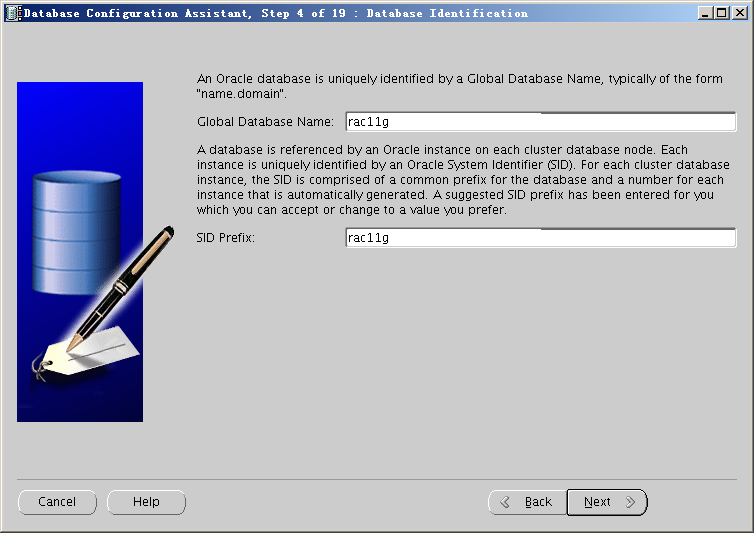

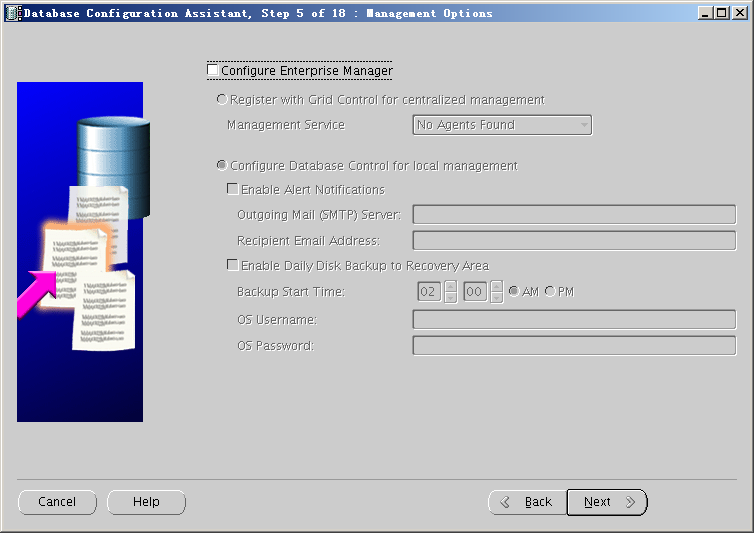

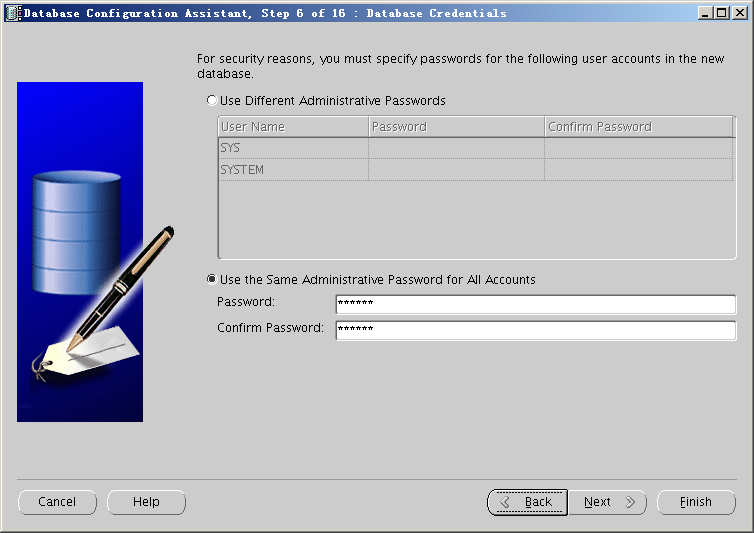

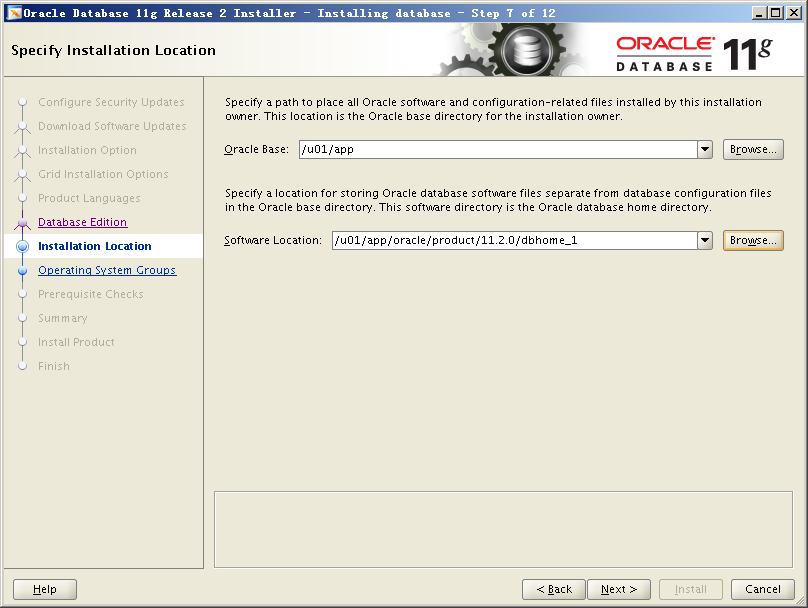

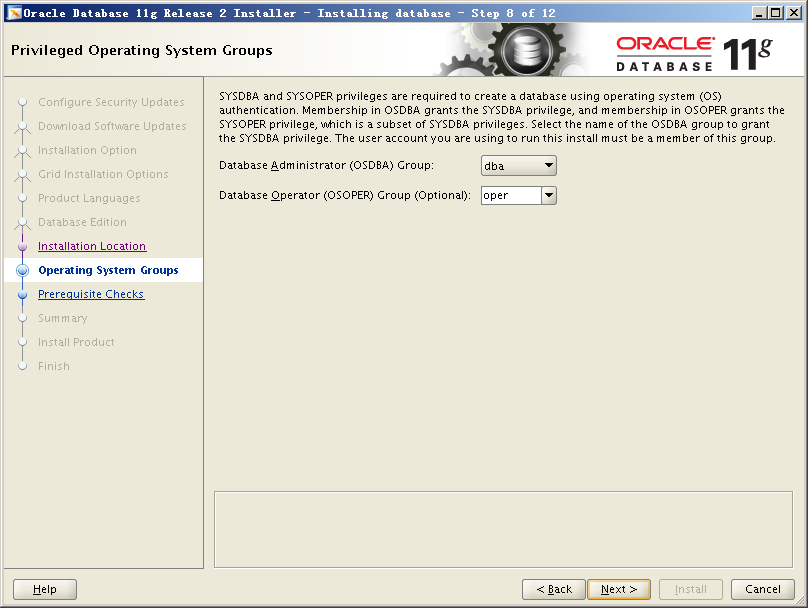

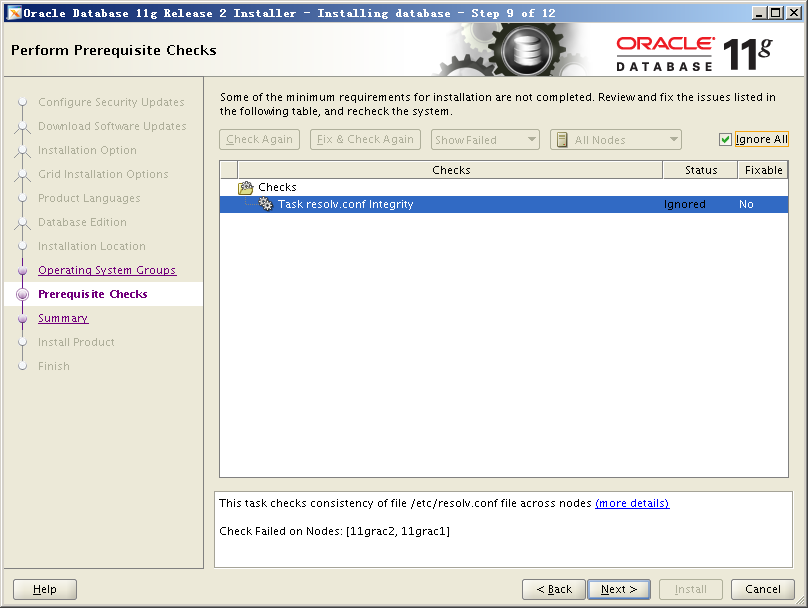

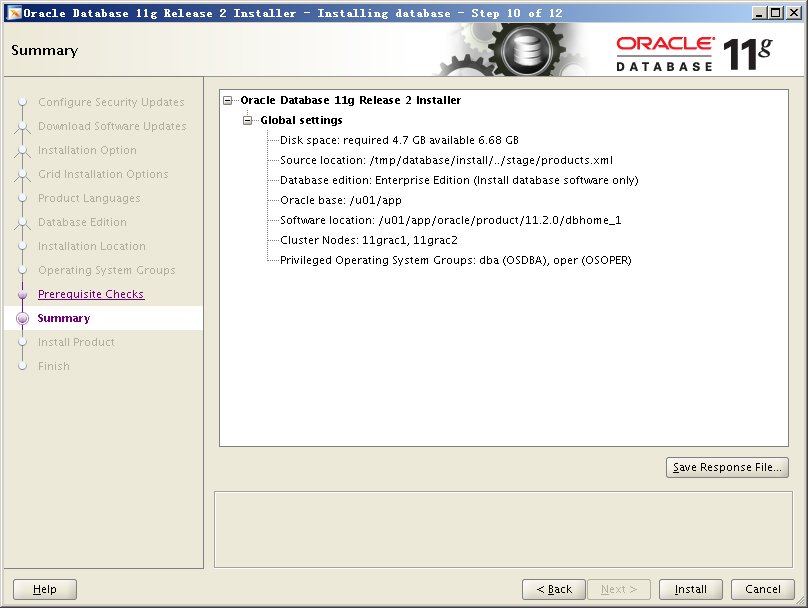

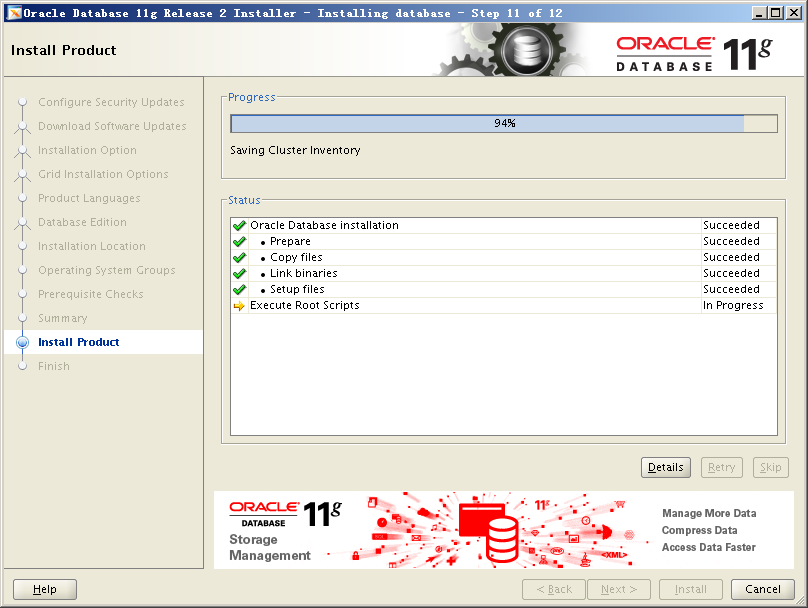

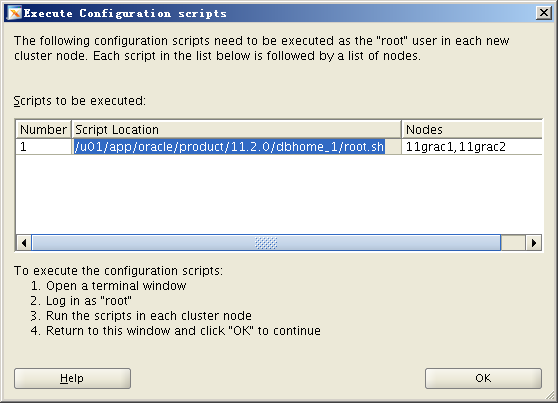

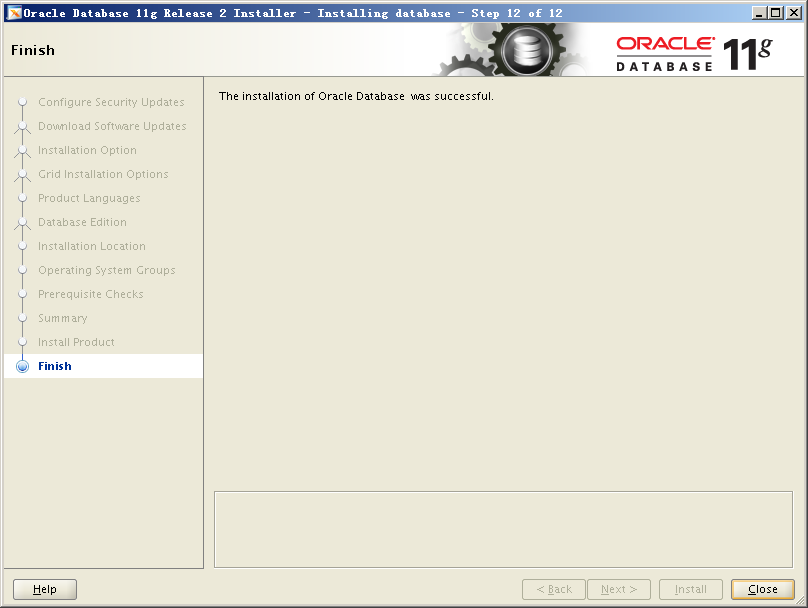

10. Install the 11gR2 Oracle database software

[oracle@11grac1.localdomain:/home/oracle]$ cat /u01/app/oraInventory/ContentsXML/inventory.xml

[oracle@11grac2.localdomain:/home/oracle]$ cat /u01/app/oraInventory/ContentsXML/inventory.xml

[root@11grac1.localdomain:/root]$ /u01/app/oracle/product/11.2.0/dbhome_1/root.sh

The following environment variables are set as:

Enter the full pathname of the local bin directory: [/usr/local/bin]:

Entries will be added to the /etc/oratab file as needed by

[root@11grac2.localdomain:/root]$ /u01/app/oracle/product/11.2.0/dbhome_1/root.sh

The following environment variables are set as:

Enter the full pathname of the local bin directory: [/usr/local/bin]:

Entries will be added to the /etc/oratab file as needed by

11. Upgrade the 11gR1 RAC database to 11gR2 RAC

[oracle@11grac1.localdomain:/u01/app/oracle/product/11.1.0/dbhome_1/dbs]$ cp initrac11g1.ora /u01/app/oracle/product/11.2.0/dbhome_1/dbs/

2) Modify the oracle user's environment variables

# Get the aliases and functions

# User specific environment and startup programs

PATH=$PATH:$HOME/bin

export PATH

export PS1=[$LOGNAME@`hostname`:'$PWD'']$ '

export ORACLE_UNQNAME=rac11g

[oracle@11grac2.localdomain:/home/oracle]$ cat .bash_profile

# Get the aliases and functions

# User specific environment and startup programs

PATH=$PATH:$HOME/bin

export PATH

export PS1=[$LOGNAME@`hostname`:'$PWD'']$ '

export ORACLE_UNQNAME=rac11g

[oracle@11grac1.localdomain:/home/oracle]$ source .bash_profile

3) Change the initialization parameter cluster_database

SQL*Plus: Release 11.2.0.4.0 Production on Mon May 18 13:44:24 2015

Copyright (c) 1982, 2013, Oracle. All rights reserved.

Connected to an idle instance.

SQL> create pfile='/tmp/pfile.ora' from spfile='+DATA/rac11g/spfilerac11g.ora';

File created.

SQL> exit

[oracle@11grac1.localdomain:/tmp]$ sqlplus / as sysdba

SQL*Plus: Release 11.2.0.4.0 Production on Mon May 18 13:46:52 2015

Copyright (c) 1982, 2013, Oracle. All rights reserved.

Connected to an idle instance.

SQL> create spfile='+DATA/rac11g/spfilerac11g.ora' from pfile='/tmp/pfile.ora';

File created.
4) Run the pre-upgrade check script

[oracle@11grac1.localdomain:/home/oracle]$ echo $ORACLE_HOME

SQL*Plus: Release 11.1.0.7.0 - Production on Mon May 18 15:27:18 2015

Copyright (c) 1982, 2008, Oracle. All rights reserved.

Connected to an idle instance.

SQL> startup upgrade pfile='/tmp/pfile.ora';

-- The alert log shows the following:

-- If you skip the pre-upgrade check script and upgrade directly, the following error appears:

SQL*Plus: Release 11.2.0.4.0 Production on Tue May 19 00:42:05 2015

Copyright (c) 1982, 2013, Oracle. All rights reserved.

Connected to an idle instance.

SQL> startup upgrade

Total System Global Area 626327552 bytes

no rows selected

DOC>######################################################################

no rows selected

DOC>#######################################################################

no rows selected

DOC>#######################################################################

no rows selected

DOC>#######################################################################

no rows selected

DOC>#######################################################################

no rows selected

DOC>#######################################################################

Session altered.

Table created.

Table altered.

no rows selected

DOC>#######################################################################

Disconnected from Oracle Database 11g Enterprise Edition Release 11.2.0.4.0 - 64bit Production

The cause is fairly clear from the error: the upgrade stops at the time zone check SELECT TO_NUMBER('MUST_BE_SAME_TIMEZONE_FILE_VERSION'), and the DOC notes for the time zone checks say: "Revert to the original oracle home and start the database. Run pre-upgrade tool against the database."

-- Check and adjust accordingly

PLATFORM_ID PLATFORM_NAME EDITION TZ_VERSION

SQL> select version from v$timezone_file;

VERSION

SQL> select platform_id from v$database;

PLATFORM_ID

SQL> select platform_name from v$database;

PLATFORM_NAME

SQL> update sys.registry$database set TZ_VERSION=14 where PLATFORM_ID=13;

1 row updated.

SQL> commit;

Commit complete.

5) After updating sys.registry$database, run the upgrade script catupgrd.sql directly again; this time it succeeds

... output omitted ...

Oracle Database 11.2 Post-Upgrade Status Tool 05-19-2015 00:30:30

PL/SQL procedure successfully completed.

SQL> Commit complete.

SQL> shutdown immediate;

6) Start the database normally and run the catuppst.sql script

SQL*Plus: Release 11.2.0.4.0 Production on Tue May 19 00:52:31 2015

Copyright (c) 1982, 2013, Oracle. All rights reserved.

Connected to an idle instance.

SQL> startup

Total System Global Area 839282688 bytes

7) Recompile invalid objects by running utlrp.sql

Function created.

PL/SQL procedure successfully completed.

Function dropped.

PL/SQL procedure successfully completed.

8) Make sure the listener runs from the 11gR2 Grid home

LSNRCTL for Linux: Version 11.2.0.4.0 - Production on 19-MAY-2015 01:39:38

Copyright (c) 1991, 2013, Oracle. All rights reserved.

Connecting to (ADDRESS=(PROTOCOL=tcp)(HOST=)(PORT=1521))

9) After the upgrade, update /etc/oratab on both nodes

[oracle@11grac2.localdomain:/home/oracle]$ tail -f /etc/oratab

10) Set cluster_database=true

NAME TYPE VALUE

System altered.

SQL> shutdown immediate;

11) Check the current cluster resource and service status

12. Reconfigure the database, instances, and services to be managed by Grid Infrastructure

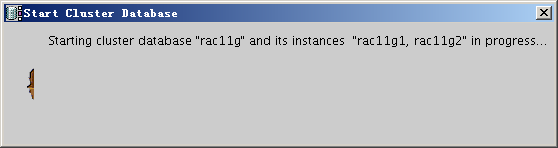

6) Start the database instances and services

9) Check the database status

11) Check the OPatch version

Oracle Home : /u01/app/11.2.0/grid

Lsinventory Output file location : /u01/app/11.2.0/grid/cfgtoollogs/opatch/lsinv/lsinventory2015-05-19_02-50-56AM.txt

--------------------------------------------------------------------------------

Oracle Grid Infrastructure 11g 11.2.0.4.0

There are no Interim patches installed in this Oracle Home.

Rac system comprising of multiple nodes

--------------------------------------------------------------------------------

OPatch succeeded.

[oracle@11grac1.localdomain:/u01/app/oracle/product/11.2.0/dbhome_1/OPatch]$ ./opatch lsinventory

Oracle Home : /u01/app/oracle/product/11.2.0/dbhome_1

Lsinventory Output file location : /u01/app/oracle/product/11.2.0/dbhome_1/cfgtoollogs/opatch/lsinv/lsinventory2015-05-19_02-50-27AM.txt

--------------------------------------------------------------------------------

Oracle Database 11g 11.2.0.4.0

There are no Interim patches installed in this Oracle Home.

Rac system comprising of multiple nodes

--------------------------------------------------------------------------------

OPatch succeeded.

[grid@11grac2 OPatch]$ ./opatch lspatches

Oracle Home : /u01/app/11.2.0/grid

Lsinventory Output file location : /u01/app/11.2.0/grid/cfgtoollogs/opatch/lsinv/lsinventory2015-05-19_02-53-58AM.txt

--------------------------------------------------------------------------------

Oracle Grid Infrastructure 11g 11.2.0.4.0

There are no Interim patches installed in this Oracle Home.

Rac system comprising of multiple nodes

--------------------------------------------------------------------------------

OPatch succeeded.

[oracle@11grac2.localdomain:/u01/app/oracle/product/11.2.0/dbhome_1/OPatch]$ ./opatch lsinventory

Oracle Home : /u01/app/oracle/product/11.2.0/dbhome_1

Lsinventory Output file location : /u01/app/oracle/product/11.2.0/dbhome_1/cfgtoollogs/opatch/lsinv/lsinventory2015-05-19_02-54-07AM.txt

--------------------------------------------------------------------------------

Oracle Database 11g 11.2.0.4.0

There are no Interim patches installed in this Oracle Home.

Rac system comprising of multiple nodes

--------------------------------------------------------------------------------

OPatch succeeded.

13. Summary


SQL> select name,state,total_mb,free_mb from v$asm_diskgroup;

NAME STATE TOTAL_MB FREE_MB

------------------------------ ----------- ---------- ----------

OVDF MOUNTED 8192 7796

RFA MOUNTED 2048 1706

DATA MOUNTED 5120 3121

SQL> select name,state,total_mb,free_mb,path from v$asm_disk;

NAME STATE TOTAL_MB FREE_MB PATH

---------- -------- ---------- ---------- ------------------------------

NORMAL 0 0 /dev/asm-diskd

NORMAL 0 0 /dev/asm-diskc

NORMAL 0 0 /dev/asm-diskb

OVDF_0000 NORMAL 8192 7796 /dev/asm-diskg

RFA_0000 NORMAL 2048 1706 /dev/asm-diskf

DATA_0000 NORMAL 5120 3121 /dev/asm-diske

The first three rows of V$ASM_DISK, which have an empty NAME, are the disks that held the OCR and voting disk under 11gR1 RAC; they can be ignored.

[grid@11grac1 ~]$ crsctl status res -t

--------------------------------------------------------------------------------

NAME TARGET STATE SERVER STATE_DETAILS

--------------------------------------------------------------------------------

Local Resources

--------------------------------------------------------------------------------

ora.DATA.dg

ONLINE ONLINE 11grac1

ONLINE ONLINE 11grac2

ora.LISTENER.lsnr

ONLINE ONLINE 11grac1

ONLINE ONLINE 11grac2

ora.OVDF.dg

ONLINE ONLINE 11grac1

ONLINE ONLINE 11grac2

ora.RFA.dg

ONLINE ONLINE 11grac1

ONLINE ONLINE 11grac2

ora.asm

ONLINE ONLINE 11grac1 Started

ONLINE ONLINE 11grac2 Started

ora.gsd

OFFLINE OFFLINE 11grac1

OFFLINE OFFLINE 11grac2

ora.net1.network

ONLINE ONLINE 11grac1

ONLINE ONLINE 11grac2

ora.ons

ONLINE ONLINE 11grac1

ONLINE ONLINE 11grac2

ora.registry.acfs

ONLINE ONLINE 11grac1

ONLINE ONLINE 11grac2

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.11grac1.vip

1 ONLINE ONLINE 11grac1

ora.11grac2.vip

1 ONLINE ONLINE 11grac2

ora.LISTENER_SCAN1.lsnr

1 ONLINE ONLINE 11grac1

ora.LISTENER_SCAN2.lsnr

1 ONLINE ONLINE 11grac1

ora.LISTENER_SCAN3.lsnr

1 ONLINE ONLINE 11grac2

ora.cvu

1 ONLINE ONLINE 11grac1

ora.oc4j

1 ONLINE ONLINE 11grac1

ora.scan1.vip

1 ONLINE ONLINE 11grac1

ora.scan2.vip

1 ONLINE ONLINE 11grac1

ora.scan3.vip

1 ONLINE ONLINE 11grac2

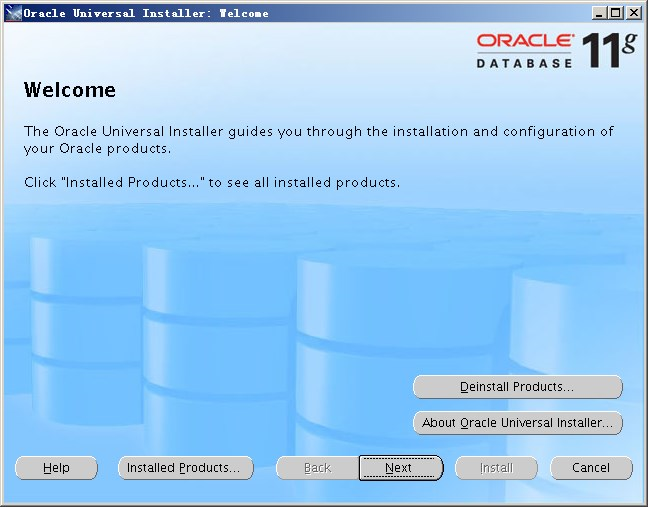

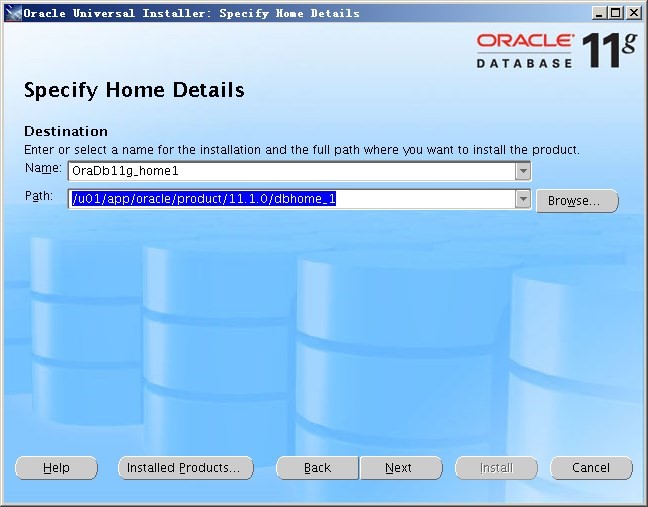

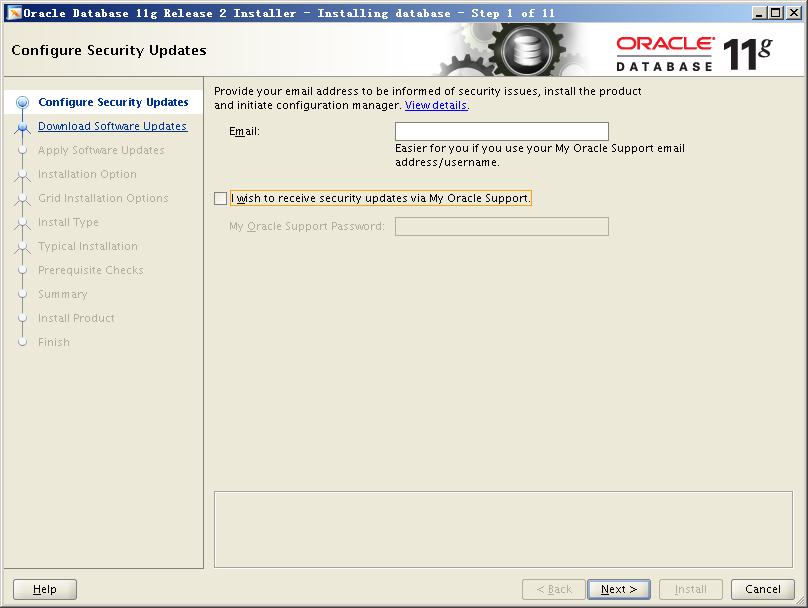

[root@11grac1.localdomain:/tmp]$ unzip p13390677_112040_Linux-x86-64_1of7.zip

[root@11grac1.localdomain:/tmp]$ unzip p13390677_112040_Linux-x86-64_2of7.zip

[root@11grac1.localdomain:/root]$ su – oracle

[oracle@11grac1.localdomain:/home/oracle]$ cd /tmp/database/

[oracle@11grac1.localdomain:/tmp/database]$ ls -l

total 60

drwxr-xr-x 4 root root 4096 Aug 27 2013 install

-rw-r--r-- 1 root root 30016 Aug 27 2013 readme.html

drwxr-xr-x 2 root root 4096 Aug 27 2013 response

drwxr-xr-x 2 root root 4096 Aug 27 2013 rpm

-rwxr-xr-x 1 root root 3267 Aug 27 2013 runInstaller

drwxr-xr-x 2 root root 4096 Aug 27 2013 sshsetup

drwxr-xr-x 14 root root 4096 Aug 27 2013 stage

-rw-r--r-- 1 root root 500 Aug 27 2013 welcome.html

[oracle@11grac1.localdomain:/tmp/database]$ export DISPLAY=192.168.56.1:0.0

[oracle@11grac1.localdomain:/tmp/database]$ ./runInstaller

-- If OUI cannot find the cluster nodes when installing the RAC database software, fix it as follows:

<?xml version="1.0" standalone="yes" ?>

<!-- Copyright (c) 1999, 2013, Oracle and/or its affiliates.

All rights reserved. -->

<!-- Do not modify the contents of this file by hand. -->

<INVENTORY>

<VERSION_INFO>

<SAVED_WITH>11.2.0.4.0</SAVED_WITH>

<MINIMUM_VER>2.1.0.6.0</MINIMUM_VER>

</VERSION_INFO>

<HOME_LIST>

<HOME NAME="OraCrs11g_home" LOC="/u01/app/crs/11.1.0/crshome_1" TYPE="O" IDX="1" CRS="true">

<NODE_LIST>

<NODE NAME="11grac1"/>

<NODE NAME="11grac2"/>

</NODE_LIST>

</HOME>

<HOME NAME="OraDb11g_home1" LOC="/u01/app/oracle/product/11.1.0/dbhome_1" TYPE="O" IDX="2">

<NODE_LIST>

<NODE NAME="11grac1"/>

<NODE NAME="11grac2"/>

</NODE_LIST>

</HOME>

<HOME NAME="Ora11g_gridinfrahome1" LOC="/u01/app/11.2.0/grid" TYPE="O" IDX="3" CRS="true">

<NODE_LIST>

<NODE NAME="11grac1"/>

<NODE NAME="11grac2"/>

</NODE_LIST>

</HOME>

</HOME_LIST>

<COMPOSITEHOME_LIST>

</COMPOSITEHOME_LIST>

</INVENTORY>

<?xml version="1.0" standalone="yes" ?>

<!-- Copyright (c) 1999, 2013, Oracle and/or its affiliates.

All rights reserved. -->

<!-- Do not modify the contents of this file by hand. -->

<INVENTORY>

<VERSION_INFO>

<SAVED_WITH>11.2.0.4.0</SAVED_WITH>

<MINIMUM_VER>2.1.0.6.0</MINIMUM_VER>

</VERSION_INFO>

<HOME_LIST>

<HOME NAME="OraCrs11g_home" LOC="/u01/app/crs/11.1.0/crshome_1" TYPE="O" IDX="1" CRS="true">

<NODE_LIST>

<NODE NAME="11grac1"/>

<NODE NAME="11grac2"/>

</NODE_LIST>

</HOME>

<HOME NAME="OraDb11g_home1" LOC="/u01/app/oracle/product/11.1.0/dbhome_1" TYPE="O" IDX="2">

<NODE_LIST>

<NODE NAME="11grac1"/>

<NODE NAME="11grac2"/>

</NODE_LIST>

</HOME>

<HOME NAME="Ora11g_gridinfrahome1" LOC="/u01/app/11.2.0/grid" TYPE="O" IDX="3" CRS="true">

<NODE_LIST>

<NODE NAME="11grac1"/>

<NODE NAME="11grac2"/>

</NODE_LIST>

</HOME>

</HOME_LIST>

<COMPOSITEHOME_LIST>

</COMPOSITEHOME_LIST>

</INVENTORY>

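-- On both nodes, delete the highlighted part above and rerun the installer. (The highlighting did not survive in this copy; it most likely marked CRS="true" on the old OraCrs11g_home entry, leaving the 11.2 grid home as the only home flagged as the Clusterware home.)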
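Assuming the part to delete is the CRS="true" attribute of the old OraCrs11g_home entry (the original highlighting is not preserved here), the edit can be scripted with sed. A sketch only: run it as the inventory owner on both nodes. It keeps a .bak copy, and `unset_crs_flag` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# unset_crs_flag: hypothetical helper.
# Usage: unset_crs_flag INVENTORY_XML HOME_LOC
# Drops the CRS="true" attribute from the <HOME> line whose LOC equals
# HOME_LOC; every other home, including the 11.2 grid home, is untouched.
unset_crs_flag() {
  local inv="$1" loc="$2"
  [ -f "$inv" ] || { echo "no such file: $inv" >&2; return 1; }
  cp -p "$inv" "$inv.bak"
  # "|" is used as the address delimiter because LOC contains slashes.
  sed -i "\|LOC=\"${loc}\"| s/ CRS=\"true\"//" "$inv"
}

# Example (both nodes):
# unset_crs_flag /u01/app/oraInventory/ContentsXML/inventory.xml /u01/app/crs/11.1.0/crshome_1
```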

Performing root user operation for Oracle 11g

ORACLE_OWNER= oracle

ORACLE_HOME= /u01/app/oracle/product/11.2.0/dbhome_1

The contents of “dbhome” have not changed. No need to overwrite.

The contents of “oraenv” have not changed. No need to overwrite.

The contents of “coraenv” have not changed. No need to overwrite.

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Finished product-specific root actions.

Performing root user operation for Oracle 11g

ORACLE_OWNER= oracle

ORACLE_HOME= /u01/app/oracle/product/11.2.0/dbhome_1

The contents of “dbhome” have not changed. No need to overwrite.

The contents of “oraenv” have not changed. No need to overwrite.

The contents of “coraenv” have not changed. No need to overwrite.

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Finished product-specific root actions.

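1) Copy the initialization parameter files, password files, and network configuration files from the 11gR1 RAC homes to the corresponding 11gR2 directories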

[oracle@11grac1.localdomain:/u01/app/oracle/product/11.1.0/dbhome_1/network/admin]$ cp tnsnames.ora /u01/app/oracle/product/11.2.0/dbhome_1/network/admin/

[oracle@11grac2.localdomain:/u01/app/oracle/product/11.1.0/dbhome_1/network/admin]$ cp tnsnames.ora /u01/app/oracle/product/11.2.0/dbhome_1/network/admin/

[oracle@11grac1.localdomain:/u01/app/oracle/product/11.2.0/dbhome_1/network/admin]$ ll

total 12

drwxr-xr-x 2 oracle oinstall 4096 May 18 12:20 samples

-rw-r--r-- 1 oracle oinstall 381 Dec 17 2012 shrept.lst

-rw-r----- 1 oracle oinstall 1195 May 18 13:03 tnsnames.ora

[oracle@11grac2.localdomain:/u01/app/oracle/product/11.2.0/dbhome_1/network/admin]$ ll

total 12

drwxr-xr-x 2 oracle oinstall 4096 May 18 12:46 samples

-rw-r--r-- 1 oracle oinstall 381 May 18 12:46 shrept.lst

-rw-r----- 1 oracle oinstall 1195 May 18 13:04 tnsnames.ora

[oracle@11grac1.localdomain:/u01/app/oracle/product/11.1.0/dbhome_1/dbs]$ cp orapwrac11g1 /u01/app/oracle/product/11.2.0/dbhome_1/dbs/

[oracle@11grac2.localdomain:/u01/app/oracle/product/11.1.0/dbhome_1/dbs]$ cp initrac11g2.ora /u01/app/oracle/product/11.2.0/dbhome_1/dbs/

[oracle@11grac2.localdomain:/u01/app/oracle/product/11.1.0/dbhome_1/dbs]$ cp orapwrac11g2 /u01/app/oracle/product/11.2.0/dbhome_1/dbs/

[oracle@11grac1.localdomain:/u01/app/oracle/product/11.2.0/dbhome_1/dbs]$ ll

total 12

-rw-r--r-- 1 oracle oinstall 2851 May 15 2009 init.ora

-rw-r----- 1 oracle oinstall 39 May 18 13:09 initrac11g1.ora

-rw-r----- 1 oracle oinstall 1536 May 18 13:09 orapwrac11g1

[oracle@11grac2.localdomain:/u01/app/oracle/product/11.2.0/dbhome_1/dbs]$ ll

total 12

-rw-r--r-- 1 oracle oinstall 2851 May 18 12:36 init.ora

-rw-r----- 1 oracle oinstall 39 May 18 13:10 initrac11g2.ora

-rw-r----- 1 oracle oinstall 1536 May 18 13:10 orapwrac11g2
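The copies in step 1 follow a fixed per-node pattern: init<SID>.ora and orapw<SID> under dbs/, and tnsnames.ora under network/admin/. They can therefore be scripted. A minimal sketch, run as oracle on each node with that node's SID; `copy_db_config` is a hypothetical name.

```shell
#!/usr/bin/env bash
# copy_db_config: hypothetical helper for step 1 on one node.
# Usage: copy_db_config OLD_HOME NEW_HOME SID
# Copies dbs/init<SID>.ora, dbs/orapw<SID> and network/admin/tnsnames.ora
# from the old home to the new one, preserving modes and timestamps.
# Returns non-zero if any source file was missing.
copy_db_config() {
  local old="$1" new="$2" sid="$3" f rc=0
  for f in "dbs/init${sid}.ora" "dbs/orapw${sid}" "network/admin/tnsnames.ora"; do
    if [ -f "$old/$f" ]; then
      mkdir -p "$(dirname "$new/$f")"
      cp -p "$old/$f" "$new/$f"
    else
      echo "skipped (not found): $old/$f" >&2
      rc=1
    fi
  done
  return "$rc"
}

# Node 1:
# copy_db_config /u01/app/oracle/product/11.1.0/dbhome_1 /u01/app/oracle/product/11.2.0/dbhome_1 rac11g1
# Node 2: same homes, SID rac11g2
```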

[oracle@11grac1.localdomain:/home/oracle]$ cat .bash_profile

# .bash_profile

if [ -f ~/.bashrc ]; then

. ~/.bashrc

fi

export ORACLE_SID=rac11g1

export ORACLE_BASE=/u01/app

#export CRS_HOME=$ORACLE_BASE/crs/11.1.0/crshome_1

export ORACLE_HOME=$ORACLE_BASE/oracle/product/11.2.0/dbhome_1

export LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib:/usr/share/lib

export CLASSPATH=$ORACLE_HOME/jre:$ORACLE_HOME/jlib:$ORACLE_HOME/jdbc/lib:$ORACLE_HOME/rdbms/jlib:$ORACLE_HOME/network/jlib

export PATH=$ORACLE_HOME/bin:$PATH

# .bash_profile

if [ -f ~/.bashrc ]; then

. ~/.bashrc

fi

export ORACLE_SID=rac11g2

export ORACLE_BASE=/u01/app

#export CRS_HOME=$ORACLE_BASE/crs/11.1.0/crshome_1

export ORACLE_HOME=$ORACLE_BASE/oracle/product/11.2.0/dbhome_1

export LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib:/usr/share/lib

export CLASSPATH=$ORACLE_HOME/jre:$ORACLE_HOME/jlib:$ORACLE_HOME/jdbc/lib:$ORACLE_HOME/rdbms/jlib:$ORACLE_HOME/network/jlib

export PATH=$ORACLE_HOME/bin:$PATH

[oracle@11grac2.localdomain:/home/oracle]$ source .bash_profile
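Step 2 comes down to pointing ORACLE_HOME in the oracle user's .bash_profile at the 11.2 home on both nodes. A sketch of that edit with sed, assuming the profile layout shown above with a single `export ORACLE_HOME=` line; `switch_profile_home` is a hypothetical name.

```shell
#!/usr/bin/env bash
# switch_profile_home: hypothetical helper.
# Usage: switch_profile_home PROFILE OLD_SUFFIX NEW_SUFFIX
# Replaces OLD_SUFFIX with NEW_SUFFIX only on the "export ORACLE_HOME=" line,
# keeping a .bak copy; commented lines such as #export CRS_HOME stay as-is.
switch_profile_home() {
  local profile="$1" old="$2" new="$3"
  cp -p "$profile" "$profile.bak"
  sed -i "/^export ORACLE_HOME=/ s#${old}#${new}#" "$profile"
}

# Example, as oracle on each node, then re-read the profile:
# switch_profile_home ~/.bash_profile 11.1.0/dbhome_1 11.2.0/dbhome_1
# source ~/.bash_profile
```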

[oracle@11grac1.localdomain:/home/oracle]$ sqlplus / as sysdba

Disconnected

[oracle@11grac1.localdomain:/home/oracle]$ cd /tmp/

[oracle@11grac1.localdomain:/tmp]$ vi pfile.ora

-- Change the cluster_database parameter to false

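Note that the pre-upgrade check must be run against the database started from the original 11gR1 RAC database software. Upgrading an older database to 11.2.0.4 requires running the pre-upgrade check script ORACLE_HOME/rdbms/admin/utlu112i.sql first (the script ships in the new 11.2 home); see the My Oracle Support (MetaLink) documents Doc ID 837570.1 and Note 884522.1.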
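The intended pre-upgrade step, which could not be completed in this test, looks roughly like this: copy utlu112i.sql out of the 11.2 home and run it with the 11.1 home's SQL*Plus against the still-11.1 database. A minimal sketch assuming the home paths used in this document, a running 11.1 instance, and OS authentication as SYSDBA. `run_preupgrade`, its `-n` dry-run flag, and the /tmp file names are hypothetical.

```shell
#!/usr/bin/env bash
# run_preupgrade: hypothetical sketch, not an Oracle-supplied script.
# Usage: run_preupgrade OLD_HOME NEW_HOME SID [-n]
# Copies utlu112i.sql from the new 11.2 home to /tmp and runs it with the
# old 11.1 home's sqlplus, writing the report to a log in /tmp.
# With -n it only prints what it would do.
run_preupgrade() {
  local old="$1" new="$2" sid="$3" dry="${4:-}"
  local src="$new/rdbms/admin/utlu112i.sql"
  local dst="/tmp/utlu112i.sql"
  local log="/tmp/utlu112i_${sid}.log"

  if [ "$dry" = "-n" ]; then
    echo "copy: $src -> $dst"
    echo "run: $old/bin/sqlplus as sysdba (ORACLE_SID=$sid) @$dst"
    echo "log: $log"
    return 0
  fi

  cp "$src" "$dst" || return 1
  # The environment must point at the OLD home while the script runs.
  ORACLE_HOME="$old" ORACLE_SID="$sid" PATH="$old/bin:$PATH" \
    "$old/bin/sqlplus" -S "/ as sysdba" > "$log" <<EOF
@$dst
EXIT
EOF
}

# Example (node 1):
# run_preupgrade /u01/app/oracle/product/11.1.0/dbhome_1 /u01/app/oracle/product/11.2.0/dbhome_1 rac11g1 -n
```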

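Start the database with the original 11gR1 RAC software in UPGRADE mode, with the environment variables set for the original 11gR1 database ORACLE_HOME, and likewise set cluster_database=false in the 11gR1 parameter file. (If the source version is 10g, also note that 11gR2 automatically creates the hidden parameter "__oracle_base", which 10g does not support; create a pfile and comment that parameter out.)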
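The pfile edits described here (cluster_database=false, and for a 10g source, commenting out __oracle_base) can be applied with sed. A minimal sketch, assuming the one-parameter-per-line pfile format shown below; `prep_upgrade_pfile` and its `--from-10g` flag are hypothetical.

```shell
#!/usr/bin/env bash
# prep_upgrade_pfile: hypothetical helper for the pfile edits described above.
# Usage: prep_upgrade_pfile PFILE [--from-10g]
# Sets every <scope>.cluster_database=TRUE entry (any case) to false without
# touching cluster_database_instances; with --from-10g it also comments out
# the __oracle_base lines. A .bak copy is kept.
prep_upgrade_pfile() {
  local pfile="$1" from10g="${2:-}"
  cp -p "$pfile" "$pfile.bak"
  sed -i -E 's/^([^#]*\.cluster_database=)[Tt][Rr][Uu][Ee]/\1false/' "$pfile"
  if [ "$from10g" = "--from-10g" ]; then
    # Lines that already start with # are left alone.
    sed -i -E 's/^([^#]*\.__oracle_base=)/#\1/' "$pfile"
  fi
}

# Example: prep_upgrade_pfile /tmp/pfile.ora
```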

-- Start the 11gR1 database with the following modified parameter file

[oracle@11grac1.localdomain:/tmp]$ cat pfile.ora

rac11g1.__db_cache_size=251658240

rac11g2.__db_cache_size=268435456

rac11g1.__java_pool_size=16777216

rac11g2.__java_pool_size=4194304

rac11g1.__large_pool_size=4194304

rac11g2.__large_pool_size=4194304

rac11g1.__oracle_base='/u01/app'#ORACLE_BASE set from environment

rac11g2.__oracle_base='/u01/app'#ORACLE_BASE set from environment

rac11g1.__pga_aggregate_target=348127232

rac11g2.__pga_aggregate_target=348127232

rac11g1.__sga_target=494927872

rac11g2.__sga_target=494927872

rac11g1.__shared_io_pool_size=0

rac11g2.__shared_io_pool_size=0

rac11g1.__shared_pool_size=213909504

rac11g2.__shared_pool_size=209715200

rac11g1.__streams_pool_size=0

rac11g2.__streams_pool_size=0

*.audit_file_dest='/u01/app/admin/rac11g/adump'

*.audit_trail='db'

*.cluster_database_instances=2

*.cluster_database=false

*.compatible='11.1.0.0.0'

*.control_files='+DATA/rac11g/controlfile/current.260.879941791','+RFA/rac11g/controlfile/current.256.879941793'

*.db_block_size=8192

*.db_create_file_dest='+DATA'

*.db_domain=''

*.db_name='rac11g'

*.db_recovery_file_dest='+RFA'

*.db_recovery_file_dest_size=2147483648

*.diagnostic_dest='/u01/app'

*.dispatchers='(PROTOCOL=TCP) (SERVICE=rac11gXDB)'

rac11g1.instance_number=1

rac11g2.instance_number=2

rac11g2.local_listener='LISTENER_RAC11G2'

rac11g1.local_listener='LISTENER_RAC11G1'

*.log_archive_dest_1='LOCATION=+DATA/'

*.log_archive_format='%t_%s_%r.dbf'

*.memory_target=839909376

*.open_cursors=300

*.processes=150

*.remote_listener='LISTENERS_RAC11G'

*.remote_login_passwordfile='exclusive'

rac11g2.thread=2

rac11g1.thread=1

rac11g1.undo_tablespace='UNDOTBS1'

rac11g2.undo_tablespace='UNDOTBS2'

/u01/app/oracle/product/11.1.0/dbhome_1

[oracle@11grac1.localdomain:/home/oracle]$ sqlplus / as sysdba

ORA-29702: error occurred in Cluster Group Service operation

[oracle@11grac1.localdomain:/home/oracle]$ cd /u01/app/diag/rdbms/rac11g/rac11g1/trace/

[oracle@11grac1.localdomain:/u01/app/diag/rdbms/rac11g/rac11g1/trace]$ tail -20f alert_rac11g1.log

CKPT started with pid=16, OS id=11345

Mon May 18 15:27:33 2015

SMON started with pid=17, OS id=11349

Mon May 18 15:27:33 2015

RECO started with pid=18, OS id=11353

Mon May 18 15:27:33 2015

RBAL started with pid=19, OS id=11357

Mon May 18 15:27:33 2015

ASMB started with pid=20, OS id=11361

Errors in file /u01/app/diag/rdbms/rac11g/rac11g1/trace/rac11g1_asmb_11361.trc:

ORA-15077: could not locate ASM instance serving a required diskgroup

ORA-29701: unable to connect to Cluster Manager

Mon May 18 15:27:34 2015

MMON started with pid=21, OS id=11365

starting up 1 dispatcher(s) for network address '(ADDRESS=(PARTIAL=YES)(PROTOCOL=TCP))'...

Mon May 18 15:27:34 2015

MMNL started with pid=20, OS id=11369

starting up 1 shared server(s) …

USER (ospid: 11213): terminating the instance due to error 29702

Instance terminated by USER, pid = 11213

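The plan here was to start the database with the original 11gR1 RAC database software and run the pre-upgrade check script, but startup failed because of ASM: the alert log reports ORA-15077 and ORA-29701, since the ASM disk groups of the original 11gR1 RAC are now managed by the 11gR2 Grid Infrastructure. It might be necessary to fall back to the 11gR1 RAC stack, start the database in UPGRADE mode, and then run the 11gR2 ORACLE_HOME/rdbms/admin/utlu112i.sql, but this did not succeed in testing, so this step was bypassed and the upgrade script catupgrd.sql was run directly.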
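Skipping the pre-upgrade tool makes the time zone check in catupgrd.sql fail. The workaround used in this test amounts to making sys.registry$database.tz_version equal the version reported by v$timezone_file. That is what the MUST_BE_SAME_TIMEZONE_FILE_VERSION check appears to compare, an inference from the check's name and the fix applied, not from reading catupgrd.sql. The sketch below checks that comparison before rerunning catupgrd.sql. It does not replace utlu112i.sql's other checks, and both helper names are hypothetical.

```shell
#!/usr/bin/env bash
# tz_check_sql: hypothetical helper; prints a SQL*Plus script that reports
# the registry time zone version and the time zone file version.
tz_check_sql() {
  cat <<'SQL'
SET HEADING OFF FEEDBACK OFF PAGESIZE 0
SELECT 'REGISTRY_TZ=' || tz_version FROM sys.registry$database;
SELECT 'FILE_TZ=' || version FROM v$timezone_file;
EXIT
SQL
}

# tz_compare: hypothetical helper; reads KEY=VALUE lines on stdin.
# Returns 0 if the two versions match, 1 if they differ,
# 2 if either value is missing (e.g. tz_version is NULL).
tz_compare() {
  local line reg="" file=""
  while IFS= read -r line; do
    line="${line//[[:space:]]/}"        # sqlplus pads output with blanks
    case "$line" in
      REGISTRY_TZ=*) reg="${line#REGISTRY_TZ=}" ;;
      FILE_TZ=*)     file="${line#FILE_TZ=}" ;;
    esac
  done
  [ -n "$reg" ] && [ -n "$file" ] || return 2
  [ "$reg" = "$file" ]
}

# Usage, as oracle with the 11.2 environment and the database started UPGRADE:
# tz_check_sql | sqlplus -S / as sysdba | tz_compare; echo $?
```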

[oracle@11grac1.localdomain:/home/oracle]$ echo $ORACLE_HOME

/u01/app/oracle/product/11.2.0/dbhome_1

[oracle@11grac1.localdomain:/home/oracle]$ sqlplus / as sysdba

ORACLE instance started.

Fixed Size 2255832 bytes

Variable Size 230687784 bytes

Database Buffers 390070272 bytes

Redo Buffers 3313664 bytes

Database mounted.

Database opened.

SQL> @?/rdbms/admin/catupgrd.sql

DOC>#######################################################################

DOC>#######################################################################

DOC>

DOC> The first time this script is run, there should be no error messages

DOC> generated; all normal upgrade error messages are suppressed.

DOC>

DOC> If this script is being re-run after correcting some problem, then

DOC> expect the following error which is not automatically suppressed:

DOC>

DOC> ORA-00001: unique constraint (<constraint_name>) violated

DOC> possibly in conjunction with

DOC> ORA-06512: at “<procedure/function name>”, line NN

DOC>

DOC> These errors will automatically be suppressed by the Database Upgrade

DOC> Assistant (DBUA) when it re-runs an upgrade.

DOC>

DOC>#######################################################################

DOC>#######################################################################

DOC>#

DOC>######################################################################

DOC>######################################################################

DOC> The following statement will cause an “ORA-01722: invalid number”

DOC> error if the user running this script is not SYS. Disconnect

DOC> and reconnect with AS SYSDBA.

DOC>######################################################################

DOC>######################################################################

DOC>#

DOC>######################################################################

DOC> The following statement will cause an “ORA-01722: invalid number”

DOC> error if the database server version is not correct for this script.

DOC> Perform “ALTER SYSTEM CHECKPOINT” prior to “SHUTDOWN ABORT”, and use

DOC> a different script or a different server.

DOC>######################################################################

DOC>######################################################################

DOC>#

DOC>#######################################################################

DOC> The following statement will cause an “ORA-01722: invalid number”

DOC> error if the database has not been opened for UPGRADE.

DOC>

DOC> Perform “ALTER SYSTEM CHECKPOINT” prior to “SHUTDOWN ABORT”, and

DOC> restart using UPGRADE.

DOC>#######################################################################

DOC>#######################################################################

DOC>#

DOC>#######################################################################

DOC> The following statement will cause an “ORA-01722: invalid number”

DOC> error if the Oracle Database Vault option is TRUE. Upgrades cannot

DOC> be run with the Oracle Database Vault option set to TRUE since

DOC> AS SYSDBA connections are restricted.

DOC>

DOC> Perform “ALTER SYSTEM CHECKPOINT” prior to “SHUTDOWN ABORT”, relink

DOC> the server without the Database Vault option, and restart the server

DOC> using UPGRADE mode.

DOC>

DOC>

DOC>#######################################################################

DOC>#######################################################################

DOC>#

DOC>#######################################################################

DOC> The following statement will cause an “ORA-01722: invalid number”

DOC> error if Database Vault is installed in the database but the Oracle

DOC> Label Security option is FALSE. To successfully upgrade Oracle

DOC> Database Vault, the Oracle Label Security option must be TRUE.

DOC>

DOC> Perform “ALTER SYSTEM CHECKPOINT” prior to “SHUTDOWN ABORT”,

DOC> relink the server with the OLS option (but without the Oracle Database

DOC> Vault option) and restart the server using UPGRADE.

DOC>#######################################################################

DOC>#######################################################################

DOC>#

DOC>#######################################################################

DOC> The following statement will cause an “ORA-01722: invalid number”

DOC> error if bootstrap migration is in progress and logminer clients

DOC> require utlmmig.sql to be run next to support this redo stream.

DOC>

DOC> Run utlmmig.sql

DOC> then (if needed)

DOC> restart the database using UPGRADE and

DOC> rerun the upgrade script.

DOC>#######################################################################

DOC>#######################################################################

DOC>#

DOC>#######################################################################

DOC> The following error is generated if the pre-upgrade tool has not been

DOC> run in the old ORACLE_HOME home prior to upgrading a pre-11.2 database:

DOC>

DOC> SELECT TO_NUMBER('MUST_HAVE_RUN_PRE-UPGRADE_TOOL_FOR_TIMEZONE')

DOC> *

DOC> ERROR at line 1:

DOC> ORA-01722: invalid number

DOC>

DOC> o Action:

DOC> Shutdown database ("alter system checkpoint" and then "shutdown abort").

DOC> Revert to the original oracle home and start the database.

DOC> Run pre-upgrade tool against the database.

DOC> Review and take appropriate actions based on the pre-upgrade

DOC> output before opening the datatabase in the new software version.

DOC>

DOC>#######################################################################

DOC>#######################################################################

DOC>#

DOC>#######################################################################

DOC> The following error is generated if the pre-upgrade tool has not been

DOC> run in the old oracle home prior to upgrading a pre-11.2 database:

DOC>

DOC> SELECT TO_NUMBER('MUST_BE_SAME_TIMEZONE_FILE_VERSION')

DOC> *

DOC> ERROR at line 1:

DOC> ORA-01722: invalid number

DOC>

DOC>

DOC> o Action:

DOC> Shutdown database ("alter system checkpoint" and then "shutdown abort").

DOC> Revert to the original ORACLE_HOME and start the database.

DOC> Run pre-upgrade tool against the database.

DOC> Review and take appropriate actions based on the pre-upgrade

DOC> output before opening the datatabase in the new software version.

DOC>

DOC>#######################################################################

DOC>#######################################################################

DOC>#

SELECT TO_NUMBER('MUST_BE_SAME_TIMEZONE_FILE_VERSION')

*

ERROR at line 1:

ORA-01722: invalid number

With the Partitioning, Real Application Clusters, Automatic Storage Management, OLAP,

Data Mining and Real Application Testing options

Searching My Oracle Support (MOS) for this error led to Master Note: ORA-1722 Errors during Upgrade (Doc ID 1466464.1), which describes the following fix:

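1) Check whether the SYS.REGISTRY$DATABASE table exists. If it does not, create it manually (connected AS SYSDBA) and populate it with the current platform and time zone file version:

CREATE TABLE sys.registry$database(

platform_id NUMBER,

platform_name VARCHAR2(101),

edition VARCHAR2(30),

tz_version NUMBER

);

INSERT INTO sys.registry$database

(platform_id, platform_name, edition, tz_version)

VALUES ((SELECT platform_id FROM v$database),

(SELECT platform_name FROM v$database),

NULL,

(SELECT version FROM v$timezone_file));

COMMIT;

2) If the table already exists, check whether the values in it are correct and fix them if they are not. For example, if only tz_version is wrong, it can be updated in place:

UPDATE sys.registry$database SET tz_version = (SELECT version FROM v$timezone_file);

COMMIT;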

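SQL> select * from sys.registry$database;

PLATFORM_ID PLATFORM_NAME EDITION TZ_VERSION

----------- ------------------------------ ------------------------------ ----------

13 Linux x86 64-bit 4

The next three results were captured without the SQL text of their queries. They returned 14, 13 and Linux x86 64-bit: 13 and Linux x86 64-bit match the platform_id and platform_name recorded above, while 14 differs from the recorded tz_version of 4, which is consistent with the time zone file version mismatch reported by the error.

----------

14

-----------

13

------------------------------

Linux x86 64-bit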

SQL> @?/rdbms/admin/catupgrd.sql

DOC>#######################################################################

DOC>#######################################################################

DOC>

DOC> The first time this script is run, there should be no error messages

DOC> generated; all normal upgrade error messages are suppressed.

DOC>

DOC> If this script is being re-run after correcting some problem, then

DOC> expect the following error which is not automatically suppressed:

DOC>

DOC> ORA-00001: unique constraint (<constraint_name>) violated

DOC> possibly in conjunction with

DOC> ORA-06512: at "<procedure/function name>", line NN

DOC>

DOC> These errors will automatically be suppressed by the Database Upgrade

DOC> Assistant (DBUA) when it re-runs an upgrade.

DOC>

DOC>#######################################################################

DOC>#######################################################################

DOC>#

DOC>######################################################################

DOC>######################################################################

DOC> The following statement will cause an "ORA-01722: invalid number"

DOC> error if the user running this script is not SYS. Disconnect

DOC> and reconnect with AS SYSDBA.

DOC>######################################################################

DOC>######################################################################

| Component Name | Current Status | Version Number | Elapsed Time (HH:MM:SS) |
|---|---|---|---|
| Oracle Server | VALID | 11.2.0.4.0 | 00:35:25 |
| JServer JAVA Virtual Machine | VALID | 11.2.0.4.0 | 00:23:43 |
| Oracle Real Application Clusters | VALID | 11.2.0.4.0 | 00:00:03 |
| Oracle Workspace Manager | VALID | 11.2.0.4.0 | 00:02:05 |
| OLAP Analytic Workspace | VALID | 11.2.0.4.0 | 00:02:01 |
| OLAP Catalog | VALID | 11.2.0.4.0 | 00:01:32 |
| Oracle OLAP API | VALID | 11.2.0.4.0 | 00:02:33 |
| Oracle Enterprise Manager | VALID | 11.2.0.4.0 | 00:11:27 |
| Oracle XDK | VALID | 11.2.0.4.0 | 00:02:38 |
| Oracle Text | VALID | 11.2.0.4.0 | 00:02:03 |
| Oracle XML Database | VALID | 11.2.0.4.0 | 00:07:24 |
| Oracle Database Java Packages | VALID | 11.2.0.4.0 | 00:00:57 |
| Oracle Multimedia | VALID | 11.2.0.4.0 | 00:12:50 |
| Spatial | VALID | 11.2.0.4.0 | 00:18:06 |
| Oracle Expression Filter | VALID | 11.2.0.4.0 | 00:00:40 |
| Oracle Rules Manager | VALID | 11.2.0.4.0 | 05:51:54 |
| Oracle Application Express | VALID | 3.2.1.00.12 | 00:35:14 |
| Final Actions | | | 00:04:26 |
| Total Upgrade Time | | | 08:36:32 |
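Rather than reading this summary by eye, components that are not VALID can be picked out with a short script. The following is a minimal bash/awk sketch, assuming the raw SQL*Plus layout of the summary, in which each component name is on its own line and is followed by a line beginning with "." that holds status, version and elapsed time. The function name check_component_status is made up for illustration.

```shell
#!/usr/bin/env bash
# Sketch: report components that are not VALID in the post-upgrade status
# summary. Assumes the raw SQL*Plus layout: a component name line followed
# by a line starting with "." that holds status, version and elapsed time.
check_component_status() {
  awk '
    /^\./ {
      # Status lines have at least 4 fields: ".", status, version, time.
      # Lines such as ". 00:04:26" (Final Actions) or a lone "." are skipped.
      if (NF >= 4) {
        total++
        if ($2 != "VALID") {
          bad++
          printf "NOT VALID: %s (%s)\n", name, $2
        }
      }
      next
    }
    # Any other non-empty line is remembered as the current component name.
    NF > 0 { name = $0 }
    END {
      printf "%d component(s) checked, %d not VALID\n", total, bad
      exit (bad > 0)
    }
  '
}
```

For example, `check_component_status < upgrade_spool.log` (the spool file name is hypothetical) prints one line per component whose status is not VALID plus a count, and returns a non-zero exit status if any were found.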

SQL> SET SERVEROUTPUT OFF

SQL> SET VERIFY ON

SQL> commit;

Database closed.

Database dismounted.

ORACLE instance shut down.

SQL>

SQL>

SQL>

SQL> DOC

DOC>#######################################################################

DOC>#######################################################################

DOC>

DOC> The above sql script is the final step of the upgrade. Please

DOC> review any errors in the spool log file. If there are any errors in

DOC> the spool file, consult the Oracle Database Upgrade Guide for

DOC> troubleshooting recommendations.

DOC>

DOC> Next restart for normal operation, and then run utlrp.sql to

DOC> recompile any invalid application objects.

DOC>

DOC> If the source database had an older time zone version prior to

DOC> upgrade, then please run the DBMS_DST package. DBMS_DST will upgrade

DOC> TIMESTAMP WITH TIME ZONE data to use the latest time zone file shipped

DOC> with Oracle.

DOC>

DOC>#######################################################################

DOC>#######################################################################

DOC>#

SQL>

SQL> Rem Set errorlogging off

SQL> SET ERRORLOGGING OFF;

SQL>

SQL> REM END OF CATUPGRD.SQL

SQL>

SQL> REM bug 12337546 - Exit current sqlplus session at end of catupgrd.sql.

SQL> REM This forces user to start a new sqlplus session in order

SQL> REM to connect to the upgraded db.

SQL> exit

Disconnected from Oracle Database 11g Enterprise Edition Release 11.2.0.4.0 - 64bit Production

With the Partitioning, Real Application Clusters, Automatic Storage Management, OLAP,

Data Mining and Real Application Testing options

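-- While the script is running, keep an eye on the alert log, archive log space usage and similar issues; after the script finishes, check its log for errors.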
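One way to follow the alert log during the upgrade is to tail it and keep only the lines that need attention. This is a minimal sketch, not the procedure used in this test. The filter pattern is an assumption: any ORA- error, which covers archiver and recovery-area messages such as ORA-00257 and ORA-19815, plus the "cannot allocate new log" and "Checkpoint not complete" messages. The alert log path is a hypothetical example that follows the 11g ADR layout.

```shell
#!/usr/bin/env bash
# Sketch: keep only alert-log lines worth a look while catupgrd.sql runs.
# Matches any ORA-nnnnn error (archiver/FRA problems such as ORA-00257 and
# ORA-19815 included) plus log-switch stalls ("cannot allocate new log",
# "Checkpoint not complete"). --line-buffered makes it usable behind tail -F.
alert_filter() {
  grep --line-buffered -E 'ORA-[0-9]{5}|cannot allocate new log|Checkpoint not complete'
}

# Hypothetical path: $ORACLE_BASE/diag/rdbms/<db_unique_name>/<instance>/trace.
ALERT_LOG=${ALERT_LOG:-/u01/app/oracle/diag/rdbms/rac11g/rac11g1/trace/alert_rac11g1.log}
# Usage (runs until interrupted): tail -F "$ALERT_LOG" | alert_filter
```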

[oracle@11grac1.localdomain:/home/oracle]$ echo $ORACLE_HOME

/u01/app/oracle/product/11.2.0/dbhome_1

[oracle@11grac1.localdomain:/home/oracle]$ sqlplus / as sysdba

SQL> startup

ORACLE instance started.

Fixed Size 2257880 bytes

Variable Size 759172136 bytes

Database Buffers 75497472 bytes

Redo Buffers 2355200 bytes

Database mounted.

Database opened.

SQL> @?/rdbms/admin/catuppst.sql

..............................(output omitted)..............................

SQL> SET echo off

Check the following log file for errors:

/u01/app/cfgtoollogs/catbundle/catbundle_PSU_RAC11G_APPLY_2015May19_00_57_34.log

-- As the message says, check the log file for errors.
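The catbundle log above and the catupgrd.sql spool file mentioned in the DOC banners can be long. Below is a minimal bash sketch that counts the distinct error codes in such a file. It assumes errors appear as ORA-, PLS- or SP2- tokens. It skips lines beginning with DOC>, because the catupgrd.sql banners themselves mention ORA-01722, ORA-00001 and ORA-06512. The function name summarize_oracle_errors and its optional ignore pattern are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: count the Oracle error codes that appear in an upgrade/patch log.
# Assumptions: errors show up as ORA-nnnnn, PLS-nnnnn or SP2-nnnn tokens, and
# lines starting with "DOC>" are catupgrd.sql banner text, not real errors.
# Returns 0 if nothing (left after filtering), 1 if errors found, 2 if unreadable.
summarize_oracle_errors() {
  local log="$1" ignore="${2:-}" codes
  if [ ! -r "$log" ]; then
    echo "cannot read $log" >&2
    return 2
  fi
  # Skip DOC> banner lines, pull out every error token, count each code.
  codes=$(grep -v '^DOC>' "$log" | grep -oE '(ORA|PLS|SP2)-[0-9]{4,5}' | sort | uniq -c | sort -rn)
  # Optionally drop codes the caller expects, e.g. ORA-00001 on a re-run.
  if [ -n "$ignore" ] && [ -n "$codes" ]; then
    codes=$(printf '%s\n' "$codes" | grep -vE "$ignore" || true)
  fi
  if [ -z "$codes" ]; then
    echo "no ORA-/PLS-/SP2- errors found in $log"
    return 0
  fi
  printf '%s\n' "$codes"
  return 1
}
```

For example, `summarize_oracle_errors /u01/app/cfgtoollogs/catbundle/catbundle_PSU_RAC11G_APPLY_2015May19_00_57_34.log`. When catupgrd.sql is re-run, the banner says ORA-00001 (possibly with ORA-06512) is expected, so `'ORA-(00001|06512)'` could be passed as the second argument.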

SQL> @?/rdbms/admin/utlrp.sql

..............................(output omitted)..............................

ERRORS DURING RECOMPILATION

---------------------------

0

[oracle@11grac1.localdomain:/home/oracle]$ lsnrctl status

STATUS of the LISTENER

------------------------

Alias LISTENER

Version TNSLSNR for Linux: Version 11.2.0.4.0 - Production

Start Date 19-MAY-2015 00:49:33

Uptime 0 days 0 hr. 50 min. 6 sec

Trace Level off

Security ON: Local OS Authentication

SNMP OFF

Listener Parameter File /u01/app/11.2.0/grid/network/admin/listener.ora

Listener Log File /u01/app/grid/diag/tnslsnr/11grac1/listener/alert/log.xml

Listening Endpoints Summary...

(DESCRIPTION=(ADDRESS=(PROTOCOL=ipc)(KEY=LISTENER)))

(DESCRIPTION=(ADDRESS=(PROTOCOL=tcp)(HOST=192.168.56.111)(PORT=1521)))

(DESCRIPTION=(ADDRESS=(PROTOCOL=tcp)(HOST=192.168.56.112)(PORT=1521)))

Services Summary...

Service "+ASM" has 1 instance(s).

Instance "+ASM1", status READY, has 1 handler(s) for this service...

Service "rac11g" has 1 instance(s).

Instance "rac11g1", status READY, has 2 handler(s) for this service...

Service "rac11gXDB" has 1 instance(s).

Instance "rac11g1", status READY, has 1 handler(s) for this service...

The command completed successfully
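To run the same check from a script (for instance on both nodes after the restart), the Services Summary section of lsnrctl status can be reduced to one line per service and instance. This is a minimal awk sketch. It assumes the lsnrctl output format shown above, with plain double quotes as lsnrctl prints them. list_listener_services is a hypothetical name.

```shell
#!/usr/bin/env bash
# Sketch: turn the "Services Summary" of `lsnrctl status` into
# "<service> <instance> <status>" lines. Fields are split on double quotes,
# so the service/instance name is always field 2.
list_listener_services() {
  awk -F'"' '
    /^[ \t]*Service "/ { svc = $2; next }
    /^[ \t]*Instance "/ {
      st = "?"
      # Field 3 looks like: , status READY, has 2 handler(s) ...
      if (match($3, /status [A-Z]+/)) st = substr($3, RSTART + 7, RLENGTH - 7)
      print svc, $2, st
    }
  '
}
```

For example, `lsnrctl status | list_listener_services | awk '$3 != "READY"'` prints only the instances that are not in READY status.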

[oracle@11grac1.localdomain:/home/oracle]$ tail -f /etc/oratab

# The first and second fields are the system identifier and home

# directory of the database respectively. The third filed indicates

# to the dbstart utility that the database should , "Y", or should not,

# "N", be brought up at system boot time.

#

# Multiple entries with the same $ORACLE_SID are not allowed.

#

#

+ASM1:/u01/app/11.2.0/grid:N # line added by Agent

rac11g:/u01/app/oracle/product/11.2.0/dbhome_1:N # line added by Agent

-- /etc/oratab on node 2 (11grac2):

# The first and second fields are the system identifier and home

# directory of the database respectively. The third filed indicates

# to the dbstart utility that the database should , "Y", or should not,

# "N", be brought up at system boot time.

#

# Multiple entries with the same $ORACLE_SID are not allowed.

#

#

+ASM2:/u01/app/11.2.0/grid:N # line added by Agent

rac11g:/u01/app/oracle/product/11.2.0/dbhome_1:N # line added by Agent
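As the comment header explains, each oratab entry has the form SID:ORACLE_HOME:Y|N, and the last field tells dbstart whether to start that database at boot. The minimal sketch below lists the entries, which can be used to confirm that the ASM and database entries now point at the 11.2 homes. parse_oratab is a hypothetical helper that ignores comments and blank lines.

```shell
#!/usr/bin/env bash
# Sketch: list SID / ORACLE_HOME / autostart flag from an oratab file.
# Comment-only and blank lines are dropped, trailing "# ..." comments
# (such as "# line added by Agent") are stripped before splitting on ":".
parse_oratab() {
  local file="${1:-/etc/oratab}"
  sed -e 's/#.*$//' -e 's/[[:space:]]*$//' "$file" |
    awk -F: 'NF >= 3 { printf "SID=%s HOME=%s AUTOSTART=%s\n", $1, $2, $3 }'
}
```

For example, `parse_oratab | grep -v '/11.2.0/'` should print nothing once every entry has been moved to an 11.2 home.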

SQL> show parameter cluster

NAME TYPE VALUE

------------------------------------ ----------- ------------------------------

cluster_database boolean FALSE

cluster_database_instances integer 1

cluster_interconnects string

SQL> alter system set cluster_database=true scope=spfile;

SQL> shutdown immediate

SQL> startup;

[grid@11grac1 ~]$ crs_stat -t

Name Type Target State Host

------------------------------------------------------------

ora....SM1.asm application ONLINE ONLINE 11grac1

ora....C1.lsnr application ONLINE ONLINE 11grac1

ora....ac1.gsd application OFFLINE OFFLINE

ora....ac1.ons application ONLINE ONLINE 11grac1

ora....ac1.vip ora....t1.type ONLINE ONLINE 11grac1

ora....SM2.asm application ONLINE ONLINE 11grac2

ora....C2.lsnr application ONLINE ONLINE 11grac2

ora....ac2.gsd application OFFLINE OFFLINE

ora....ac2.ons application ONLINE ONLINE 11grac2

ora....ac2.vip ora....t1.type ONLINE ONLINE 11grac2

ora.DATA.dg ora....up.type ONLINE ONLINE 11grac1

ora....ER.lsnr ora....er.type ONLINE ONLINE 11grac1

ora....N1.lsnr ora....er.type ONLINE ONLINE 11grac1

ora....N2.lsnr ora....er.type ONLINE ONLINE 11grac2

ora....N3.lsnr ora....er.type ONLINE ONLINE 11grac2

ora.OVDF.dg ora....up.type ONLINE ONLINE 11grac1

ora.RFA.dg ora....up.type ONLINE ONLINE 11grac1

ora.asm ora.asm.type ONLINE ONLINE 11grac1

ora.cvu ora.cvu.type ONLINE ONLINE 11grac2

ora.gsd ora.gsd.type OFFLINE OFFLINE

ora....network ora....rk.type ONLINE ONLINE 11grac1

ora.oc4j ora.oc4j.type ONLINE ONLINE 11grac2

ora.ons ora.ons.type ONLINE ONLINE 11grac1

ora....ry.acfs ora....fs.type ONLINE ONLINE 11grac1

ora.scan1.vip ora....ip.type ONLINE ONLINE 11grac1

ora.scan2.vip ora....ip.type ONLINE ONLINE 11grac2
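In this listing the gsd resources are OFFLINE/OFFLINE, which is their default state in 11.2. What usually deserves attention is a resource whose Target and State differ. The minimal awk sketch below flags such resources in `crs_stat -t` output. The function name is hypothetical. crs_stat is deprecated in 11.2 in favor of `crsctl stat res -t`, which uses a different layout, so this sketch only handles the crs_stat -t format shown above.

```shell
#!/usr/bin/env bash
# Sketch: flag resources whose Target and State differ in `crs_stat -t`
# output (columns: Name Type Target State [Host]). Header and dashed
# separator lines are skipped because their 3rd field is not ONLINE/OFFLINE.
crs_target_state_mismatch() {
  awk '
    ($3 == "ONLINE" || $3 == "OFFLINE") && $3 != $4 {
      printf "%s target=%s state=%s\n", $1, $3, $4
      bad++
    }
    END { exit (bad > 0) }
  '
}
```

For example, `crs_stat -t | crs_target_state_mismatch` prints nothing and exits 0 when every resource is in its target state.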