--- 0. Environment description

2-node RAC with shared storage

OS version: Red Hat Enterprise Linux 6.6

10 shared storage disks: /dev/sdb through /dev/sdk

--- OS version

[root@dbtest3 ~]# cat /etc/redhat-release

Red Hat Enterprise Linux Server release 6.6 (Santiago)

[root@dbtest4 ~]# cat /etc/redhat-release

Red Hat Enterprise Linux Server release 6.6 (Santiago)

--- The 10 shared disks

[root@dbtest3 ~]# ls -l /dev/sd*

brw-rw---- 1 root disk 8, 0 Aug 2 22:09 /dev/sda

brw-rw---- 1 root disk 8, 1 Aug 2 22:09 /dev/sda1

brw-rw---- 1 root disk 8, 2 Aug 2 22:09 /dev/sda2

brw-rw---- 1 root disk 8, 3 Aug 2 22:09 /dev/sda3

brw-rw---- 1 root disk 8, 16 Aug 2 22:09 /dev/sdb

brw-rw---- 1 root disk 8, 32 Aug 2 22:09 /dev/sdc

brw-rw---- 1 root disk 8, 48 Aug 2 22:09 /dev/sdd

brw-rw---- 1 root disk 8, 64 Aug 2 22:09 /dev/sde

brw-rw---- 1 root disk 8, 80 Aug 2 22:09 /dev/sdf

brw-rw---- 1 root disk 8, 96 Aug 2 22:09 /dev/sdg

brw-rw---- 1 root disk 8, 112 Aug 2 22:09 /dev/sdh

brw-rw---- 1 root disk 8, 128 Aug 2 22:09 /dev/sdi

brw-rw---- 1 root disk 8, 144 Aug 2 22:09 /dev/sdj

brw-rw---- 1 root disk 8, 160 Aug 2 22:09 /dev/sdk

[root@dbtest4 ~]# ls -l /dev/sd*

brw-rw---- 1 root disk 8, 0 Aug 2 22:10 /dev/sda

brw-rw---- 1 root disk 8, 1 Aug 2 22:10 /dev/sda1

brw-rw---- 1 root disk 8, 2 Aug 2 22:10 /dev/sda2

brw-rw---- 1 root disk 8, 3 Aug 2 22:10 /dev/sda3

brw-rw---- 1 root disk 8, 16 Aug 2 22:10 /dev/sdb

brw-rw---- 1 root disk 8, 32 Aug 2 22:10 /dev/sdc

brw-rw---- 1 root disk 8, 48 Aug 2 22:10 /dev/sdd

brw-rw---- 1 root disk 8, 64 Aug 2 22:10 /dev/sde

brw-rw---- 1 root disk 8, 80 Aug 2 22:10 /dev/sdf

brw-rw---- 1 root disk 8, 96 Aug 2 22:10 /dev/sdg

brw-rw---- 1 root disk 8, 112 Aug 2 22:10 /dev/sdh

brw-rw---- 1 root disk 8, 128 Aug 2 22:10 /dev/sdi

brw-rw---- 1 root disk 8, 144 Aug 2 22:10 /dev/sdj

brw-rw---- 1 root disk 8, 160 Aug 2 22:10 /dev/sdk

--- fdisk output for the 10 shared disks

[root@dbtest3 ~]# fdisk -l

Disk /dev/sda: 85.9 GB, 85899345920 bytes

255 heads, 63 sectors/track, 10443 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x0002c572

Device Boot Start End Blocks Id System

/dev/sda1 * 1 26 204800 83 Linux

Partition 1 does not end on cylinder boundary.

/dev/sda2 26 536 4096000 82 Linux swap / Solaris

Partition 2 does not end on cylinder boundary.

/dev/sda3 536 10444 79584256 8e Linux LVM

Disk /dev/sdb: 2147 MB, 2147483648 bytes

67 heads, 62 sectors/track, 1009 cylinders

Units = cylinders of 4154 * 512 = 2126848 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x6ae81c6f

Device Boot Start End Blocks Id System

Disk /dev/sdc: 2147 MB, 2147483648 bytes

67 heads, 62 sectors/track, 1009 cylinders

Units = cylinders of 4154 * 512 = 2126848 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x00000000

Disk /dev/sde: 2147 MB, 2147483648 bytes

67 heads, 62 sectors/track, 1009 cylinders

Units = cylinders of 4154 * 512 = 2126848 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x00000000

Disk /dev/sdd: 2147 MB, 2147483648 bytes

67 heads, 62 sectors/track, 1009 cylinders

Units = cylinders of 4154 * 512 = 2126848 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x00000000

Disk /dev/sdf: 2147 MB, 2147483648 bytes

67 heads, 62 sectors/track, 1009 cylinders

Units = cylinders of 4154 * 512 = 2126848 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x00000000

Disk /dev/sdh: 10.7 GB, 10737418240 bytes

64 heads, 32 sectors/track, 10240 cylinders

Units = cylinders of 2048 * 512 = 1048576 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x00000000

Disk /dev/sdg: 10.7 GB, 10737418240 bytes

64 heads, 32 sectors/track, 10240 cylinders

Units = cylinders of 2048 * 512 = 1048576 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x00000000

Disk /dev/sdi: 10.7 GB, 10737418240 bytes

64 heads, 32 sectors/track, 10240 cylinders

Units = cylinders of 2048 * 512 = 1048576 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x00000000

Disk /dev/sdk: 10.7 GB, 10737418240 bytes

64 heads, 32 sectors/track, 10240 cylinders

Units = cylinders of 2048 * 512 = 1048576 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x00000000

Disk /dev/sdj: 10.7 GB, 10737418240 bytes

64 heads, 32 sectors/track, 10240 cylinders

Units = cylinders of 2048 * 512 = 1048576 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x00000000

Disk /dev/mapper/vg_dbtest1-LogVol00: 81.5 GB, 81491132416 bytes

255 heads, 63 sectors/track, 9907 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x00000000

[root@dbtest4 ~]# fdisk -l

Disk /dev/sda: 85.9 GB, 85899345920 bytes

255 heads, 63 sectors/track, 10443 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x0002c572

Device Boot Start End Blocks Id System

/dev/sda1 * 1 26 204800 83 Linux

Partition 1 does not end on cylinder boundary.

/dev/sda2 26 536 4096000 82 Linux swap / Solaris

Partition 2 does not end on cylinder boundary.

/dev/sda3 536 10444 79584256 8e Linux LVM

Disk /dev/sdb: 2147 MB, 2147483648 bytes

67 heads, 62 sectors/track, 1009 cylinders

Units = cylinders of 4154 * 512 = 2126848 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x6ae81c6f

Device Boot Start End Blocks Id System

Disk /dev/sdc: 2147 MB, 2147483648 bytes

67 heads, 62 sectors/track, 1009 cylinders

Units = cylinders of 4154 * 512 = 2126848 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x00000000

Disk /dev/sdd: 2147 MB, 2147483648 bytes

67 heads, 62 sectors/track, 1009 cylinders

Units = cylinders of 4154 * 512 = 2126848 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x00000000

Disk /dev/sde: 2147 MB, 2147483648 bytes

67 heads, 62 sectors/track, 1009 cylinders

Units = cylinders of 4154 * 512 = 2126848 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x00000000

Disk /dev/sdf: 2147 MB, 2147483648 bytes

67 heads, 62 sectors/track, 1009 cylinders

Units = cylinders of 4154 * 512 = 2126848 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x00000000

Disk /dev/sdg: 10.7 GB, 10737418240 bytes

64 heads, 32 sectors/track, 10240 cylinders

Units = cylinders of 2048 * 512 = 1048576 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x00000000

Disk /dev/sdh: 10.7 GB, 10737418240 bytes

64 heads, 32 sectors/track, 10240 cylinders

Units = cylinders of 2048 * 512 = 1048576 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x00000000

Disk /dev/sdj: 10.7 GB, 10737418240 bytes

64 heads, 32 sectors/track, 10240 cylinders

Units = cylinders of 2048 * 512 = 1048576 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x00000000

Disk /dev/sdi: 10.7 GB, 10737418240 bytes

64 heads, 32 sectors/track, 10240 cylinders

Units = cylinders of 2048 * 512 = 1048576 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x00000000

Disk /dev/sdk: 10.7 GB, 10737418240 bytes

64 heads, 32 sectors/track, 10240 cylinders

Units = cylinders of 2048 * 512 = 1048576 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x00000000

Disk /dev/mapper/vg_dbtest1-LogVol00: 81.5 GB, 81491132416 bytes

255 heads, 63 sectors/track, 9907 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x00000000

--- UUIDs (SCSI IDs) of the 10 shared disks

[root@dbtest3 ~]# scsi_id /dev/sdb

36000c29cadb411725a7d6daacd6ad108

[root@dbtest3 ~]# scsi_id /dev/sdc

36000c29838a242f103bb6941175efec1

[root@dbtest3 ~]# scsi_id /dev/sdd

36000c29227146322659b155492a717c3

[root@dbtest3 ~]# scsi_id /dev/sde

36000c298040617a958533e6a46671d60

[root@dbtest3 ~]# scsi_id /dev/sdf

36000c2973cf2951a3b61c87301e1c99a

[root@dbtest3 ~]# scsi_id /dev/sdg

36000c29a926c801b7f9a3b245308e092

[root@dbtest3 ~]# scsi_id /dev/sdh

36000c29944cdbb8110dc96a802e142c8

[root@dbtest3 ~]# scsi_id /dev/sdi

36000c29b1312cf84809d67bc7c8dbe28

[root@dbtest3 ~]# scsi_id /dev/sdj

36000c29d4d97c71a36232c4e0a322be0

[root@dbtest3 ~]# scsi_id /dev/sdk

36000c29d2c6230eae26892a4670d909e

[root@dbtest4 ~]# scsi_id /dev/sdb

36000c29cadb411725a7d6daacd6ad108

[root@dbtest4 ~]# scsi_id /dev/sdc

36000c29838a242f103bb6941175efec1

[root@dbtest4 ~]# scsi_id /dev/sdd

36000c29227146322659b155492a717c3

[root@dbtest4 ~]# scsi_id /dev/sde

36000c298040617a958533e6a46671d60

[root@dbtest4 ~]# scsi_id /dev/sdf

36000c2973cf2951a3b61c87301e1c99a

[root@dbtest4 ~]# scsi_id /dev/sdg

36000c29a926c801b7f9a3b245308e092

[root@dbtest4 ~]# scsi_id /dev/sdh

36000c29944cdbb8110dc96a802e142c8

[root@dbtest4 ~]# scsi_id /dev/sdi

36000c29b1312cf84809d67bc7c8dbe28

[root@dbtest4 ~]# scsi_id /dev/sdj

36000c29d4d97c71a36232c4e0a322be0

[root@dbtest4 ~]# scsi_id /dev/sdk

36000c29d2c6230eae26892a4670d909e
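
--- The two listings above return the same ten IDs on both nodes. As a rough sketch, this comparison can be automated once each node's output has been saved as "device id" pairs. The file layout and the function names below are assumptions made for illustration, not part of the original procedure.

```shell
#!/bin/sh
# Sketch: compare "device scsi_id" listings captured on two RAC nodes.
# Assumption: each input file holds one "<device> <id>" pair per line, e.g.
#   /dev/sdb 36000c29cadb411725a7d6daacd6ad108
# captured on each node (as root) with something like:
#   for d in /dev/sd[b-k]; do echo "$d $(scsi_id "$d")"; done > node.ids

# Print the IDs found in a listing, sorted, one per line.
ids_of() {
    awk '{print $2}' "$1" | sort
}

# Succeed only if both listings contain exactly the same set of IDs,
# regardless of which device name each ID is attached to.
same_shared_disks() {
    [ "$(ids_of "$1")" = "$(ids_of "$2")" ]
}

# Print "<id> <name on node 1> <name on node 2>" for every disk whose
# device name differs between the two listings.
name_mismatches() {
    a=$(mktemp)
    b=$(mktemp)
    sort -k2,2 "$1" > "$a"
    sort -k2,2 "$2" > "$b"
    join -1 2 -2 2 "$a" "$b" | awk '$2 != $3'
    rm -f "$a" "$b"
}
```

--- An empty result from name_mismatches means every ID sits on the same device name on both nodes at the time of capture; it says nothing about what happens after a reboot.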

--- 1. Bind the shared disks with a udev 99 rules file

--- Write options=--whitelisted --replace-whitespace to the /etc/scsi_id.config configuration file

[root@dbtest3 ~]# echo "options=--whitelisted --replace-whitespace" > /etc/scsi_id.config

[root@dbtest4 ~]# echo "options=--whitelisted --replace-whitespace" > /etc/scsi_id.config

--- On both RAC nodes, get the UUIDs of the 10 shared disks and generate the udev 99 rules

--- Add the output below to /etc/udev/rules.d/99-oracle-asmdevices.rules on each of the two nodes

[root@dbtest3 ~]# for i in b c d e f g h i j k;

> do

> echo "KERNEL==\"sd*\", BUS==\"scsi\", PROGRAM==\"/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/\$name\", RESULT==\"`/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/sd$i`\", NAME=\"asm-disk$i\", OWNER=\"grid\", GROUP=\"asmadmin\", MODE=\"0660\""

> done

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="36000c29cadb411725a7d6daacd6ad108", NAME="asm-diskb", OWNER="grid", GROUP="asmadmin", MODE="0660"

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="36000c29838a242f103bb6941175efec1", NAME="asm-diskc", OWNER="grid", GROUP="asmadmin", MODE="0660"

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="36000c29227146322659b155492a717c3", NAME="asm-diskd", OWNER="grid", GROUP="asmadmin", MODE="0660"

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="36000c298040617a958533e6a46671d60", NAME="asm-diske", OWNER="grid", GROUP="asmadmin", MODE="0660"

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="36000c2973cf2951a3b61c87301e1c99a", NAME="asm-diskf", OWNER="grid", GROUP="asmadmin", MODE="0660"

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="36000c29a926c801b7f9a3b245308e092", NAME="asm-diskg", OWNER="grid", GROUP="asmadmin", MODE="0660"

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="36000c29944cdbb8110dc96a802e142c8", NAME="asm-diskh", OWNER="grid", GROUP="asmadmin", MODE="0660"

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="36000c29b1312cf84809d67bc7c8dbe28", NAME="asm-diski", OWNER="grid", GROUP="asmadmin", MODE="0660"

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="36000c29d4d97c71a36232c4e0a322be0", NAME="asm-diskj", OWNER="grid", GROUP="asmadmin", MODE="0660"

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="36000c29d2c6230eae26892a4670d909e", NAME="asm-diskk", OWNER="grid", GROUP="asmadmin", MODE="0660"

[root@dbtest4 ~]# for i in b c d e f g h i j k;

> do

> echo "KERNEL==\"sd*\", BUS==\"scsi\", PROGRAM==\"/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/\$name\", RESULT==\"`/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/sd$i`\", NAME=\"asm-disk$i\", OWNER=\"grid\", GROUP=\"asmadmin\", MODE=\"0660\""

> done

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="36000c29cadb411725a7d6daacd6ad108", NAME="asm-diskb", OWNER="grid", GROUP="asmadmin", MODE="0660"

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="36000c29838a242f103bb6941175efec1", NAME="asm-diskc", OWNER="grid", GROUP="asmadmin", MODE="0660"

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="36000c29227146322659b155492a717c3", NAME="asm-diskd", OWNER="grid", GROUP="asmadmin", MODE="0660"

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="36000c298040617a958533e6a46671d60", NAME="asm-diske", OWNER="grid", GROUP="asmadmin", MODE="0660"

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="36000c2973cf2951a3b61c87301e1c99a", NAME="asm-diskf", OWNER="grid", GROUP="asmadmin", MODE="0660"

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="36000c29a926c801b7f9a3b245308e092", NAME="asm-diskg", OWNER="grid", GROUP="asmadmin", MODE="0660"

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="36000c29944cdbb8110dc96a802e142c8", NAME="asm-diskh", OWNER="grid", GROUP="asmadmin", MODE="0660"

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="36000c29b1312cf84809d67bc7c8dbe28", NAME="asm-diski", OWNER="grid", GROUP="asmadmin", MODE="0660"

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="36000c29d4d97c71a36232c4e0a322be0", NAME="asm-diskj", OWNER="grid", GROUP="asmadmin", MODE="0660"

KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="36000c29d2c6230eae26892a4670d909e", NAME="asm-diskk", OWNER="grid", GROUP="asmadmin", MODE="0660"

[root@dbtest3 ~]# vi /etc/udev/rules.d/99-oracle-asmdevices.rules

[root@dbtest4 ~]# vi /etc/udev/rules.d/99-oracle-asmdevices.rules
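
--- The loop above prints the rules so they can be pasted in with vi. As a sketch, the same rule text can be produced by a small function and redirected straight into the rules file. The function name make_asm_rule is hypothetical; the rule text is copied from the output above.

```shell
#!/bin/sh
# Sketch: build one 99-oracle-asmdevices.rules line from a disk letter and its ID.
# $1 = disk letter (b, c, ...), $2 = ID reported by scsi_id for that disk.
# The single-quoted format keeps $name literal so that udev, not the shell, expands it.
make_asm_rule() {
    letter=$1
    wwid=$2
    printf 'KERNEL=="sd*", BUS=="scsi", PROGRAM=="/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="%s", NAME="asm-disk%s", OWNER="grid", GROUP="asmadmin", MODE="0660"\n' "$wwid" "$letter"
}

# Hypothetical usage on each node (as root), writing the file directly:
#   for i in b c d e f g h i j k; do
#       make_asm_rule "$i" "$(/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/sd$i)"
#   done > /etc/udev/rules.d/99-oracle-asmdevices.rules
```

--- Generating the file on one node and copying it to the other is only safe because the IDs are identical on both nodes, as the earlier scsi_id output shows.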

--- Reload the rules file and start udev

[root@dbtest3 ~]# udevadm control --reload-rules

[root@dbtest3 ~]# start_udev

Starting udev: udevd[7979]: GOTO 'pulseaudio_check_usb' has no matching label in: '/lib/udev/rules.d/90-pulseaudio.rules'

[ OK ]

[root@dbtest4 ~]# udevadm control --reload-rules

[root@dbtest4 ~]# start_udev

Starting udev: udevd[7967]: GOTO 'pulseaudio_check_usb' has no matching label in: '/lib/udev/rules.d/90-pulseaudio.rules'

[ OK ]

--- Check the ASM shared disks created by the binding

[root@dbtest3 ~]# ls -l /dev/asm-disk*

brw-rw---- 1 grid asmadmin 8, 16 Aug 3 10:33 /dev/asm-diskb

brw-rw---- 1 grid asmadmin 8, 32 Aug 3 10:33 /dev/asm-diskc

brw-rw---- 1 grid asmadmin 8, 48 Aug 3 10:33 /dev/asm-diskd

brw-rw---- 1 grid asmadmin 8, 64 Aug 3 10:33 /dev/asm-diske

brw-rw---- 1 grid asmadmin 8, 80 Aug 3 10:33 /dev/asm-diskf

brw-rw---- 1 grid asmadmin 8, 96 Aug 3 10:33 /dev/asm-diskg

brw-rw---- 1 grid asmadmin 8, 112 Aug 3 10:33 /dev/asm-diskh

brw-rw---- 1 grid asmadmin 8, 128 Aug 3 10:33 /dev/asm-diski

brw-rw---- 1 grid asmadmin 8, 144 Aug 3 10:33 /dev/asm-diskj

brw-rw---- 1 grid asmadmin 8, 160 Aug 3 10:33 /dev/asm-diskk

[root@dbtest4 ~]# ls -l /dev/asm-disk*

brw-rw---- 1 grid asmadmin 8, 16 Aug 3 10:33 /dev/asm-diskb

brw-rw---- 1 grid asmadmin 8, 32 Aug 3 10:33 /dev/asm-diskc

brw-rw---- 1 grid asmadmin 8, 48 Aug 3 10:33 /dev/asm-diskd

brw-rw---- 1 grid asmadmin 8, 64 Aug 3 10:33 /dev/asm-diske

brw-rw---- 1 grid asmadmin 8, 80 Aug 3 10:33 /dev/asm-diskf

brw-rw---- 1 grid asmadmin 8, 96 Aug 3 10:33 /dev/asm-diskg

brw-rw---- 1 grid asmadmin 8, 112 Aug 3 10:33 /dev/asm-diskh

brw-rw---- 1 grid asmadmin 8, 128 Aug 3 10:33 /dev/asm-diski

brw-rw---- 1 grid asmadmin 8, 144 Aug 3 10:33 /dev/asm-diskj

brw-rw---- 1 grid asmadmin 8, 160 Aug 3 10:33 /dev/asm-diskk
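
--- The listings above are checked by eye. A minimal sketch of the same check as a filter over ls -l output follows; check_asm_listing is a hypothetical helper name, and the expected owner, group and mode are taken from the rules above.

```shell
#!/bin/sh
# Sketch: read `ls -l /dev/asm-disk*` output on stdin and report every entry
# that is not a block device with mode rw-rw---- owned by grid:asmadmin.
# Exit status is 0 when all entries pass and 1 otherwise.
check_asm_listing() {
    awk '
        $1 !~ /^brw-rw----/ || $3 != "grid" || $4 != "asmadmin" {
            print "BAD: " $NF
            bad = 1
        }
        END { exit bad }
    '
}

# Hypothetical usage on each node:
#   ls -l /dev/asm-disk* | check_asm_listing && echo "all ASM disks OK"
```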

--- On Linux 6, once the shared disks are bound with udev, the original /dev/sdb~k are no longer shown

[root@dbtest3 ~]# ls -l /dev/sd*

brw-rw---- 1 root disk 8, 0 Aug 3 10:33 /dev/sda

brw-rw---- 1 root disk 8, 1 Aug 3 10:33 /dev/sda1

brw-rw---- 1 root disk 8, 2 Aug 3 10:33 /dev/sda2

brw-rw---- 1 root disk 8, 3 Aug 3 10:33 /dev/sda3

[root@dbtest4 ~]# ls -l /dev/sd*

brw-rw---- 1 root disk 8, 0 Aug 3 10:33 /dev/sda

brw-rw---- 1 root disk 8, 1 Aug 3 10:33 /dev/sda1

brw-rw---- 1 root disk 8, 2 Aug 3 10:33 /dev/sda2

brw-rw---- 1 root disk 8, 3 Aug 3 10:33 /dev/sda3

--- The fdisk output no longer shows /dev/sdb~k either

[root@dbtest3 ~]# fdisk -l

Disk /dev/sda: 85.9 GB, 85899345920 bytes

255 heads, 63 sectors/track, 10443 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x0002c572

Device Boot Start End Blocks Id System

/dev/sda1 * 1 26 204800 83 Linux

Partition 1 does not end on cylinder boundary.

/dev/sda2 26 536 4096000 82 Linux swap / Solaris

Partition 2 does not end on cylinder boundary.

/dev/sda3 536 10444 79584256 8e Linux LVM

Disk /dev/mapper/vg_dbtest1-LogVol00: 81.5 GB, 81491132416 bytes

255 heads, 63 sectors/track, 9907 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x00000000

[root@dbtest4 ~]# fdisk -l

Disk /dev/sda: 85.9 GB, 85899345920 bytes

255 heads, 63 sectors/track, 10443 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x0002c572

Device Boot Start End Blocks Id System

/dev/sda1 * 1 26 204800 83 Linux

Partition 1 does not end on cylinder boundary.

/dev/sda2 26 536 4096000 82 Linux swap / Solaris

Partition 2 does not end on cylinder boundary.

/dev/sda3 536 10444 79584256 8e Linux LVM

Disk /dev/mapper/vg_dbtest1-LogVol00: 81.5 GB, 81491132416 bytes

255 heads, 63 sectors/track, 9907 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x00000000

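---【For the changes and differences in udev across Linux versions, see Tim Hall's technical blog: https://oracle-base.com/articles/linux/udev-scsi-rules-configuration-in-oracle-linux】

---【In that article, Tim Hall first partitions and formats the shared disks, then binds /dev/sdb1~sde1 with udev】

---【In testing, after binding with udev, /dev/sdb1~sde1 are no longer shown either; only /dev/sdb~e are shown】

---【After testing the 99 rules file binding, binding the shared disks as raw devices with the 60-raw.rules file was tested as well】

---【Testing suggests that on Linux 6, binding shared disks as raw devices through 60-raw.rules no longer supports the UUID method】

---【When the binding uses UUIDs, the rules in 60-raw.rules do not take effect when start_udev runs】

---【When the binding uses device names, the rules in 60-raw.rules do take effect when start_udev runs】

---【However, the same shared disk may be enumerated in a different order on different RAC nodes, and device names can also change after a reboot, so binding raw devices by device name can leave the names drifting and mismatched】

---【Testing also showed a caching problem with raw devices created through 60-raw.rules: even after 60-raw.rules is modified, the rules are reloaded and udev is started again,】

---【the raw devices created by the previous binding still exist, and they only disappear after a reboot. The test steps and results follow】

---【Disk information before binding the raw devices】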

[root@dbtest3 ~]# ls -l /dev/raw/*

crw-rw---- 1 root disk 162, 0 Aug 3 11:02 /dev/raw/rawctl

[root@dbtest3 ~]# ls -l /dev/sd*

brw-rw---- 1 root disk 8, 0 Aug 3 11:02 /dev/sda

brw-rw---- 1 root disk 8, 1 Aug 3 11:02 /dev/sda1

brw-rw---- 1 root disk 8, 2 Aug 3 11:02 /dev/sda2

brw-rw---- 1 root disk 8, 3 Aug 3 11:02 /dev/sda3

brw-rw---- 1 root disk 8, 16 Aug 3 11:02 /dev/sdb

brw-rw---- 1 root disk 8, 32 Aug 3 11:02 /dev/sdc

brw-rw---- 1 root disk 8, 48 Aug 3 11:02 /dev/sdd

brw-rw---- 1 root disk 8, 64 Aug 3 11:02 /dev/sde

brw-rw---- 1 root disk 8, 80 Aug 3 11:02 /dev/sdf

brw-rw---- 1 root disk 8, 96 Aug 3 11:02 /dev/sdg

brw-rw---- 1 root disk 8, 112 Aug 3 11:02 /dev/sdh

brw-rw---- 1 root disk 8, 128 Aug 3 11:02 /dev/sdi

brw-rw---- 1 root disk 8, 144 Aug 3 11:02 /dev/sdj

brw-rw---- 1 root disk 8, 160 Aug 3 11:02 /dev/sdk

[root@dbtest4 ~]# ls -l /dev/raw/*

crw-rw---- 1 root disk 162, 0 Aug 3 11:03 /dev/raw/rawctl

[root@dbtest4 ~]# ls -l /dev/sd*

brw-rw---- 1 root disk 8, 0 Aug 3 11:03 /dev/sda

brw-rw---- 1 root disk 8, 1 Aug 3 11:03 /dev/sda1

brw-rw---- 1 root disk 8, 2 Aug 3 11:03 /dev/sda2

brw-rw---- 1 root disk 8, 3 Aug 3 11:03 /dev/sda3

brw-rw---- 1 root disk 8, 16 Aug 3 11:03 /dev/sdb

brw-rw---- 1 root disk 8, 32 Aug 3 11:03 /dev/sdc

brw-rw---- 1 root disk 8, 48 Aug 3 11:03 /dev/sdd

brw-rw---- 1 root disk 8, 64 Aug 3 11:03 /dev/sde

brw-rw---- 1 root disk 8, 80 Aug 3 11:03 /dev/sdf

brw-rw---- 1 root disk 8, 96 Aug 3 11:03 /dev/sdg

brw-rw---- 1 root disk 8, 112 Aug 3 11:03 /dev/sdh

brw-rw---- 1 root disk 8, 128 Aug 3 11:03 /dev/sdi

brw-rw---- 1 root disk 8, 144 Aug 3 11:03 /dev/sdj

brw-rw---- 1 root disk 8, 160 Aug 3 11:03 /dev/sdk

--- 2. Write the following rules to the /etc/udev/rules.d/60-raw.rules file

---【Bind the shared disks as raw devices by UUID】

[root@dbtest3 ~]# vi /etc/udev/rules.d/60-raw.rules

ACTION=="add", KERNEL=="sd*", PROGRAM=="/sbin/scsi_id -g -u -d %p", RESULT=="36000c29cadb411725a7d6daacd6ad108", RUN+="/bin/raw /dev/raw/raw11 %N"

ACTION=="add", KERNEL=="sd*", PROGRAM=="/sbin/scsi_id -g -u -d %p", RESULT=="36000c29838a242f103bb6941175efec1", RUN+="/bin/raw /dev/raw/raw12 %N"

ACTION=="add", KERNEL=="sd*", PROGRAM=="/sbin/scsi_id -g -u -d %p", RESULT=="36000c29227146322659b155492a717c3", RUN+="/bin/raw /dev/raw/raw13 %N"

ACTION=="add", KERNEL=="sd*", PROGRAM=="/sbin/scsi_id -g -u -d %p", RESULT=="36000c298040617a958533e6a46671d60", RUN+="/bin/raw /dev/raw/raw14 %N"

ACTION=="add", KERNEL=="sd*", PROGRAM=="/sbin/scsi_id -g -u -d %p", RESULT=="36000c2973cf2951a3b61c87301e1c99a", RUN+="/bin/raw /dev/raw/raw15 %N"

ACTION=="add", KERNEL=="sd*", PROGRAM=="/sbin/scsi_id -g -u -d %p", RESULT=="36000c29a926c801b7f9a3b245308e092", RUN+="/bin/raw /dev/raw/raw16 %N"

ACTION=="add", KERNEL=="sd*", PROGRAM=="/sbin/scsi_id -g -u -d %p", RESULT=="36000c29944cdbb8110dc96a802e142c8", RUN+="/bin/raw /dev/raw/raw17 %N"

ACTION=="add", KERNEL=="sd*", PROGRAM=="/sbin/scsi_id -g -u -d %p", RESULT=="36000c29b1312cf84809d67bc7c8dbe28", RUN+="/bin/raw /dev/raw/raw18 %N"

ACTION=="add", KERNEL=="sd*", PROGRAM=="/sbin/scsi_id -g -u -d %p", RESULT=="36000c29d4d97c71a36232c4e0a322be0", RUN+="/bin/raw /dev/raw/raw19 %N"

ACTION=="add", KERNEL=="sd*", PROGRAM=="/sbin/scsi_id -g -u -d %p", RESULT=="36000c29d2c6230eae26892a4670d909e", RUN+="/bin/raw /dev/raw/raw20 %N"

KERNEL=="raw[11-20]", OWNER="grid", GROUP="asmadmin", MODE="660"

[root@dbtest4 ~]# vi /etc/udev/rules.d/60-raw.rules

ACTION=="add", KERNEL=="sd*", PROGRAM=="/sbin/scsi_id -g -u -d %p", RESULT=="36000c29cadb411725a7d6daacd6ad108", RUN+="/bin/raw /dev/raw/raw11 %N"

ACTION=="add", KERNEL=="sd*", PROGRAM=="/sbin/scsi_id -g -u -d %p", RESULT=="36000c29838a242f103bb6941175efec1", RUN+="/bin/raw /dev/raw/raw12 %N"

ACTION=="add", KERNEL=="sd*", PROGRAM=="/sbin/scsi_id -g -u -d %p", RESULT=="36000c29227146322659b155492a717c3", RUN+="/bin/raw /dev/raw/raw13 %N"

ACTION=="add", KERNEL=="sd*", PROGRAM=="/sbin/scsi_id -g -u -d %p", RESULT=="36000c298040617a958533e6a46671d60", RUN+="/bin/raw /dev/raw/raw14 %N"

ACTION=="add", KERNEL=="sd*", PROGRAM=="/sbin/scsi_id -g -u -d %p", RESULT=="36000c2973cf2951a3b61c87301e1c99a", RUN+="/bin/raw /dev/raw/raw15 %N"

ACTION=="add", KERNEL=="sd*", PROGRAM=="/sbin/scsi_id -g -u -d %p", RESULT=="36000c29a926c801b7f9a3b245308e092", RUN+="/bin/raw /dev/raw/raw16 %N"

ACTION=="add", KERNEL=="sd*", PROGRAM=="/sbin/scsi_id -g -u -d %p", RESULT=="36000c29944cdbb8110dc96a802e142c8", RUN+="/bin/raw /dev/raw/raw17 %N"

ACTION=="add", KERNEL=="sd*", PROGRAM=="/sbin/scsi_id -g -u -d %p", RESULT=="36000c29b1312cf84809d67bc7c8dbe28", RUN+="/bin/raw /dev/raw/raw18 %N"

ACTION=="add", KERNEL=="sd*", PROGRAM=="/sbin/scsi_id -g -u -d %p", RESULT=="36000c29d4d97c71a36232c4e0a322be0", RUN+="/bin/raw /dev/raw/raw19 %N"

ACTION=="add", KERNEL=="sd*", PROGRAM=="/sbin/scsi_id -g -u -d %p", RESULT=="36000c29d2c6230eae26892a4670d909e", RUN+="/bin/raw /dev/raw/raw20 %N"

KERNEL=="raw[11-20]", OWNER="grid", GROUP="asmadmin", MODE="660"

--- Reload the rules file and start udev

[root@dbtest3 ~]# udevadm control --reload-rules

[root@dbtest3 ~]# start_udev

Starting udev: udevd[9693]: GOTO 'pulseaudio_check_usb' has no matching label in: '/lib/udev/rules.d/90-pulseaudio.rules'

[ OK ]

[root@dbtest4 ~]# udevadm control --reload-rules

[root@dbtest4 ~]# start_udev

Starting udev: udevd[11386]: GOTO 'pulseaudio_check_usb' has no matching label in: '/lib/udev/rules.d/90-pulseaudio.rules'

[ OK ]

--- No raw device files were created by the binding

[root@dbtest3 ~]# ls -l /dev/raw/*

crw-rw---- 1 root disk 162, 0 Aug 3 11:20 /dev/raw/rawctl

[root@dbtest4 ~]# ls -l /dev/raw/*

crw-rw---- 1 root disk 162, 0 Aug 3 11:24 /dev/raw/rawctl

[root@dbtest4 ~]# vi /etc/udev/rules.d/60-raw.rules
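
--- One possible reason the UUID rules above did not fire (an assumption, not confirmed by this test): in udev, %p expands to the sysfs devpath, while the -d option of scsi_id expects a device node such as /dev/sdb, so the PROGRAM call may return nothing for RESULT to match. The sketch below emits a variant that passes /dev/%k, in the same style as the working 99 rules. It was not run on these hosts, and make_raw_rule is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch (untested assumption): emit a 60-raw.rules line that hands scsi_id a
# device node (/dev/%k, the kernel device name) instead of the devpath (%p).
# $1 = ID reported by scsi_id, $2 = raw device number to bind.
# %% in the printf format produces a literal % for udev to expand later.
make_raw_rule() {
    wwid=$1
    rawnum=$2
    printf 'ACTION=="add", KERNEL=="sd*", PROGRAM=="/sbin/scsi_id --whitelisted --replace-whitespace --device=/dev/%%k", RESULT=="%s", RUN+="/bin/raw /dev/raw/raw%s %%N"\n' "$wwid" "$rawnum"
}

# Example: the rule for the first shared disk, bound to raw11.
make_raw_rule 36000c29cadb411725a7d6daacd6ad108 11
```

--- If a rule of this form does create the raw device, the limitation would lie in the -d %p arguments and not in UUID matching itself; that would need to be verified on a test node.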

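--- 3. Bind the shared disks as raw devices by device name

---【To demonstrate the raw device caching problem mentioned above, this test binds 5 shared disks at a time, in two rounds, to confirm that the problem really exists】

---【Note: binding by device name on the two nodes here only serves to confirm the caching problem; it was not verified that sdb~f on node 1 and sdb~f on node 2 correspond one-to-one to the same disks】

---【Round 1: bind shared disks as raw devices by device name】

--- Write the following rules to the /etc/udev/rules.d/60-raw.rules file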

[root@dbtest3 ~]# vi /etc/udev/rules.d/60-raw.rules

ACTION=="add", KERNEL=="sdb", RUN+="/bin/raw /dev/raw/raw11 %N"

ACTION=="add", KERNEL=="sdc", RUN+="/bin/raw /dev/raw/raw12 %N"

ACTION=="add", KERNEL=="sdd", RUN+="/bin/raw /dev/raw/raw13 %N"

ACTION=="add", KERNEL=="sde", RUN+="/bin/raw /dev/raw/raw14 %N"

ACTION=="add", KERNEL=="sdf", RUN+="/bin/raw /dev/raw/raw15 %N"

KERNEL=="raw[11-15]", OWNER="grid", GROUP="asmadmin", MODE="660"

[root@dbtest4 ~]# vi /etc/udev/rules.d/60-raw.rules

ACTION=="add", KERNEL=="sdb", RUN+="/bin/raw /dev/raw/raw11 %N"

ACTION=="add", KERNEL=="sdc", RUN+="/bin/raw /dev/raw/raw12 %N"

ACTION=="add", KERNEL=="sdd", RUN+="/bin/raw /dev/raw/raw13 %N"

ACTION=="add", KERNEL=="sde", RUN+="/bin/raw /dev/raw/raw14 %N"

ACTION=="add", KERNEL=="sdf", RUN+="/bin/raw /dev/raw/raw15 %N"

KERNEL=="raw[11-15]", OWNER="grid", GROUP="asmadmin", MODE="660"

--- Reload the rules file and start udev

[root@dbtest3 ~]# udevadm control --reload-rules

[root@dbtest3 ~]# start_udev

Starting udev: udevd[10586]: GOTO 'pulseaudio_check_usb' has no matching label in: '/lib/udev/rules.d/90-pulseaudio.rules'

[ OK ]

[root@dbtest4 ~]# udevadm control --reload-rules

[root@dbtest4 ~]# start_udev

Starting udev: udevd[12262]: GOTO 'pulseaudio_check_usb' has no matching label in: '/lib/udev/rules.d/90-pulseaudio.rules'

[ OK ]

--- Binding succeeded and the raw device files were created

[root@dbtest3 ~]# ls -l /dev/raw/*

crw-rw---- 1 root disk 162, 11 Aug 3 11:31 /dev/raw/raw11

crw-rw---- 1 root disk 162, 12 Aug 3 11:31 /dev/raw/raw12

crw-rw---- 1 root disk 162, 13 Aug 3 11:31 /dev/raw/raw13

crw-rw---- 1 root disk 162, 14 Aug 3 11:31 /dev/raw/raw14

crw-rw---- 1 root disk 162, 15 Aug 3 11:31 /dev/raw/raw15

crw-rw---- 1 root disk 162, 0 Aug 3 11:31 /dev/raw/rawctl

[root@dbtest4 ~]# ls -l /dev/raw/*

crw-rw---- 1 root disk 162, 11 Aug 3 11:31 /dev/raw/raw11

crw-rw---- 1 root disk 162, 12 Aug 3 11:31 /dev/raw/raw12

crw-rw---- 1 root disk 162, 13 Aug 3 11:31 /dev/raw/raw13

crw-rw---- 1 root disk 162, 14 Aug 3 11:31 /dev/raw/raw14

crw-rw---- 1 root disk 162, 15 Aug 3 11:31 /dev/raw/raw15

crw-rw---- 1 root disk 162, 0 Aug 3 11:31 /dev/raw/rawctl
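
--- The listing above still shows root:disk ownership although the rule asks for grid:asmadmin. A likely cause: udev match keys are shell-style globs, so [11-15] is a character class that matches a single character (1 or 5), not the numbers 11 to 15. Shell case patterns follow the same bracket rules, which gives a quick way to check a pattern. The replacement pattern raw1[1-5] is a suggestion that was not tested on these hosts.

```shell
#!/bin/sh
# Sketch: test a device name against a glob using the shell's own matching,
# which treats [...] the same way udev match keys do (one character per class).
# $1 = pattern, $2 = name. Returns 0 on match, 1 otherwise.
glob_match() {
    case "$2" in
        $1) return 0 ;;
        *)  return 1 ;;
    esac
}

# Compare the pattern used in the rules with the suggested replacement.
for name in raw1 raw5 raw11 raw15; do
    for pattern in 'raw[11-15]' 'raw1[1-5]'; do
        if glob_match "$pattern" "$name"; then
            echo "$pattern matches $name"
        else
            echo "$pattern does not match $name"
        fi
    done
done
```

--- By the same reasoning, a range such as raw11 to raw20 needs two alternatives, for example raw1[1-9] and raw20, since no single bracket expression spans both.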

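---【Round 2: bind shared disks as raw devices by device name】

--- Write the following rules to the /etc/udev/rules.d/60-raw.rules file

---【Delete the raw device files /dev/raw/raw11~15 created in round 1】

---【Remove the round 1 rules from /etc/udev/rules.d/60-raw.rules, then add the rules below】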

[root@dbtest3 ~]# rm -f /dev/raw/raw1*

[root@dbtest4 ~]# rm -f /dev/raw/raw1*

[root@dbtest3 ~]# vi /etc/udev/rules.d/60-raw.rules

ACTION=="add", KERNEL=="sdg", RUN+="/bin/raw /dev/raw/raw21 %N"

ACTION=="add", KERNEL=="sdh", RUN+="/bin/raw /dev/raw/raw22 %N"

ACTION=="add", KERNEL=="sdi", RUN+="/bin/raw /dev/raw/raw23 %N"

ACTION=="add", KERNEL=="sdj", RUN+="/bin/raw /dev/raw/raw24 %N"

ACTION=="add", KERNEL=="sdk", RUN+="/bin/raw /dev/raw/raw25 %N"

KERNEL=="raw[21-25]", OWNER="grid", GROUP="asmadmin", MODE="660"

[root@dbtest4 ~]# vi /etc/udev/rules.d/60-raw.rules

ACTION=="add", KERNEL=="sdg", RUN+="/bin/raw /dev/raw/raw21 %N"

ACTION=="add", KERNEL=="sdh", RUN+="/bin/raw /dev/raw/raw22 %N"

ACTION=="add", KERNEL=="sdi", RUN+="/bin/raw /dev/raw/raw23 %N"

ACTION=="add", KERNEL=="sdj", RUN+="/bin/raw /dev/raw/raw24 %N"

ACTION=="add", KERNEL=="sdk", RUN+="/bin/raw /dev/raw/raw25 %N"

KERNEL=="raw[21-25]", OWNER="grid", GROUP="asmadmin", MODE="660"

--- Reload the rules file and start udev

[root@dbtest3 ~]# udevadm control --reload-rules

[root@dbtest3 ~]# start_udev

Starting udev: udevd[11431]: GOTO 'pulseaudio_check_usb' has no matching label in: '/lib/udev/rules.d/90-pulseaudio.rules'

[ OK ]

[root@dbtest4 ~]# udevadm control --reload-rules

[root@dbtest4 ~]# start_udev

Starting udev: udevd[13102]: GOTO 'pulseaudio_check_usb' has no matching label in: '/lib/udev/rules.d/90-pulseaudio.rules'

[ OK ]

--- Raw device files created by the binding

---【The 5 raw device files /dev/raw/raw11~15 from round 1 still exist】

---【After a reboot, the 5 raw device files /dev/raw/raw11~15 from round 1 disappear】

[root@dbtest3 ~]# ls -l /dev/raw/*

crw-rw---- 1 root disk 162, 11 Aug 3 12:16 /dev/raw/raw11

crw-rw---- 1 root disk 162, 12 Aug 3 12:16 /dev/raw/raw12

crw-rw---- 1 root disk 162, 13 Aug 3 12:16 /dev/raw/raw13

crw-rw---- 1 root disk 162, 14 Aug 3 12:16 /dev/raw/raw14

crw-rw---- 1 root disk 162, 15 Aug 3 12:16 /dev/raw/raw15

crw-rw---- 1 root disk 162, 21 Aug 3 12:16 /dev/raw/raw21

crw-rw---- 1 root disk 162, 22 Aug 3 12:16 /dev/raw/raw22

crw-rw---- 1 root disk 162, 23 Aug 3 12:16 /dev/raw/raw23

crw-rw---- 1 root disk 162, 24 Aug 3 12:16 /dev/raw/raw24

crw-rw---- 1 root disk 162, 25 Aug 3 12:16 /dev/raw/raw25

crw-rw---- 1 root disk 162, 0 Aug 3 12:16 /dev/raw/rawctl

[root@dbtest4 ~]# ls -l /dev/raw/*

crw-rw---- 1 root disk 162, 11 Aug 3 12:16 /dev/raw/raw11

crw-rw---- 1 root disk 162, 12 Aug 3 12:16 /dev/raw/raw12

crw-rw---- 1 root disk 162, 13 Aug 3 12:16 /dev/raw/raw13

crw-rw---- 1 root disk 162, 14 Aug 3 12:16 /dev/raw/raw14

crw-rw---- 1 root disk 162, 15 Aug 3 12:16 /dev/raw/raw15

crw-rw---- 1 root disk 162, 21 Aug 3 12:16 /dev/raw/raw21

crw-rw---- 1 root disk 162, 22 Aug 3 12:16 /dev/raw/raw22

crw-rw---- 1 root disk 162, 23 Aug 3 12:16 /dev/raw/raw23

crw-rw---- 1 root disk 162, 24 Aug 3 12:16 /dev/raw/raw24

crw-rw---- 1 root disk 162, 25 Aug 3 12:16 /dev/raw/raw25

crw-rw---- 1 root disk 162, 0 Aug 3 12:16 /dev/raw/rawctl
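
--- One possible explanation for the leftover devices, not verified in this test: rm -f removes only the device nodes, while the raw bindings themselves live in the kernel until they are released or the system reboots, so starting udev recreates the nodes. According to raw(8), binding a raw device to major 0 and minor 0 releases it. The sketch below only prints the commands (a dry run); unbind_raw_cmds is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch: print the commands that would release raw devices $1 through $2.
# Nothing is executed here; pipe the output to `sh` as root to apply it.
unbind_raw_cmds() {
    n=$1
    while [ "$n" -le "$2" ]; do
        echo "raw /dev/raw/raw$n 0 0"
        n=$((n + 1))
    done
}

# Commands for the five round 1 devices.
unbind_raw_cmds 11 15
```

--- Running raw -qa before and after shows which bindings the kernel currently holds, which is a more direct check than listing /dev/raw.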


Configuring Oracle RAC shared storage disks with VMware Workstation

#Environment information

VMware® Workstation 10.0.1 build-1379776

#前提环境准备

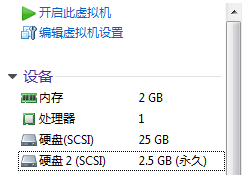

Two Linux virtual machines, rac1 and rac2, have already been installed.

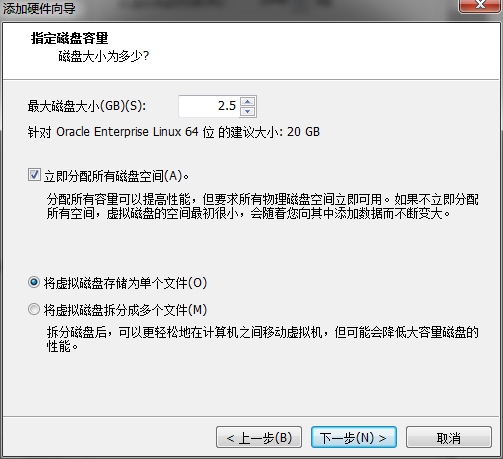

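#Add new disks to serve as RAC shared disks (plan the number of disks and the size of each according to your data volume; this is only a demonstration)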

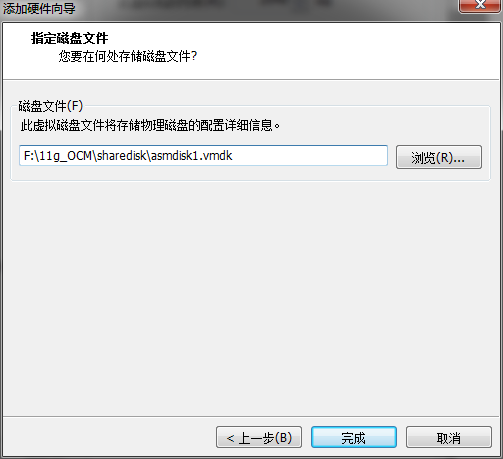

#This demonstration adds 14 virtual disks of 2.5 GB each, named asmdisk1~asmdisk14

#Shut down the virtual machines while adding the disks

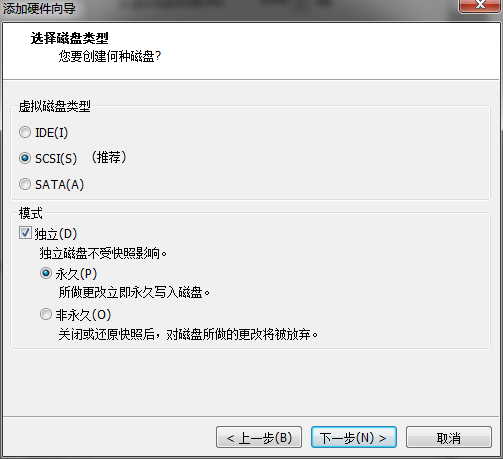

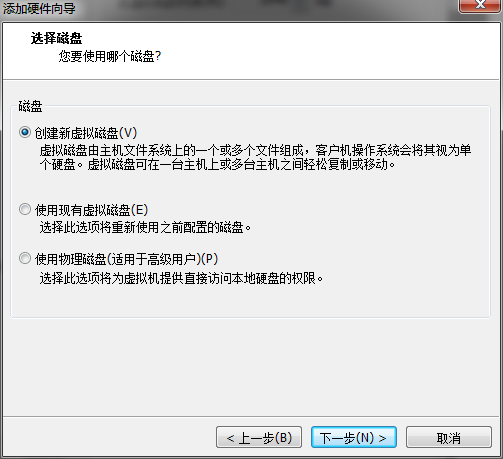

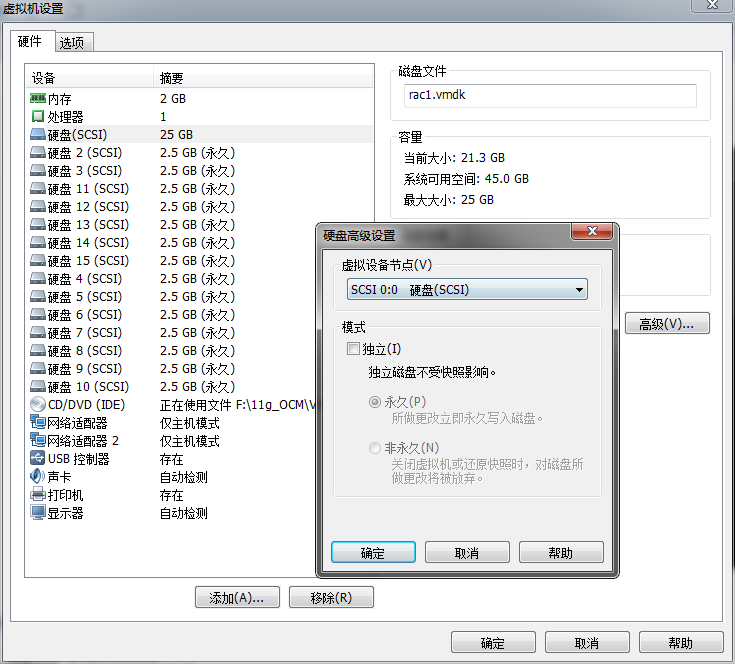

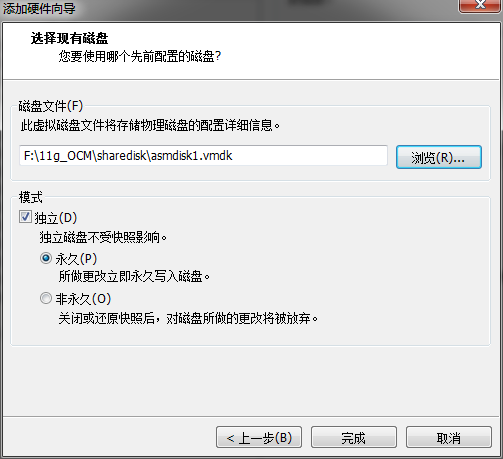

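On the rac1 virtual machine, click "Edit virtual machine settings", click "Add" in the dialog that opens, then select "Hard Disk" and click "Next". The screen below appears; follow the steps shown in the screenshots.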

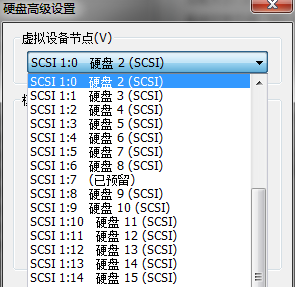

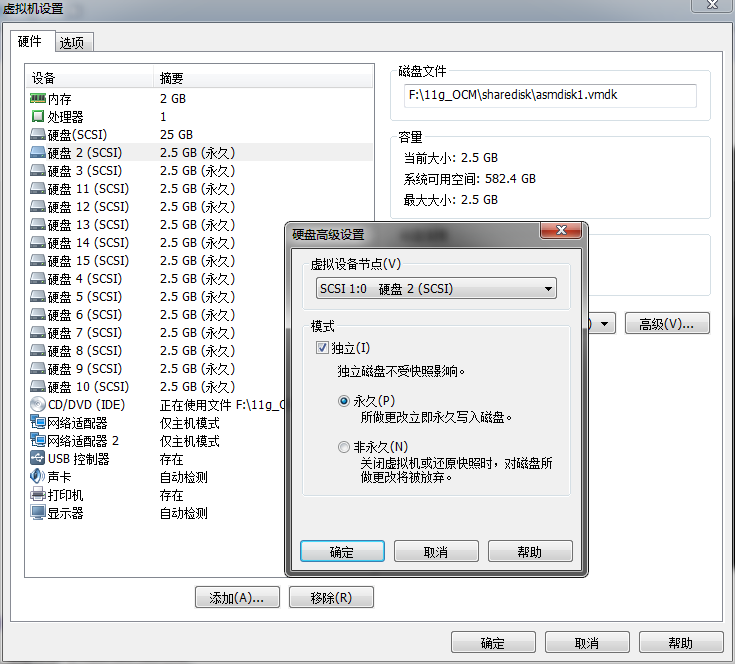

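After clicking "Finish", wait for the disk to be created, then select the newly added disk, "Disk 2", on the virtual machine's main screen.

In the dialog that opens, select "Disk 2" and click "Advanced" on the right; the following dialog appears.

Set the SCSI node of Disk 2 to 1:0 and click OK. The first shared disk is now added. Repeat the same steps to add asmdisk2~asmdisk14, changing the SCSI node after each disk is added. Note that 1:7 is skipped, because ID 7 on a VMware virtual SCSI bus is reserved for the controller itself. The SCSI node for each disk is: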

Disk 2 asmdisk1 SCSI 1:0

Disk 3 asmdisk2 SCSI 1:1

Disk 4 asmdisk3 SCSI 1:2

Disk 5 asmdisk4 SCSI 1:3

Disk 6 asmdisk5 SCSI 1:4

Disk 7 asmdisk6 SCSI 1:5

Disk 8 asmdisk7 SCSI 1:6

Disk 9 asmdisk8 SCSI 1:8

Disk 10 asmdisk9 SCSI 1:9

Disk 11 asmdisk10 SCSI 1:10

Disk 12 asmdisk11 SCSI 1:11

Disk 13 asmdisk12 SCSI 1:12

Disk 14 asmdisk13 SCSI 1:13

Disk 15 asmdisk14 SCSI 1:14

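A note on why the 14 newly added disks asmdisk1~asmdisk14 are assigned SCSI nodes 1:0~1:14; the reason can be seen in the figure below:

The disk allocated when the operating system was installed uses SCSI 0:0 by default and is a local (non-shared) disk. Because the 14 disks added afterwards are meant to be shared, they must go on a separate SCSI controller; SCSI 1 is chosen here.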

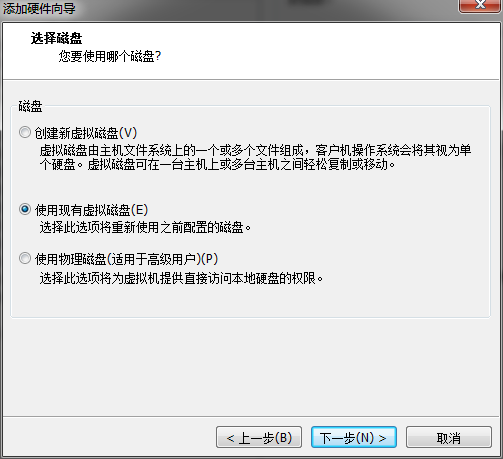
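The disk-to-SCSI-node assignment used in this walkthrough follows a simple rule: disks are placed on controller 1 in order, skipping unit 7. As a rough sketch (the helper name `scsi_unit` is hypothetical), the table can be reproduced like this:

```shell
#!/bin/bash
# scsi_unit INDEX
# Prints the unit number on the controller for the INDEX-th shared disk
# (1-based), skipping unit 7.
scsi_unit() {
    local i=$1
    if [ "$i" -le 7 ]; then
        echo $((i - 1))
    else
        echo "$i"
    fi
}

# Print the assignment for asmdisk1..asmdisk14 on controller 1.
i=1
while [ "$i" -le 14 ]; do
    echo "Disk $((i + 1)) asmdisk$i SCSI 1:$(scsi_unit "$i")"
    i=$((i + 1))
done
```

With unit 7 unavailable, a single controller offers units 0~6 and 8~15, so this scheme holds at most 15 disks per controller.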

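Next, add the disks just created on rac1 to the rac2 node. The steps are the same as above, except that when choosing the disk you select "Use an existing virtual disk" and then browse to each of asmdisk1~asmdisk14 in turn.

Once all disks are added, change the SCSI node of each disk exactly as on rac1, so that both nodes use the same values.

After completing the steps above, you still need to edit the virtual machine configuration file of both nodes. Open rac1.vmx (the configuration file of the rac1 virtual machine) in a text editor and adjust the entries for the 14 added disks as follows: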

scsi1:0.present = "TRUE"

scsi1:0.fileName = "F:\11g_OCM\sharedisk\asmdisk1.vmdk"

scsi1:0.mode = "independent-persistent"

scsi1:0.deviceType = "disk"

scsi1:0.redo = ""

scsi1:1.present = "TRUE"

scsi1:1.fileName = "F:\11g_OCM\sharedisk\asmdisk2.vmdk"

scsi1:1.mode = "independent-persistent"

scsi1:1.deviceType = "disk"

scsi1:1.redo = ""

scsi1:2.present = "TRUE"

scsi1:2.fileName = "F:\11g_OCM\sharedisk\asmdisk3.vmdk"

scsi1:2.mode = "independent-persistent"

scsi1:2.deviceType = "disk"

scsi1:2.redo = ""

scsi1:3.present = "TRUE"

scsi1:3.fileName = "F:\11g_OCM\sharedisk\asmdisk4.vmdk"

scsi1:3.mode = "independent-persistent"

scsi1:3.deviceType = "disk"

scsi1:3.redo = ""

scsi1:4.present = "TRUE"

scsi1:4.fileName = "F:\11g_OCM\sharedisk\asmdisk5.vmdk"

scsi1:4.mode = "independent-persistent"

scsi1:4.deviceType = "disk"

scsi1:4.redo = ""

scsi1:5.present = "TRUE"

scsi1:5.fileName = "F:\11g_OCM\sharedisk\asmdisk6.vmdk"

scsi1:5.mode = "independent-persistent"

scsi1:5.deviceType = "disk"

scsi1:5.redo = ""

scsi1:6.present = "TRUE"

scsi1:6.fileName = "F:\11g_OCM\sharedisk\asmdisk7.vmdk"

scsi1:6.mode = "independent-persistent"

scsi1:6.deviceType = "disk"

scsi1:6.redo = ""

scsi1:8.present = "TRUE"

scsi1:8.fileName = "F:\11g_OCM\sharedisk\asmdisk8.vmdk"

scsi1:8.mode = "independent-persistent"

scsi1:8.deviceType = "disk"

scsi1:8.redo = ""

scsi1:9.present = "TRUE"

scsi1:9.fileName = "F:\11g_OCM\sharedisk\asmdisk9.vmdk"

scsi1:9.mode = "independent-persistent"

scsi1:9.deviceType = "disk"

scsi1:9.redo = ""

scsi1:10.present = "TRUE"

scsi1:10.fileName = "F:\11g_OCM\sharedisk\asmdisk10.vmdk"

scsi1:10.mode = "independent-persistent"

scsi1:10.deviceType = "disk"

scsi1:10.redo = ""

scsi1:11.present = "TRUE"

scsi1:11.fileName = "F:\11g_OCM\sharedisk\asmdisk11.vmdk"

scsi1:11.mode = "independent-persistent"

scsi1:11.deviceType = "disk"

scsi1:11.redo = ""

scsi1:12.present = "TRUE"

scsi1:12.fileName = "F:\11g_OCM\sharedisk\asmdisk12.vmdk"

scsi1:12.mode = "independent-persistent"

scsi1:12.deviceType = "disk"

scsi1:12.redo = ""

scsi1:13.present = "TRUE"

scsi1:13.fileName = "F:\11g_OCM\sharedisk\asmdisk13.vmdk"

scsi1:13.mode = "independent-persistent"

scsi1:13.deviceType = "disk"

scsi1:13.redo = ""

scsi1:14.present = "TRUE"

scsi1:14.fileName = "F:\11g_OCM\sharedisk\asmdisk14.vmdk"

scsi1:14.mode = "independent-persistent"

scsi1:14.deviceType = "disk"

scsi1:14.redo = ""
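Typing 70 nearly identical lines by hand is error-prone, so the per-disk entries above could instead be generated and pasted into the .vmx file. This is a minimal sketch, not part of the original procedure: the function name `gen_vmx_disks` is hypothetical, and it assumes a POSIX shell is available (for example on one of the Linux guests) to produce the text.

```shell
#!/bin/bash
# gen_vmx_disks DIR UNIT...
# Prints one five-line .vmx stanza per SCSI unit on controller 1.
# The Nth unit given is paired with DIR\asmdiskN.vmdk.
gen_vmx_disks() {
    local dir=$1; shift
    local n=1 unit
    for unit in "$@"; do
        printf 'scsi1:%s.present = "TRUE"\n' "$unit"
        # "\\" in the format string prints a single backslash.
        printf 'scsi1:%s.fileName = "%s\\asmdisk%s.vmdk"\n' "$unit" "$dir" "$n"
        printf 'scsi1:%s.mode = "independent-persistent"\n' "$unit"
        printf 'scsi1:%s.deviceType = "disk"\n' "$unit"
        printf 'scsi1:%s.redo = ""\n\n' "$unit"
        n=$((n + 1))
    done
}

# Units used in this walkthrough (7 is skipped).
gen_vmx_disks 'F:\11g_OCM\sharedisk' 0 1 2 3 4 5 6 8 9 10 11 12 13 14
```

The unit list is passed explicitly so that the gap at unit 7 stays visible and other layouts can be generated the same way.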

Also add the following parameters to rac1.vmx:

disk.EnableUUID = "FALSE"

disk.locking = "FALSE"

diskLib.dataCacheMaxSize = "0"

diskLib.dataCacheMaxReadAheadSize = "0"

diskLib.dataCacheMinReadAheadSize = "0"

diskLib.dataCachePageSize = "4096"

diskLib.maxUnsyncedWrites = "0"

scsi1.shared = "TRUE"

scsi1.present = "TRUE"

scsi1.virtualDev = "lsilogic"

scsi1.sharedBus = "VIRTUAL"

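Edit rac2.vmx (the configuration file of the rac2 virtual machine) in a text editor in the same way. Once this is done, the shared-disk configuration at the VMware Workstation level is essentially complete.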
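Since both nodes must carry the same settings for the shared controller, it can help to compare the `scsi1` entries of the two files before powering the virtual machines on. The sketch below is one possible check; the function names `scsi1_lines` and `same_scsi1` are hypothetical, and it assumes the two .vmx files are readable from a POSIX shell.

```shell
#!/bin/bash
# scsi1_lines FILE
# Prints the scsi1 settings of a .vmx file, normalised (carriage returns
# removed, spacing around "=" dropped) and sorted.
scsi1_lines() {
    grep '^scsi1[:.]' "$1" | tr -d '\r' \
        | sed 's/[[:space:]]*=[[:space:]]*/=/' | sort
}

# same_scsi1 A B
# Succeeds when both files carry identical scsi1 settings.
same_scsi1() {
    local a b
    a=$(scsi1_lines "$1")
    b=$(scsi1_lines "$2")
    [ "$a" = "$b" ]
}

# Usage:
#   same_scsi1 rac1.vmx rac2.vmx && echo "scsi1 settings match"
```

Note that two files with no `scsi1` entries at all also compare as equal, so the check is only meaningful after the entries have been added.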

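The next step is to configure the shared disks inside the operating system for use by RAC. Either asmlib or udev can be used, with one difference worth noting: with asmlib, the 14 added disks must be partitioned before they can be created as ASM disks for RAC, whereas udev can bind the unpartitioned disks directly.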
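To illustrate the udev route on unpartitioned disks, here is a rough sketch that prints one rule per whole-disk device. Everything specific in it is an assumption rather than something taken from this article: the function name `gen_asm_rules`, the owner `grid`, the group `asmadmin`, the symlink names `asm-diskN`, and the device names passed in. It matches disks by kernel name, which is only reliable as long as the device order does not change between boots.

```shell
#!/bin/bash
# gen_asm_rules OWNER GROUP DEV...
# Prints one udev rule per whole-disk kernel name. Each rule adds an
# asm-diskN symlink and sets ownership and mode on the device node.
gen_asm_rules() {
    local owner=$1 group=$2; shift 2
    local n=1 dev
    for dev in "$@"; do
        printf 'KERNEL=="%s", SYMLINK+="asm-disk%s", OWNER="%s", GROUP="%s", MODE="0660"\n' \
            "$dev" "$n" "$owner" "$group"
        n=$((n + 1))
    done
}

# Example with three hypothetical device names; review the output before
# saving it under /etc/udev/rules.d/.
gen_asm_rules grid asmadmin sdb sdc sdd
```

After saving the rules, they would be reloaded with `udevadm control --reload-rules` followed by `start_udev`, as in the transcript earlier in this document.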
